![Biggest AI Tool and Model Updates in 2023 [With Features]](https://meetcody.ai/wp-content/uploads/2023/11/All-AI-Tool-and-Model-Updates.png)

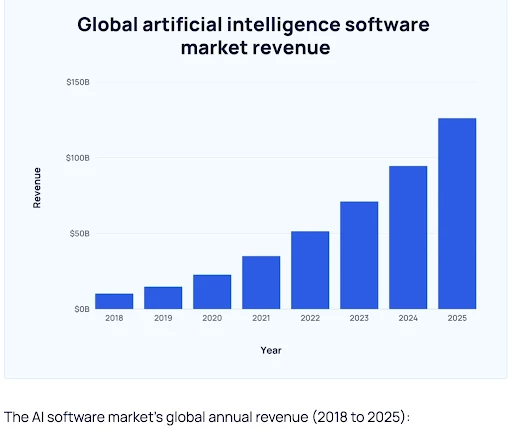

The AI market has grown by 38% in 2023, and one of the major reasons behind it is the large number of AI models and tools introduced by big brands!

But why are companies launching AI models and tools for business?

PwC reports that AI can boost employee potential by up to 40% by 2025!

Check out the graph below for the year-on-year revenue projections in the AI market (2018-2025) —

With a total of 14,700 AI startups in the United States alone as of March 2023, the business potential of AI is undoubtedly huge!

What are Large Language Models (LLMs) in AI?

Large Language Models (LLMs) are advanced AI tools designed to simulate human-like intelligence through language understanding and generation. These models operate by statistically analyzing extensive data to learn how words and phrases interconnect.

As a subset of artificial intelligence, LLMs are adept at a range of tasks, including creating text, categorizing it, answering questions in dialogue, and translating languages.

Their “large” designation comes from the substantial datasets they’re trained on. The foundation of LLMs lies in machine learning, particularly in a neural network framework known as a transformer model. This allows them to effectively handle various natural language processing (NLP) tasks, showcasing their versatility in understanding and manipulating language.
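To make the idea of "statistically analyzing data to learn how words and phrases interconnect" concrete, here is a deliberately tiny sketch. It is not how a transformer works internally; it is a simple bigram counter, and the function names and sample sentence are made up for illustration. Real LLMs learn far richer patterns over billions of tokens, but the core task of predicting the next word from observed text is the same.

```python
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        counts[current_word][next_word] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy corpus (illustrative only).
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram_counts(corpus)

# "cat" follows "the" twice and "mat" follows it once, so "cat" wins.
print(most_likely_next(model, "the"))
```

An LLM replaces the lookup table with a neural network that scores every possible next token given the whole preceding context, which is what lets it generalize beyond word pairs it has literally seen.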

Read More: RAG (Retrieval-Augmented Generation) vs LLMs?

Which are the Top Open-Source LLMs in 2023?

As of September 2023, Falcon 180B emerged as the top pre-trained Large Language Model on the Hugging Face Open LLM Leaderboard, achieving the highest performance ranking.

Let’s take you through the top 7 AI Models in 2023 —

1. Falcon LLM

Falcon LLM is a powerful pre-trained Open Large Language Model that has redefined the capabilities of AI language processing.

The model has 180 billion parameters and is trained on 3.5 trillion tokens. It can be used for both commercial and research use.

In June 2023, Falcon LLM topped HuggingFace’s Open LLM Leaderboard, earning it the title of ‘King of Open-Source LLMs.’

Falcon LLM Features:

- Performs well in reasoning, proficiency, coding, and knowledge tests.

- FlashAttention and multi-query attention for faster inference & better scalability.

- Allows commercial usage without royalty obligations or restrictions.

- The platform is free to use.

2. Llama 2

Meta has released Llama 2, a pre-trained large language model available for free. Llama 2 is the second version of Llama; it has double the context length of its predecessor and was trained on 40% more data.

Llama 2 also offers a Responsible Use Guide that helps the user understand its best practices and safety evaluation.

Llama 2 Features:

- Llama 2 is available free of charge for both research and commercial use.

- Includes model weights and starting code for both pre-trained and conversational fine-tuned versions.

- Accessible through various providers, including Amazon Web Services (AWS) and Hugging Face.

- Implements an Acceptable Use Policy to ensure ethical and responsible utilization.

3. Claude 2.0 and 2.1

Claude 2 is an advanced language model developed by Anthropic. The model boasts improved performance, longer responses, and accessibility through both an API and a new public-facing beta website, claude.ai.

Released after ChatGPT, this model offers a larger context window and is considered one of the most efficient chatbots.

Claude 2 Features:

- Exhibits enhanced performance over its predecessor, offering longer responses.

- Allows users to interact with Claude 2 through both API access and a new public-facing beta website, claude.ai.

- Demonstrates a longer memory compared to previous models.

- Utilizes safety techniques and extensive red-teaming to mitigate offensive or dangerous outputs.

Free Version: Available

Pricing: $20/month

The Claude 2.1 model introduced on 21 November 2023 brings forward notable improvements for enterprise applications. It features a leading-edge 200K token context window, greatly reduces instances of model hallucination, enhances system prompts, and introduces a new beta feature focused on tool use.

Claude 2.1 not only brings advancements in key capabilities for enterprises but also doubles the amount of information that can be communicated to the system with a new limit of 200,000 tokens.

This is equivalent to approximately 150,000 words or over 500 pages of content. Users are now empowered to upload extensive technical documentation, including complete codebases, comprehensive financial statements like S-1 forms, or lengthy literary works such as “The Iliad” or “The Odyssey.”
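The conversion from tokens to words and pages is simple arithmetic. The sketch below reproduces it under two assumptions that are not stated in the announcement: an average of 0.75 English words per token and about 300 words per page. Both are rough rules of thumb, so treat the result as an estimate.

```python
# Assumed averages (rules of thumb, not official figures).
WORDS_PER_TOKEN = 0.75
WORDS_PER_PAGE = 300


def context_capacity(tokens, words_per_token=WORDS_PER_TOKEN, words_per_page=WORDS_PER_PAGE):
    """Estimate how many words and pages fit in a context window of `tokens`."""
    words = tokens * words_per_token
    pages = words / words_per_page
    return round(words), round(pages)


words, pages = context_capacity(200_000)
print(f"{words:,} words, about {pages} pages")
```

With these assumptions, 200,000 tokens works out to 150,000 words and roughly 500 pages. Text with many rare words, code, or non-English content usually needs more tokens per word, so the real capacity can be lower.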

With the ability to process and interact with large volumes of content or data, Claude can efficiently summarize information, conduct question-and-answer sessions, forecast trends, and compare and contrast multiple documents, among other functionalities.

Claude 2.1 Features:

- 2x Decrease in Hallucination Rates

- API Tool Use

- Better Developer Experience

Pricing: TBA

4. MPT-7B

MPT-7B stands for MosaicML Pretrained Transformer, a model trained from scratch on 1 trillion tokens of text and code. Like GPT, MPT is a decoder-only transformer, but with a few improvements.

At a cost of $200,000, MPT-7B was trained on the MosaicML platform in 9.5 days without any human intervention.

Features:

- Generates dialogue for various conversational tasks.

- Well-equipped for seamless, engaging multi-turn interactions.

- Includes data preparation, training, finetuning, and deployment.

- Capable of handling extremely long inputs without losing context.

- Available at no cost.

5. Code Llama

Code Llama is a large language model (LLM) specifically designed for generating and discussing code based on text prompts. It represents a state-of-the-art development among publicly available LLMs for coding tasks.

According to Meta’s news blog, Code Llama aims to support open model evaluation, allowing the community to assess capabilities, identify issues, and fix vulnerabilities.

Code Llama Features:

- Lowers the entry barrier for coding learners.

- Serves as a productivity and educational tool for writing robust, well-documented software.

- Compatible with popular programming languages, including Python, C++, Java, PHP, TypeScript (JavaScript), C#, Bash, and more.

- Three sizes available with 7B, 13B, and 34B parameters, each trained with 500B tokens of code and code-related data.

- Can be deployed at zero cost.

6. Mistral-7B AI Model

Mistral 7B is a large language model developed by the Mistral AI team. It is a language model with 7.3 billion parameters, indicating its capacity to understand and generate complex language patterns.

Further, the Mistral AI team claims Mistral-7B is the best 7B model to date, outperforming Llama 2 13B on several benchmarks, which points to its effectiveness on language tasks.

Mistral-7B Features:

- Utilizes Grouped-query attention (GQA) for faster inference, improving the efficiency of processing queries.

- Implements Sliding Window Attention (SWA) to handle longer sequences at a reduced computational cost.

- Easy to fine-tune on various tasks, demonstrating adaptability to different applications.

- Free to use.
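The two attention techniques in the list above can be illustrated with a few lines of plain Python. This is a minimal sketch, not Mistral's implementation: the function names are made up, and the sizes (6 tokens, a window of 3, 8 query heads sharing 2 key/value heads) are toy values chosen for readability rather than the model's real configuration.

```python
def sliding_window_mask(seq_len, window):
    """mask[i][j] is True when token i may attend to token j.

    Causal: j <= i. Windowed: only the `window` most recent tokens, including i.
    """
    return [[(i - window < j <= i) for j in range(seq_len)] for i in range(seq_len)]


def kv_head_for_query_head(query_head, n_query_heads, n_kv_heads):
    """Grouped-query attention: consecutive query heads share one key/value head."""
    if n_query_heads % n_kv_heads != 0:
        raise ValueError("n_query_heads must be a multiple of n_kv_heads")
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size


# Sliding Window Attention: each row shows which earlier tokens one token can see.
mask = sliding_window_mask(seq_len=6, window=3)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))

full_causal_pairs = sum(i + 1 for i in range(6))        # 21 pairs without a window
windowed_pairs = sum(sum(row) for row in mask)          # 15 pairs with window=3
print(full_causal_pairs, windowed_pairs)

# Grouped-query attention: 8 query heads mapped onto 2 shared key/value heads.
print([kv_head_for_query_head(h, n_query_heads=8, n_kv_heads=2) for h in range(8)])
```

The window caps the number of attended positions per token, so cost grows linearly with sequence length instead of quadratically. Sharing key/value heads shrinks the cache that must be kept in memory during generation, which is where the faster inference comes from.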

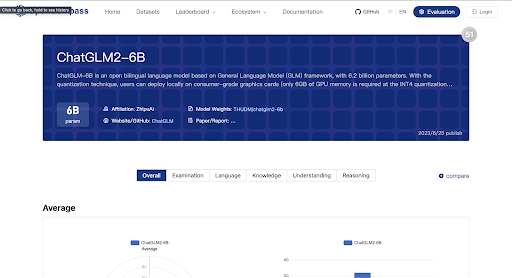

7. ChatGLM2-6B

ChatGLM2-6B is the second version of the open-source bilingual (Chinese-English) chat model ChatGLM-6B. It was developed by researchers at Tsinghua University, China, in response to the demand for lightweight alternatives to ChatGPT.

ChatGLM2-6B Features:

- Pre-trained on 1.4 trillion tokens of English and Chinese text for increased language understanding.

- Supports longer contexts, extended from 2K to 32K.

- Outperforms competitive models of similar size on various datasets (MMLU, CEval, BBH).

Free Version: Available

Pricing: On Request

What are AI Tools?

AI tools are software applications that utilize artificial intelligence algorithms to perform specific tasks and solve complex problems. These tools find applications across diverse industries, such as healthcare, finance, marketing, and education, where they automate tasks, analyze data, and aid in decision-making.

The benefits of AI tools include efficiency in streamlining processes, time savings, reducing biases, and automating repetitive tasks.

However, challenges like costly implementation, potential job displacement, and the lack of emotional and creative capabilities are notable. To mitigate these disadvantages, the key lies in choosing the right AI tools.

Which are the Best AI Tools in 2023?

Thoughtful selection and strategic implementation of AI tools can reduce costs by focusing on those offering the most value for specific needs. Carefully selecting and integrating AI tools can help your business utilize AI tool advantages while minimizing the challenges, leading to a more balanced and effective use of technology.

Here are the top 13 AI tools in 2023 —

1. OpenAI’s ChatGPT

ChatGPT is a natural language processing AI model that produces humanlike conversational answers. It can handle anything from a simple question like “How to bake a cake?” to writing advanced code. It can generate essays, social media posts, emails, code, etc.

You can use this bot to learn new concepts in the simplest way.

This AI chatbot was built and launched by OpenAI, an AI research company, in November 2022 and quickly became a sensation among netizens.

Features:

- It is presented as a chatbot, which makes it user-friendly.

- It has subject knowledge across a wide variety of topics.

- It is multilingual and supports 50+ languages.

- Its GPT-3.5 version is free to use.

Free Version: Available

Pricing:

- ChatGPT (GPT-3.5): Free

- ChatGPT Plus: $20/month

Rahul Shyokand, Co-founder of Wilyer:

We recently used ChatGPT to implement our Android App’s most requested feature by enterprise customers. We had to get that feature developed in order for us to be relevant SaaS for our customers. Using ChatGPT, we were able to command a complex mathematical and logical JAVA function that precisely fulfilled our requirements. In less than a week, we were able to deliver the feature to our Enterprise customers by modifying and adapting JAVA code. We immediately unlocked a hike of 25-30% in our B2B SaaS subscriptions and revenue as we launched that feature.

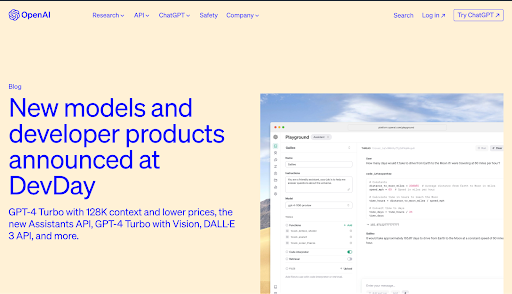

2. GPT-4 Turbo 128K Context

GPT-4 Turbo 128K Context was released as an improved and more advanced version of GPT-4. With a 128K context window, you can fit much more custom data into each request for your applications using techniques like RAG (Retrieval-Augmented Generation).
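As a rough illustration of how RAG uses the context window, the sketch below picks the most relevant text chunks for a question and packs them into a prompt until a token budget is reached. Everything here is a simplification for illustration: relevance is plain word overlap instead of embeddings, word counts stand in for a real tokenizer, and the function names and sample chunks are invented.

```python
def tokenize(text):
    """Lowercase words with basic punctuation stripped (crude stand-in for a tokenizer)."""
    return [word.strip(".,!?").lower() for word in text.split()]


def score(query, chunk):
    """Toy relevance: number of distinct query words that also appear in the chunk."""
    return len(set(tokenize(query)) & set(tokenize(chunk)))


def build_prompt(query, chunks, token_budget):
    """Add the most relevant chunks to the prompt until the budget is used up."""
    ranked = sorted(chunks, key=lambda chunk: score(query, chunk), reverse=True)
    selected, used = [], 0
    for chunk in ranked:
        cost = len(tokenize(chunk))
        if score(query, chunk) == 0 or used + cost > token_budget:
            continue  # skip irrelevant chunks and chunks that do not fit
        selected.append(chunk)
        used += cost
    context = "\n".join(selected)
    return f"Context:\n{context}\n\nQuestion: {query}"


chunks = [
    "A refund is issued within 14 days of purchase.",
    "Our office is closed on public holidays.",
    "Every refund request needs the original order number.",
]
query = "How do I request a refund with my order number?"

print(build_prompt(query, chunks, token_budget=20))
```

With a budget of 20, both refund-related chunks fit and the unrelated one is left out. A larger context window simply raises `token_budget`, so more of your own data can accompany each question.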

Features:

- Provides enhanced function calling based on users’ natural language inputs.

- Interoperates with software systems using JSON mode.

- Offers reproducible output using the seed parameter.

- Expands the knowledge cut-off by nineteen months to April 2023.
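The sketch below shows roughly where function calling, JSON mode, and the seed parameter appear in a chat request. It only builds and prints the request body and sends nothing over the network. The field names follow OpenAI's Chat Completions API as documented around the GPT-4 Turbo launch, and `gpt-4-1106-preview` was the preview model name at that time; the `get_order_status` function and its schema are hypothetical. Check the current API reference before relying on any of it.

```python
import json


def build_chat_request(question, seed=42):
    """Assemble a request body that uses a seed, JSON mode, and one callable tool."""
    return {
        "model": "gpt-4-1106-preview",
        "seed": seed,  # same seed and inputs aim for reproducible output
        "response_format": {"type": "json_object"},  # JSON mode
        "messages": [
            # JSON mode expects the word "JSON" to appear somewhere in the messages.
            {"role": "system", "content": "Reply with a JSON object."},
            {"role": "user", "content": question},
        ],
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "get_order_status",  # hypothetical function for illustration
                    "description": "Look up the shipping status of an order.",
                    "parameters": {
                        "type": "object",
                        "properties": {"order_id": {"type": "string"}},
                        "required": ["order_id"],
                    },
                },
            }
        ],
    }


payload = build_chat_request("Where is order 1234?")
print(json.dumps(payload, indent=2))
```

The model never runs `get_order_status` itself. It returns the function name and arguments it wants called, and your application executes the call and passes the result back in a follow-up message.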

Free Version: Not available

Pricing:

- Input: $0.01/1000 tokens

- Output: $0.03/1000 tokens

3. GPT-4 Vision

OpenAI launched the multimodal GPT-4 Vision in March 2023. This version is one of the most instrumental versions of ChatGPT since it can process various types of text and visual formats. GPT-4 has advanced image and voice capabilities, unlocking various innovations and use cases.

OpenAI has not disclosed the parameter count of GPT-4; the often-repeated claim that it has 100 trillion parameters, roughly 500x the GPT-3 version, is unconfirmed.

Features:

- Understands visual inputs such as photographs, documents, hand-written notes, and screenshots.

- Detects and analyzes objects and figures based on visuals uploaded as input.

- Offers data analysis of visual formats such as graphs, charts, etc.

- Is about 3x more cost-effective than the previous model.

- Returns up to 4,096 output tokens.

Free Version: Not available

Pricing: Pay-as-you-go

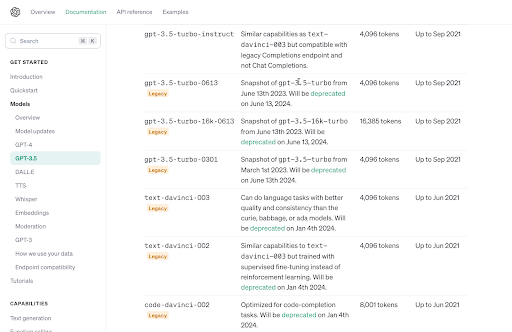

4. GPT 3.5 Turbo Instruct

GPT 3.5 Turbo Instruct was released to mitigate the recurring issues in the GPT-3 version. These issues included inaccurate information, outdated facts, etc.

So, the 3.5 version was specifically designed to produce logical, contextually correct, and direct responses to user queries.

Features:

- Understands and executes instructions efficiently.

- Produces more concise, on-point responses using fewer tokens.

- Offers faster and more accurate responses tailored to users’ needs.

- Emphasizes reasoning abilities over memorization.

Free Version: Not available

Pricing:

- Input: $0.0015/1000 tokens

- Output: $0.0020/1000 tokens
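Per-token pricing is easier to reason about with a quick calculation. The sketch below uses the two prices listed above; the request sizes are made-up examples, and the function name is our own.

```python
# Prices from the list above, in US dollars per 1,000 tokens.
INPUT_PRICE_PER_1K = 0.0015
OUTPUT_PRICE_PER_1K = 0.0020


def estimate_cost(input_tokens, output_tokens,
                  input_price=INPUT_PRICE_PER_1K, output_price=OUTPUT_PRICE_PER_1K):
    """Estimate the cost in dollars of one request from its token counts."""
    return input_tokens / 1000 * input_price + output_tokens / 1000 * output_price


# Example: a 10,000-token prompt that produces a 2,000-token answer.
cost = estimate_cost(10_000, 2_000)
print(f"${cost:.4f}")
```

For this example the input costs $0.015 and the output $0.004, about $0.019 in total. Passing different `input_price` and `output_price` values lets you compare other models the same way.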

5. Microsoft Copilot AI Tool

Microsoft 365 Copilot is a full-fledged AI tool that works throughout Microsoft Office. Using this AI, you can create documents, read, summarize, and respond to emails, generate presentations, and more. It is specifically designed to increase employee productivity and streamline workflows.

Features:

- Summarizes documents and long email threads.

- Generates and summarizes presentations.

- Analyzes Excel sheets and creates graphs to demonstrate data.

- Cleans up the Outlook inbox faster.

- Writes emails based on the provided information.

Free Version: 30-day free trial

Pricing: $30/month

6. SAP’s Generative AI Assistant: Joule

Joule is a generative AI assistant by SAP that is embedded in SAP applications, including HR, finance, supply chain, procurement, and customer experience.

Using this AI technology, you can obtain quick responses and useful insights whenever you need them, enabling faster decision-making.

Features:

- Assists in understanding and improving sales performance, identifying issues, and suggesting fixes.

- Provides continuous delivery of new scenarios for all SAP solutions.

- Helps in HR by generating unbiased job descriptions and relevant interview questions.

- Transforms SAP user experience by providing intelligent answers based on plain language queries.

Free Version: Available

Pricing: On Request

7. AI Studio by Meta

AI Studio by Meta is built with a vision to enhance how businesses interact with their customers. It allows businesses to create custom AI chatbots for interacting with customers using messaging services on various platforms, including Instagram, Facebook, and Messenger.

The primary use case scenario for AI Studio is the e-commerce and Customer Support sector.

8. EY’s AI Tool

EY AI integrates human capabilities with artificial intelligence (AI) to facilitate the confident and responsible adoption of AI by organizations. It leverages EY’s vast business experience, industry expertise, and advanced technology platforms to deliver transformative solutions.

Features:

- Utilizes experience across various domains to deliver AI solutions and insights tailored to specific business needs.

- Ensures seamless integration of leading-edge AI capabilities into comprehensive solutions through EY Fabric.

- Embeds AI capabilities at speed and scale through EY Fabric.

Free Version: Free for EY employees

Pricing: On Request

9. Amazon’s Generative AI Tool for Sellers

Amazon has recently launched an AI tool for Amazon sellers that helps them with several product-related functions. It simplifies writing product titles, bullet points, descriptions, listing details, etc.

This AI aims to create high-quality listings and engaging product information for sellers in minimal time and effort.

Features:

- Produces compelling product titles, bullet points, and descriptions for sellers.

- Finds product bottlenecks using automated monitoring.

- Generates automated chatbots to enhance customer satisfaction.

- Generates end-to-end prediction models using time series and data types.

Free Version: Free Trial Available

Pricing: On Request

10. Adobe’s Generative AI Tool for Designers

Adobe’s Generative AI for Designers aims to enhance the creative process of designers. Using this tool, you can seamlessly generate graphics within seconds with prompts, expand images, move elements within images, etc.

The AI aims to expand and support the natural creativity of designers by allowing them to move, add, replace, or remove anything anywhere in the image.

Features:

- Converts text prompts into images.

- Offers a brush to remove objects or paint in new ones.

- Provides unique text effects.

- Converts 3D elements into images.

- Moves the objects in the image.

Free Version: Available

Pricing: $4.99/month

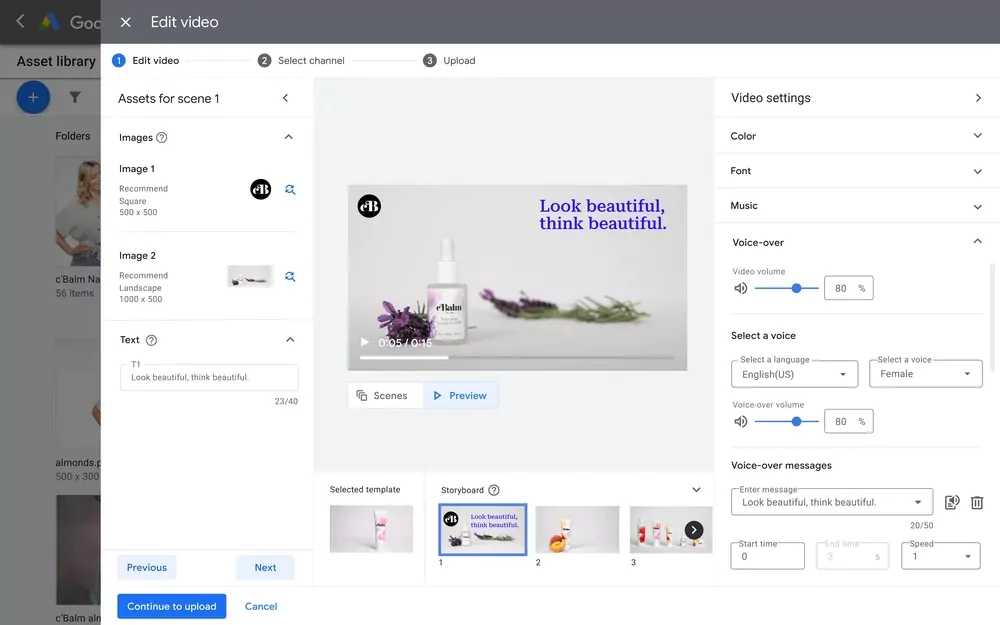

11. Google’s Creative Guidance AI Tool

Google launched a new AI product for ad optimization under the Video Analytics option called Creative Guidance AI. This tool will analyze your ad videos and offer you insightful feedback based on Google’s best practices and requirements.

Note that it doesn’t create a video for you; it provides feedback to help you optimize an existing video.

Features:

- Examines whether the brand logo is shown within the first 5 seconds of the video.

- Analyzes video length based on marketing objectives.

- Checks for high-quality voiceovers.

- Analyzes the aspect ratio of the video.

Free Version: Free

Pricing: On Request

12. Grok: The Next-Gen Generative AI Tool

Grok AI is a large language model developed by xAI, Elon Musk’s AI startup. Its prototype, Grok-0, was trained with 33 billion parameters, and xAI describes it as approaching the capabilities of Meta’s LLaMA 2 with 70 billion parameters.

In fact, according to The Indian Express’s latest report, Grok-1 outperforms Claude 2 and GPT-3.5 but still trails GPT-4.

Features:

- Extracts real-time information from the X platform (formerly Twitter).

- Incorporates humor and sarcasm in its responses to boost interactions.

- Capable of answering “spicy questions” that many AI systems reject.

Free Version: 30 days Free Trial

Pricing: $16/month

Looking for productivity? Here are 10 unique AI tools you should know about!

Large Language Models (LLMs) vs AI Tools: What’s the Difference?

While LLMs are a specialized subset of generative AI, not all generative AI tools are built on LLM frameworks. Generative AI encompasses a broader range of AI technologies capable of creating original content in various forms, be it text, images, music, or beyond. These tools rely on underlying AI models, including LLMs, to generate this content.

LLMs, on the other hand, are specifically designed for language-based tasks. They utilize deep learning and neural networks to excel in understanding, interpreting, and generating human-like text. Their focus is primarily on language processing, making them adept at tasks like text generation, translation, and question-answering.

The key difference lies in their scope and application: Generative AI is a broad category for any AI that creates original content across multiple domains, whereas LLMs are a focused type of generative AI specializing in language-related tasks. This distinction is crucial for understanding their respective roles and capabilities within the AI landscape.

David Watkins, Director of Product Management at EthOS:

At EthOS, our experience with integrating AI into our platform has been transformative. Leveraging IBM Watson sentiment and tone analysis, we can quickly collect customer sentiment and emotions on new website designs, in-home product testing, and many other qualitative research studies.

13. Try Cody, Simplify Business!

Cody is an accessible, no-code solution for creating chatbots using OpenAI’s advanced GPT models, specifically GPT-3.5 Turbo and GPT-4. This tool is designed for ease of use, requiring no technical skills, making it suitable for a wide range of users. Simply feed your data into Cody, and it efficiently manages the rest, ensuring a hassle-free experience.

A standout feature of Cody is its independence from specific model versions, allowing users to stay current with the latest LLM updates without retraining their bots. It also incorporates a customizable knowledge base, continuously evolving to enhance its capabilities.

Ideal for prototyping within companies, Cody showcases the potential of GPT models without the complexity of building an AI model from the ground up. While it’s capable of using your company’s data in various formats for personalized model training, it’s recommended to use non-sensitive, publicly available data to maintain privacy and integrity.

For businesses seeking a robust GPT ecosystem, Cody offers enterprise-grade solutions. Its AI API facilitates seamless integration into different applications and services, providing functionalities like bot management, message sending, and conversation tracking.

Moreover, Cody can be integrated with platforms such as Slack, Discord, and Zapier and allows for sharing your bot with others. It offers a range of customization options, including model selection, bot personality, confidence level, and data source reference, enabling you to create a chatbot that fits your specific needs.

Cody’s blend of user-friendliness and customization options makes it an excellent choice for businesses aiming to leverage GPT technology without delving into complex AI model development.

Move on to the easiest AI sign-up ever!