SearchGPT Announced

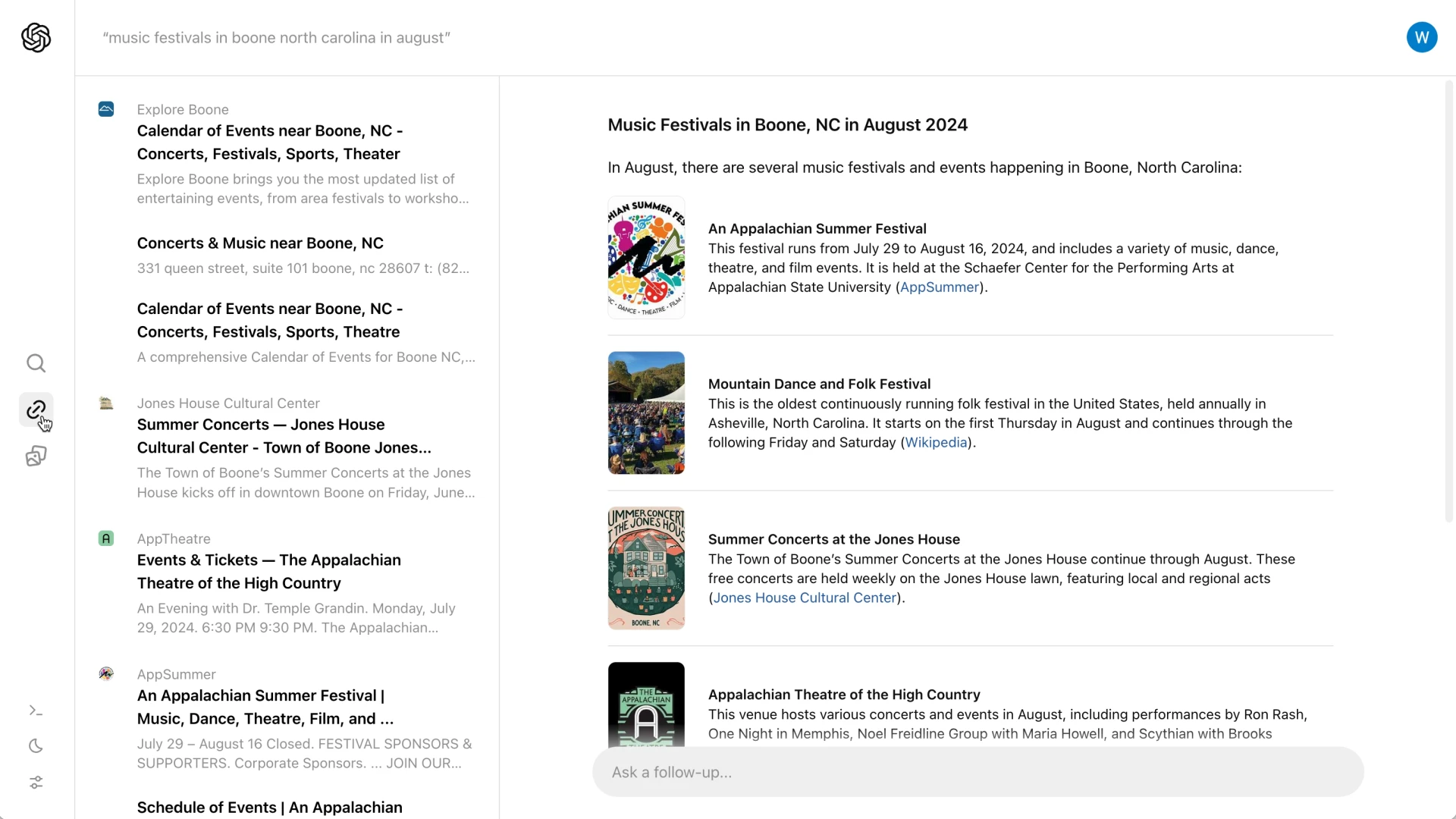

OpenAI has unveiled SearchGPT, a prototype AI-powered search engine designed to change how users find information online. By combining its conversational models with real-time information from the web, SearchGPT aims to deliver fast, timely answers to user queries. Instead of presenting a list of links as traditional search engines do, it responds with a concise summary and clear attributions to its sources, so users can see where the information came from. The goal is a faster, more interactive search experience.

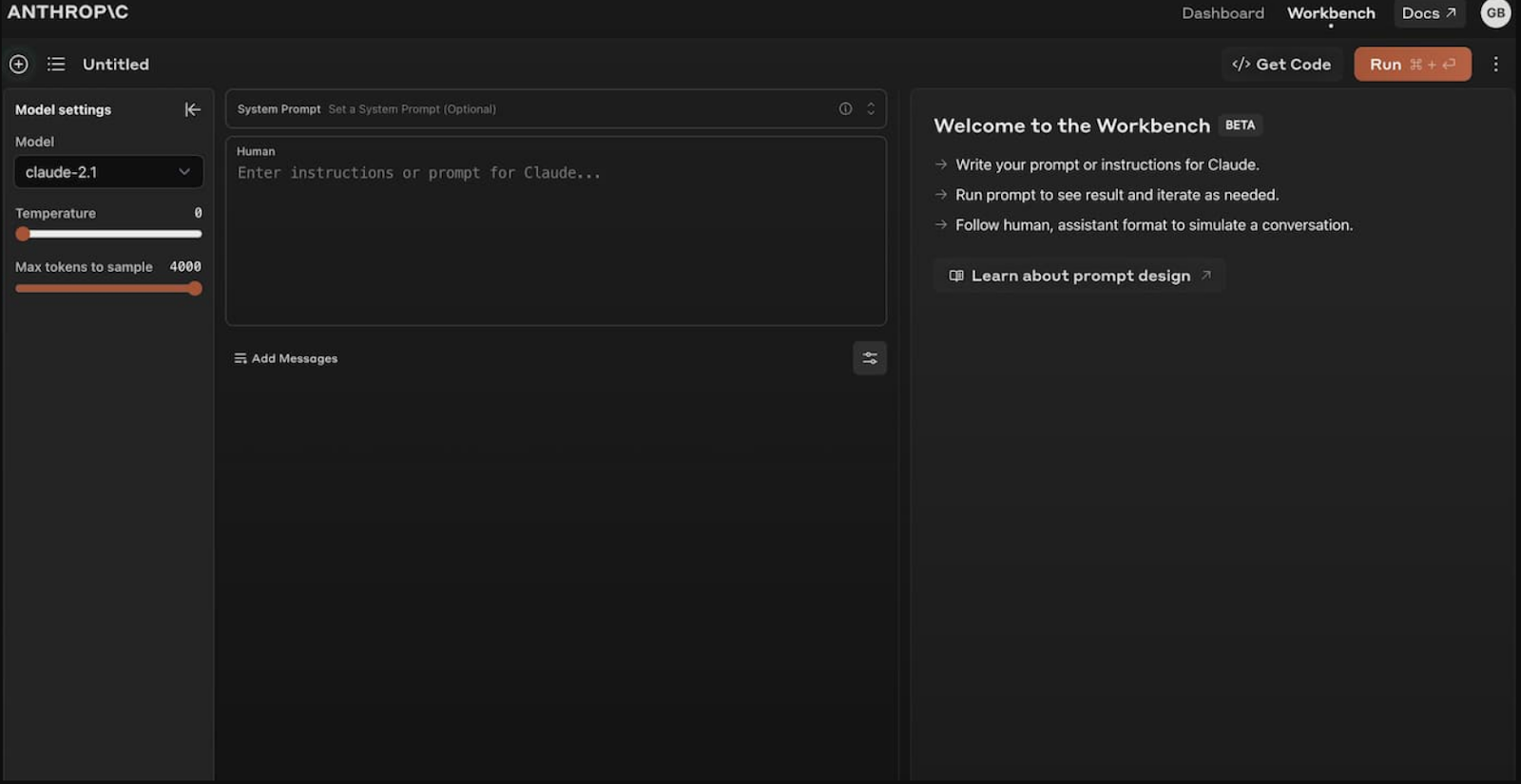

Key Features and Objectives

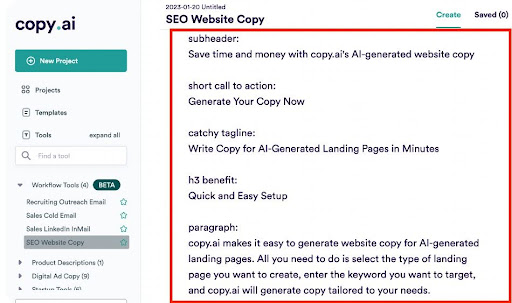

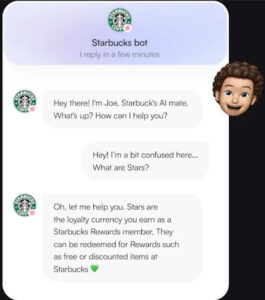

SearchGPT is designed to transform the traditional search experience into a more streamlined and conversational interaction. Unlike conventional search engines that display a list of links, SearchGPT provides concise summaries accompanied by attribution links. This approach allows users to quickly grasp the essence of their query while having the option to explore further details on the original websites.

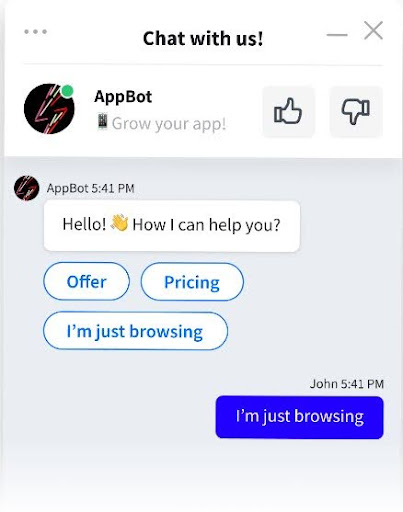

The platform also lets users ask follow-up questions, which makes the search process more conversational. A sidebar presents further relevant links, making it easier to find comprehensive information.

One of the standout features is the introduction of ‘visual answers,’ which showcase AI-generated videos to provide users with a more engaging and informative search experience.

Collaboration with Publishers

OpenAI has prioritized building strong partnerships with news organizations to improve the quality and reliability of the information SearchGPT provides. By collaborating with reputable publishers such as The Atlantic, News Corp, and The Associated Press, OpenAI aims to give users accurate and trustworthy search results.

These partnerships also give publishers more control over how their content appears in search results. Publishers can opt out of having their material used to train OpenAI's AI models and still appear in SearchGPT's results. This approach aims to protect the integrity and provenance of original content, making it a win-win for both users and content creators.

Differentiation from Competitors

SearchGPT aims to set itself apart from competitors like Google by addressing problems that AI-integrated search engines have run into. Google's AI-generated answers have been criticized for inaccuracies and for reducing traffic to original sources by answering queries directly within the search results. SearchGPT, in contrast, emphasizes clear attribution and encourages users to visit publishers' sites for detailed information. This strategy is meant both to give users accurate, credible answers and to sustain a healthy ecosystem for publishers through responsible content sharing.

User Feedback and Future Integration

The current release of SearchGPT is a prototype, available to a select group of users and publishers. This limited rollout is meant to gather feedback that will help refine the service. OpenAI plans to eventually integrate the most successful features of SearchGPT into ChatGPT, giving the chatbot more direct access to real-time information from the web.

Users who are interested in testing the prototype have the opportunity to join a waitlist, while publishers are encouraged to provide feedback on their experiences. This feedback will be crucial in shaping the future iterations of SearchGPT, ensuring it meets user needs and maintains high standards of accuracy and reliability.

Challenges and Considerations

As SearchGPT enters its prototype phase, it faces several challenges. A crucial one is ensuring that its information is accurate and properly attributed to sources. Learning from the pitfalls Google has faced, SearchGPT must avoid errors that lead to misinformation or misattribution, since these could undermine user trust and damage its relationships with publishers.

Another significant challenge is monetization. SearchGPT is currently free and carries no ads during its initial launch phase. While this ad-free approach suits a prototype, it leaves open how OpenAI will build a sustainable business model that can cover the substantial costs of AI training and inference. Addressing these financial demands will be essential to the service's long-term viability.

In summary, for SearchGPT to succeed, OpenAI must navigate these technical and economic challenges, ensuring the platform’s accuracy and developing a feasible monetization strategy.

Conclusion

SearchGPT marks a significant advancement in the realm of AI-powered search technology. By prioritizing quality, reliability, and collaboration with publishers, OpenAI aims to deliver a more efficient and trustworthy search experience. The integration of conversational models with real-time web information sets SearchGPT apart from traditional search engines and rivals like Google.

Feedback from users and publishers will be crucial in shaping how this tool evolves. As the prototype phase progresses, OpenAI plans to refine SearchGPT so that it meets its users' needs and expectations. This collaboration and iterative improvement are intended to produce a balanced ecosystem that benefits both content creators and users.

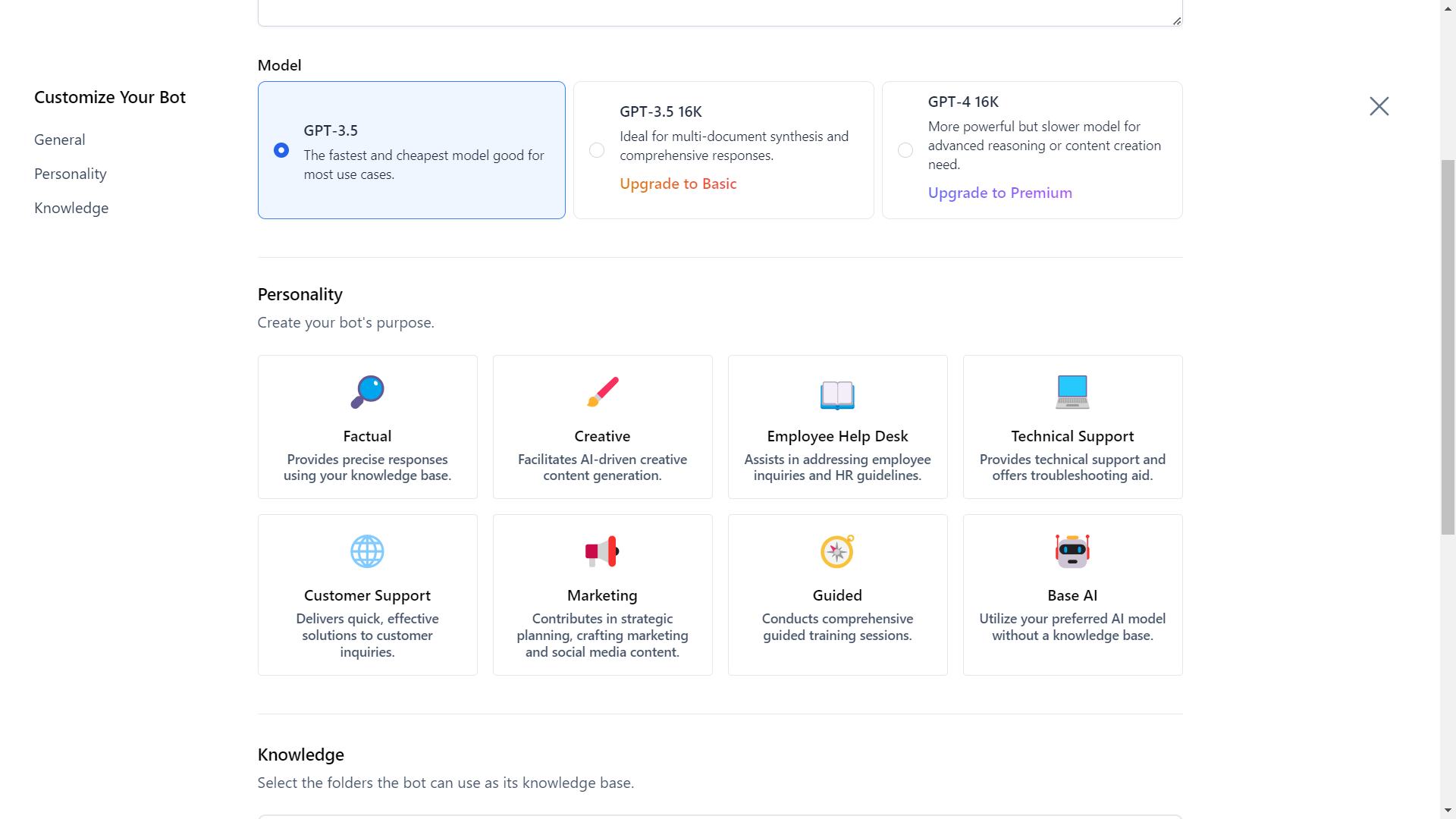

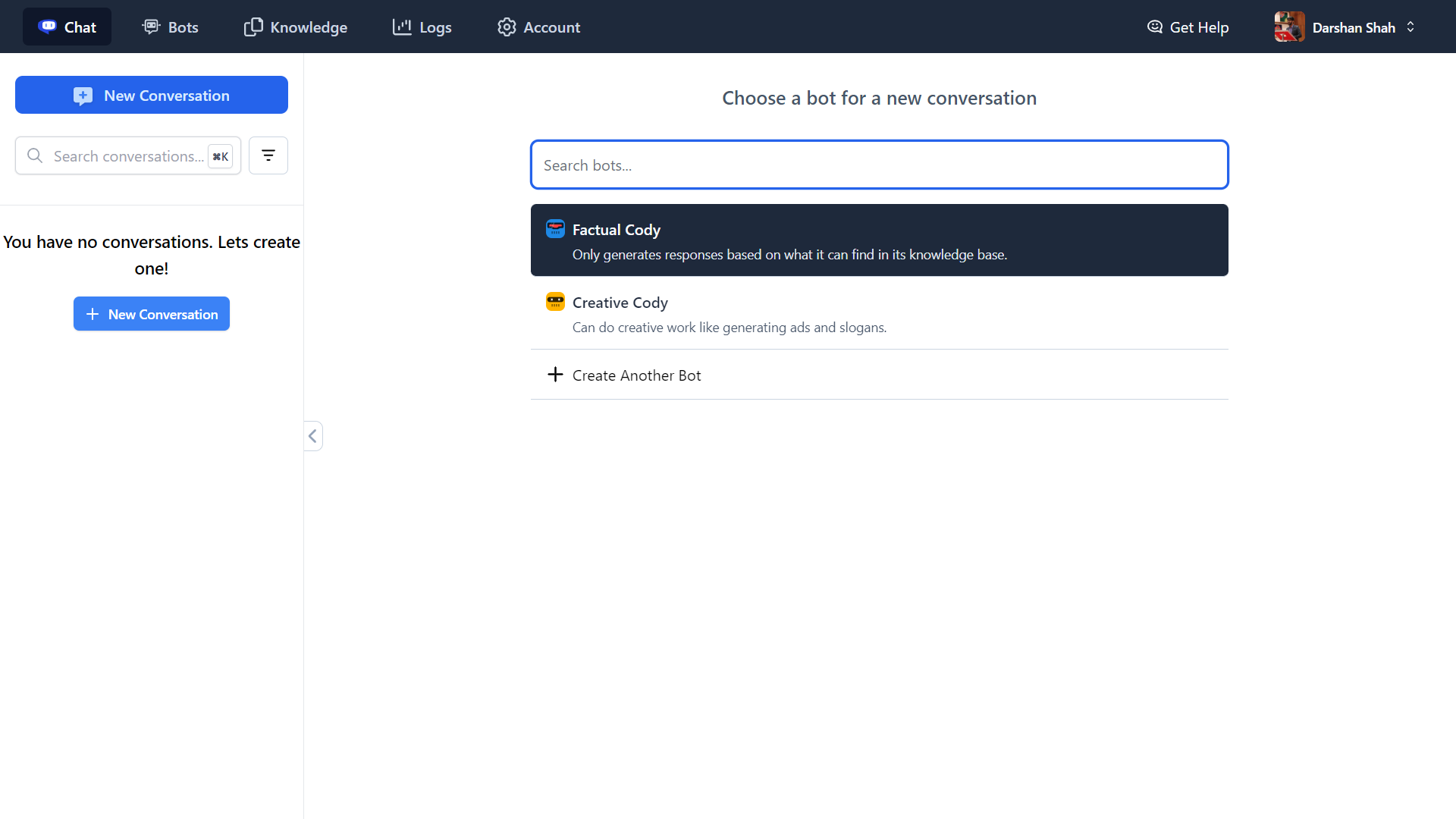

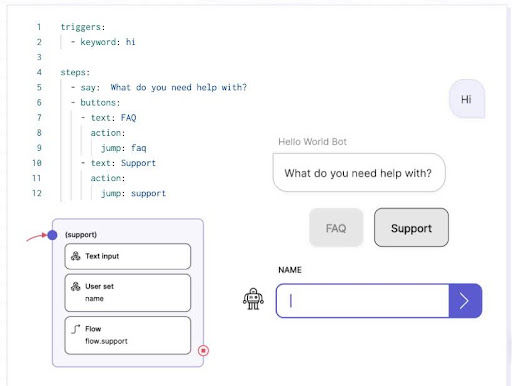

Unveil the future of business intelligence with Cody AI, your intelligent AI assistant beyond just chat. Seamlessly integrate your business, team, processes, and client knowledge into Cody to supercharge your productivity. Whether you need answers, creative solutions, troubleshooting, or brainstorming, Cody is here to support. Explore Cody AI now and transform your business operations!