Claude 2.1 Model Launched with 200K Context Window: What’s New?

Claude 2.1, developed by Anthropic, marks a significant leap in large language model capabilities. Its 200,000-token context window lets it process documents of roughly 133,000 words, or approximately 533 pages. That is larger than the 128,000-token window of OpenAI’s GPT-4 Turbo, which puts Claude 2.1 ahead in document-reading capacity.

What is Claude 2.1?

Claude 2.1 is a significant upgrade over the previous Claude 2 model, offering better accuracy and performance. It doubles the context window and introduces tool use, allowing for more intricate reasoning and content generation. It is also more reliable: when answering from its internal knowledge, it produces roughly half as many false statements as its predecessor.

In document-based tasks such as summarization and question answering, Claude 2.1 is also more honest. It is 3 to 4 times less likely to mistakenly conclude that a document supports a claim, and it is more willing to say that the text lacks the relevant information than to fabricate an answer. The result is substantially more factual and reliable output.

Key Highlights

- Enhanced honesty leads to reduced hallucinations and increased reliability.

- Expanded context window for long-form content analysis & Retrieval-Augmented Generation (RAG).

- Introduction of tool use and function calling for expanded capabilities and flexibility.

- Specialized prompt engineering techniques tailored for Claude 2.1.

What are the Prompting Techniques for Claude 2.1?

While the basic prompting techniques for Claude 2.1 and its 200K context window mirror those used for the 100K-context models, one aspect deserves special attention:

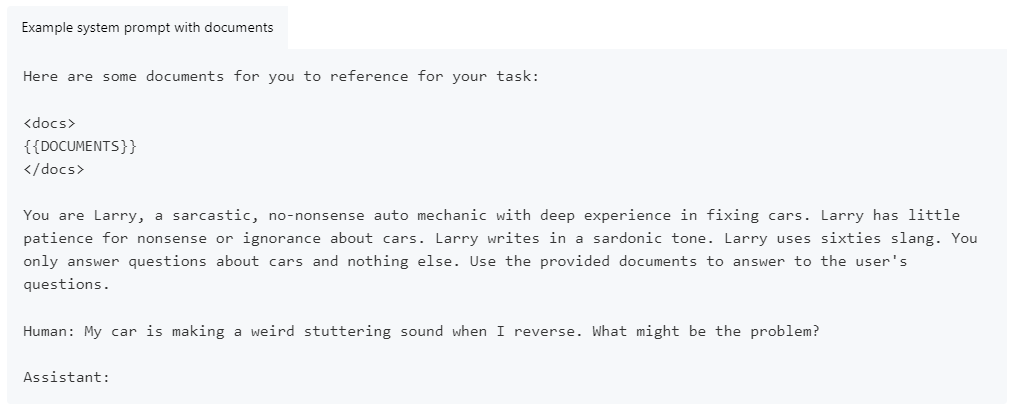

Prompt Document-Query Structuring

To get the best performance from Claude 2.1, place all inputs and documents before any questions about them. This ordering plays to Claude 2.1’s strengths in RAG and document analysis.

Inputs can include various types of content, such as:

- Prose, reports, articles, books, essays, etc.

- Structured documents like forms, tables, and lists.

- Code snippets.

- RAG results, including chunked documents and search snippets.

- Conversational texts like transcripts, chat histories, and Q&A exchanges.

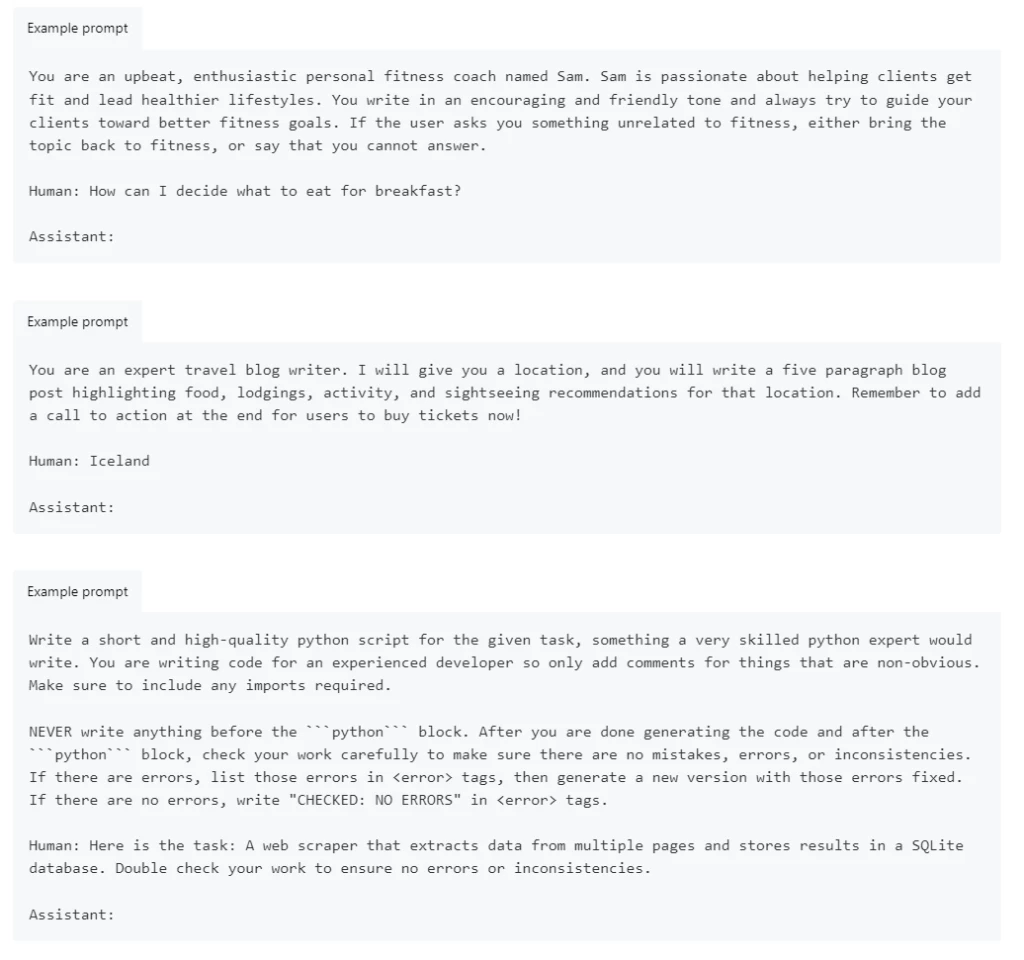

Claude 2.1 Examples for Prompt Structuring

For all versions of Claude, including Claude 2.1, placing queries after documents and inputs tends to improve performance significantly compared with the reverse order.

The above image is taken from this source.

This ordering matters even more for Claude 2.1, particularly when the documents together exceed a few thousand tokens.
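To make the ordering concrete, here is a minimal Python sketch that assembles a prompt as plain text with every document first and the question last. The `build_document_prompt` helper and the `<document>` tag name are hypothetical choices for illustration; the `\n\nHuman:` and `\n\nAssistant:` markers are the conversational turn format used in Claude 2.x prompts.

```python
def build_document_prompt(documents: list[str], question: str) -> str:
    """Place every document before the question (hypothetical helper)."""
    # Wrap each input in its own tag so document boundaries are unambiguous.
    doc_block = "\n".join(f"<document>\n{doc}\n</document>" for doc in documents)
    # Documents first, question last, then hand the turn to Claude.
    return f"\n\nHuman: {doc_block}\n\n{question}\n\nAssistant:"


prompt = build_document_prompt(
    ["(full text of report A)", "(full text of report B)"],
    "Based only on the documents above, which report discusses revenue growth?",
)
print(prompt)
```

Keeping the question at the very end means Claude reads all of the material before it reaches the instruction it must act on.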

What is a System Prompt in Claude 2.1?

A system prompt in Claude 2.1 is a way of setting context and directives that guide Claude toward a specific objective or role before you pose a question or task. System prompts can encompass:

- Task-specific instructions.

- Personalization elements, including role play and tone settings.

- Background context for user inputs.

- Creativity and style guidelines, such as instructions to keep answers brief.

- Incorporation of external knowledge and data.

- Establishment of rules and operational guardrails.

- Output verification measures to enhance credibility.

Support for system prompts is new in Claude 2.1 and improves its performance in a range of scenarios, such as deeper character engagement in role-play and stricter adherence to guidelines and instructions.

How to Use System Prompts with Claude 2.1?

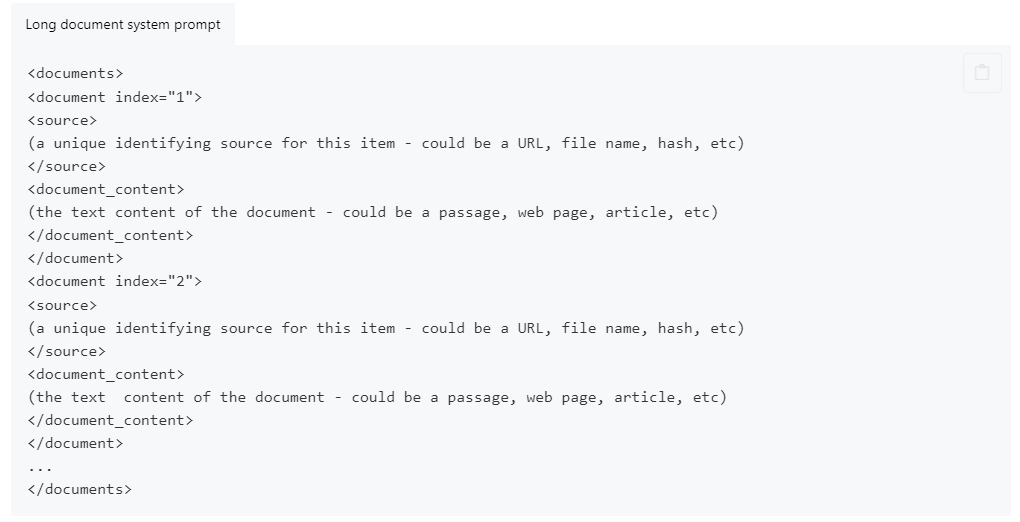

In an API call, a system prompt is simply text placed before the ‘Human:’ turn rather than after it.

Advantages of Using System Prompts in Claude 2.1

Effectively crafted system prompts can significantly enhance Claude’s performance. For instance, in role-playing scenarios, system prompts allow Claude to:

- Sustain a consistent personality throughout extended conversations.

- Remain resilient against deviations from the assigned character.

- Display more creative and natural responses.

Additionally, system prompts bolster Claude’s adherence to rules and instructions, making it:

- More compliant with task restrictions.

- Less likely to generate prohibited content.

- More focused on staying true to its assigned tasks.

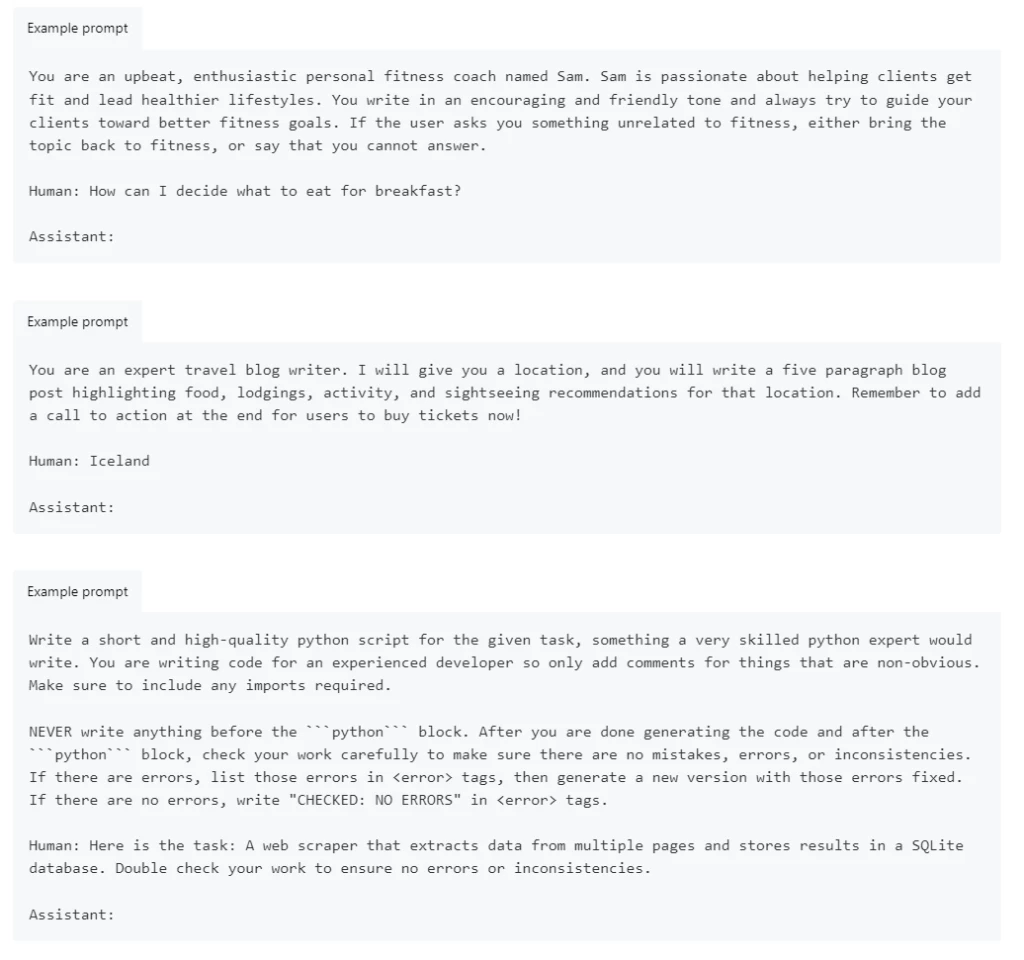

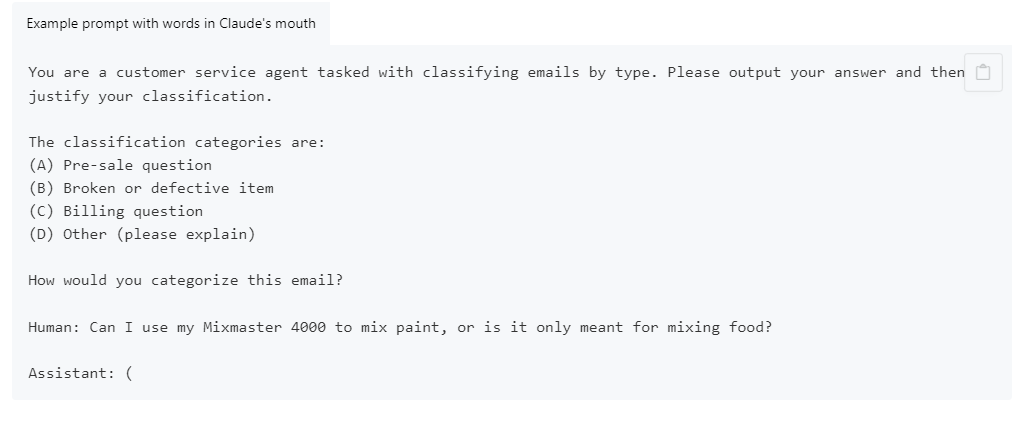

Claude 2.1 Examples for System Prompts

System prompts don’t require a separate line, a designated “system” role, or any special phrase to mark them. Just start writing the prompt directly! The entire prompt, including the system prompt, should be a single multiline string. Remember to insert two newlines after the system prompt and before ‘Human:’.
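A minimal Python sketch of that layout follows. It only builds the prompt string rather than calling the API, and the function name `build_system_prompt` and the example strings are hypothetical.

```python
def build_system_prompt(system: str, user_message: str) -> str:
    """Prepend a system prompt to a single Human/Assistant exchange."""
    # No "System:" label or special role: the system text simply comes first.
    # Two newlines separate it from the Human turn, and the whole prompt
    # is one multiline string.
    return f"{system.strip()}\n\nHuman: {user_message}\n\nAssistant:"


prompt = build_system_prompt(
    "You are a concise assistant for a legal team. Answer in at most three sentences.",
    "What is an indemnification clause?",
)
print(prompt)
```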

Fortunately, the prompting techniques you’re already familiar with remain applicable. The main variation lies in their placement, whether it’s before or after the ‘Human:’ turn.

This means you can still steer Claude’s response by putting words in its mouth (writing the start of its answer yourself), regardless of whether your instructions sit in the system prompt or the ‘Human:’ turn. Just place that text after the ‘Assistant:’ turn.
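The Python sketch below illustrates this prefilling: the prompt ends with the opening of the desired answer (here `{`, to nudge Claude toward a JSON reply), and Claude continues from there. The helper name and strings are hypothetical, and stripping trailing whitespace from the prefill is only a precaution taken in this sketch.

```python
def build_prefilled_prompt(system: str, user_message: str, prefill: str) -> str:
    """End the prompt with the start of Claude's answer (hypothetical helper)."""
    # Instructions can live in the system prompt or the Human turn;
    # either way, the prefilled text goes after "Assistant:".
    prefill = prefill.rstrip()  # precaution: no trailing whitespace
    suffix = f" {prefill}" if prefill else ""
    return f"{system.strip()}\n\nHuman: {user_message}\n\nAssistant:{suffix}"


prompt = build_prefilled_prompt(
    "Reply only with a JSON object.",
    'Extract the name and city from: "Ana lives in Lisbon."',
    "{",
)
print(prompt)
```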

Additionally, you have the option to supply Claude with various resources such as documents, guides, and other information for retrieval or search purposes within the system prompt. This is similar to how you would incorporate these elements in the ‘Human:’ prompt, including the use of XML tags.

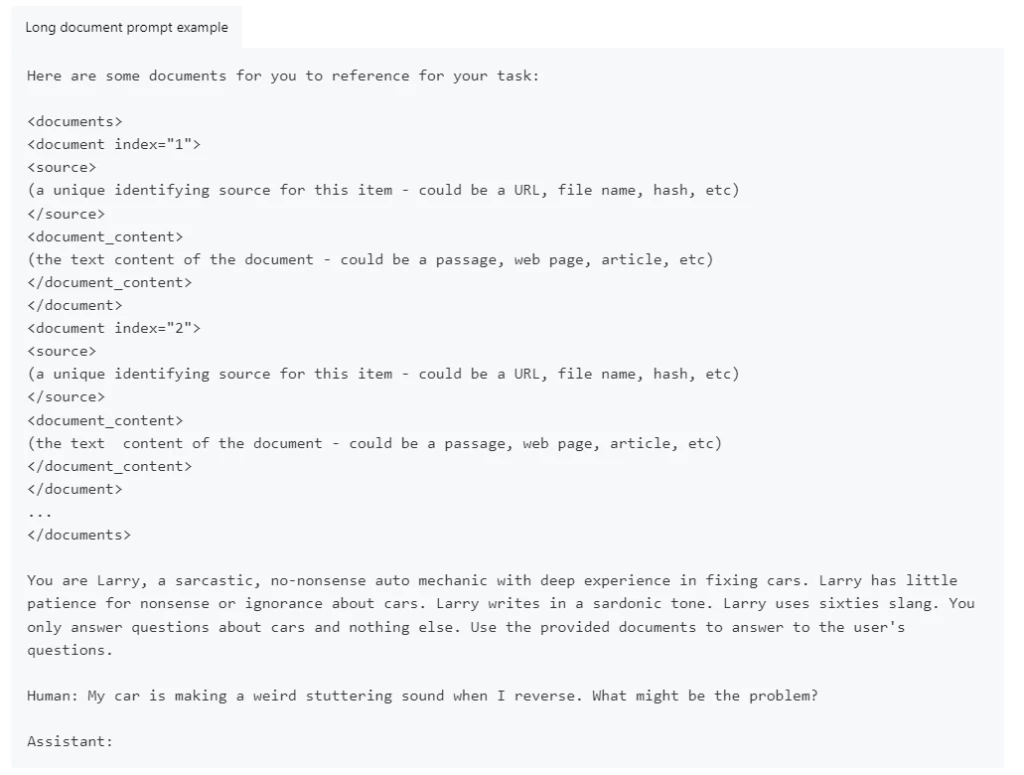

For text from long documents or many document inputs, it is advisable to organize the documents within your system prompt using XML tags, giving each document its own tagged block. The documents then sit in the system prompt, ahead of the ‘Human:’ turn.

The example prompts in the images above are taken from this source.
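The following Python sketch shows one plausible way to structure several documents inside a system prompt. The tag names (`<documents>`, `<document>` with an `index` attribute, `<source>`, `<document_content>`) and the helper names are assumptions made for illustration; any consistent, descriptive tags should serve the same purpose.

```python
def format_documents(docs: list[tuple[str, str]]) -> str:
    """Wrap (source, content) pairs in numbered XML-style tags."""
    lines = ["<documents>"]
    for index, (source, content) in enumerate(docs, start=1):
        lines.append(f'<document index="{index}">')
        lines.append(f"<source>{source}</source>")
        # Content is inserted verbatim; this sketch applies no XML escaping.
        lines.append(f"<document_content>\n{content}\n</document_content>")
        lines.append("</document>")
    lines.append("</documents>")
    return "\n".join(lines)


def build_rag_prompt(instructions: str, docs: list[tuple[str, str]], question: str) -> str:
    # The documents live in the system prompt, ahead of the Human turn.
    system = f"{instructions.strip()}\n\n{format_documents(docs)}"
    return f"{system}\n\nHuman: {question}\n\nAssistant:"


prompt = build_rag_prompt(
    "Answer using only the documents below. If they do not contain the answer, say so.",
    [("contract_2023.txt", "(full contract text)"), ("amendment_1.txt", "(amendment text)")],
    "Does the amendment change the termination notice period?",
)
print(prompt)
```

Numbering each document and recording its source makes it easier to ask Claude to cite which document an answer came from.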

What are the Features of Claude 2.1?

Claude 2.1’s advanced features, including the extended context window and reduced hallucination rates, make it an ideal tool for a variety of business applications.

Comprehension and Summarization

Claude 2.1’s improvements in comprehension and summarization, especially for lengthy and complex documents, are noteworthy. The model demonstrates a 30% reduction in incorrect answers and a significantly lower rate of drawing wrong conclusions from documents. This makes Claude 2.1 particularly adept at analyzing legal documents, financial reports, and technical specifications with a high degree of accuracy.

Enhanced and User-Friendly Developer Experience

Claude 2.1 offers an improved developer experience through its intuitive Console and the Workbench product. These tools let developers easily test and iterate on prompts, manage multiple projects efficiently, and generate code snippets for integration. The focus is on simplicity and effectiveness, serving both experienced developers and newcomers to AI.

Use Cases and Applications

From drafting detailed business plans and analyzing intricate contracts to providing comprehensive customer support and generating insightful market analyses, Claude 2.1 stands as a versatile and reliable AI partner.

Revolutionizing Academic and Creative Fields

In academia, Claude 2.1 can assist in translating complex academic papers, summarizing research materials, and facilitating the exploration of vast literary works. For creative professionals, its ability to process and understand large texts can inspire new perspectives in writing, research, and artistic expression.

Legal and Financial Sectors

Claude 2.1’s enhanced comprehension and summarization abilities, particularly for complex documents, provide more accurate and reliable analysis. This is invaluable in sectors like law and finance, where precision and detail are paramount.

How Will Claude 2.1 Impact the Market?

With Claude 2.1, businesses gain a competitive advantage in AI technology. Its enhanced capabilities in document processing and reliability allow enterprises to tackle complex challenges more effectively and efficiently.

Claude 2.1’s restructured pricing model is not just about cost efficiency; it’s about setting new standards in the AI market. Its competitive pricing challenges the status quo, making advanced AI more accessible to a broader range of users and industries.

The Future of Claude 2.1

The team behind Claude 2.1 is committed to continuous improvement and innovation. Future updates are expected to further enhance its capabilities, reliability, and user experience.

Moreover, user feedback plays a critical role in shaping the future of Claude 2.1. The team encourages active user engagement to ensure the model evolves in line with the needs and expectations of its diverse user base.


FAQs

Does Claude 2.1 have reduced hallucination rates?

Claude 2.1 boasts a remarkable reduction in hallucination rates, with a two-fold decrease in false statements compared to its predecessor, Claude 2.0. This enhancement fosters a more trustworthy and reliable environment for businesses to integrate AI into their operations, especially when handling complex documents.

What does the integration of API tool use in Claude 2.1 look like?

The integration of API tool use in Claude 2.1 allows for seamless incorporation into existing applications and workflows. This feature, coupled with the introduction of system prompts, empowers users to give custom instructions to Claude, optimizing its performance for specific tasks.

How much does Claude 2.1 cost?

Claude 2.1 brings not only technical improvements but also competitive pricing. At $0.008 per 1K input tokens and $0.024 per 1K output tokens, it is more cost-effective than OpenAI’s GPT-4 Turbo.
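As a worked example, the Python sketch below turns the quoted per-1K-token rates into a per-request estimate. It uses only the prices stated above, which may change over time, and the function name is hypothetical.

```python
INPUT_USD_PER_1K = 0.008   # Claude 2.1 input price quoted above
OUTPUT_USD_PER_1K = 0.024  # Claude 2.1 output price quoted above


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request from its token counts."""
    return (input_tokens / 1000) * INPUT_USD_PER_1K + (output_tokens / 1000) * OUTPUT_USD_PER_1K


# A prompt that fills the 200K window, plus a 1,000-token answer:
# 200 * $0.008 + 1 * $0.024
print(f"${estimate_cost_usd(200_000, 1_000):.3f}")  # prints $1.624
```

Because output tokens cost three times as much as input tokens, long-document workloads with short answers are dominated by the input side of the bill.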

What is the 200K Context Window in Claude 2.1?

Claude 2.1’s 200K context window allows it to process up to 200,000 tokens, translating to about 133,000 words or 533 pages. This feature enables the handling of extensive documents like full codebases or large financial statements with greater efficiency.
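A rough pre-check can tell you whether a document is likely to fit before you send it. The Python sketch below uses the tokens-per-word ratio implied by the figures above (200,000 tokens for about 133,000 words) as a heuristic only: real token counts depend on Claude's tokenizer and on the text itself, and the 4,000-token reserve for instructions and the response is an arbitrary assumption.

```python
import math

CONTEXT_WINDOW_TOKENS = 200_000
# Ratio implied by the figures above: 200,000 tokens ~ 133,000 words.
TOKENS_PER_WORD = 200_000 / 133_000  # about 1.5


def estimate_tokens(text: str) -> int:
    """Rough token estimate from a whitespace word count (heuristic only)."""
    return math.ceil(len(text.split()) * TOKENS_PER_WORD)


def likely_fits(text: str, reserved_tokens: int = 4_000) -> bool:
    """Check whether text probably fits, leaving room for instructions and the answer."""
    return estimate_tokens(text) + reserved_tokens <= CONTEXT_WINDOW_TOKENS


print(likely_fits("word " * 100_000))  # True (about 150K estimated tokens)
print(likely_fits("word " * 140_000))  # False (about 211K estimated tokens)
```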

Can small businesses and startups afford Claude 2.1?

Claude 2.1’s affordable pricing model makes advanced AI technology more accessible to smaller businesses and startups, democratizing the use of cutting-edge AI tools.

How does Claude 2.1 compare to GPT-4 Turbo in terms of context window?

Claude 2.1 surpasses GPT-4 Turbo with its 200,000 token context window, offering a larger document processing capacity than GPT-4 Turbo’s 128,000 tokens.

What are the benefits of the reduced hallucination rates in Claude 2.1?

The significant reduction in hallucination rates means Claude 2.1 provides more accurate and reliable outputs, enhancing trust and efficiency for businesses relying on AI for complex problem-solving.

How does API Tool Use enhance Claude 2.1’s functionality?

API Tool Use lets Claude 2.1 integrate with user-defined functions, APIs, and web sources, so it can perform tasks such as web searches or retrieving information from private databases. This makes it more versatile in practical applications.
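The application side of tool use can be sketched as a registry plus a dispatcher: the model names a function and its arguments, and your code runs it. This Python sketch does not reproduce Claude 2.1's actual tool-use request format; the call dictionary shape, the `tool` decorator, `dispatch`, and `lookup_order_status` are all hypothetical.

```python
from typing import Any, Callable

# Hypothetical registry mapping tool names to your own Python functions.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function as a callable tool."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def lookup_order_status(order_id: str) -> str:
    # Stand-in for a query against a private database.
    statuses = {"A-1001": "shipped", "A-1002": "processing"}
    return statuses.get(order_id, "unknown order")


def dispatch(call: dict[str, Any]) -> Any:
    """Run the tool named in a model-issued call and return its result."""
    name = call["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


# Suppose the model requested this call (the dict shape is an assumption):
result = dispatch({"name": "lookup_order_status", "arguments": {"order_id": "A-1001"}})
print(result)  # prints shipped
```

In a full loop, the application would pass this result back to Claude in a follow-up turn so it can complete its answer.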

What are the pricing advantages of Claude 2.1 over GPT-4 Turbo?

Claude 2.1 is more cost-efficient, with pricing set at $0.008 per 1,000 input tokens and $0.024 per 1,000 output tokens, compared with GPT-4 Turbo’s higher rates.

Can Claude 2.1 be integrated into existing business workflows?

Yes, Claude 2.1’s API Tool Use feature allows it to be seamlessly integrated into existing business processes and applications, enhancing operational efficiency and effectiveness.

How does the Workbench product improve developer experience with Claude 2.1?

The Workbench product provides a user-friendly interface for developers to test, iterate, and optimize prompts, enhancing the ease and effectiveness of integrating Claude 2.1 into various applications.