As we step into 2025, artificial intelligence (AI) is reshaping industries, society, and how we interact with technology in exciting and sometimes surprising ways. From AI agents that can work independently to systems that seamlessly integrate text, video, and audio, the field is evolving faster than ever. For tech entrepreneurs and developers, staying ahead of these changes isn’t just smart—it’s essential.

Let’s look at the trends, breakthroughs, and challenges that will shape AI in 2025 and beyond.

A Quick Look Back: How AI Changed Our World

AI’s journey from the 1950s to today has been remarkable. Starting from simple, rule-based systems, it has grown into sophisticated models capable of reasoning, creativity, and autonomy. Over the last decade, AI has moved from experimental to indispensable, becoming a core driver of innovation across industries.

Healthcare

AI-powered tools are now integral to diagnostics, personalized medicine, and even surgical robotics. AI-enhanced imaging has pushed the boundaries of early disease detection, in some tasks rivaling or even surpassing human specialists in accuracy and speed.

Education

Adaptive AI platforms have fundamentally changed how students learn. They use granular data analysis to tailor content, pacing, and engagement at an individual level.

Transportation

Autonomous systems have evolved from experimental prototypes to viable solutions in logistics and public transport, backed by advances in sensor fusion, computer vision, and real-time decision-making.

While these advancements have brought undeniable value, they’ve also exposed complex questions around ethics, workforce implications, and the equitable distribution of AI’s benefits. Addressing these challenges remains a priority as AI continues to scale.

Game-Changing AI Technologies to Watch in 2025

In 2025, the focus isn’t just on making AI smarter but on making it more capable, scalable, and ethical. Here’s what’s shaping the landscape:

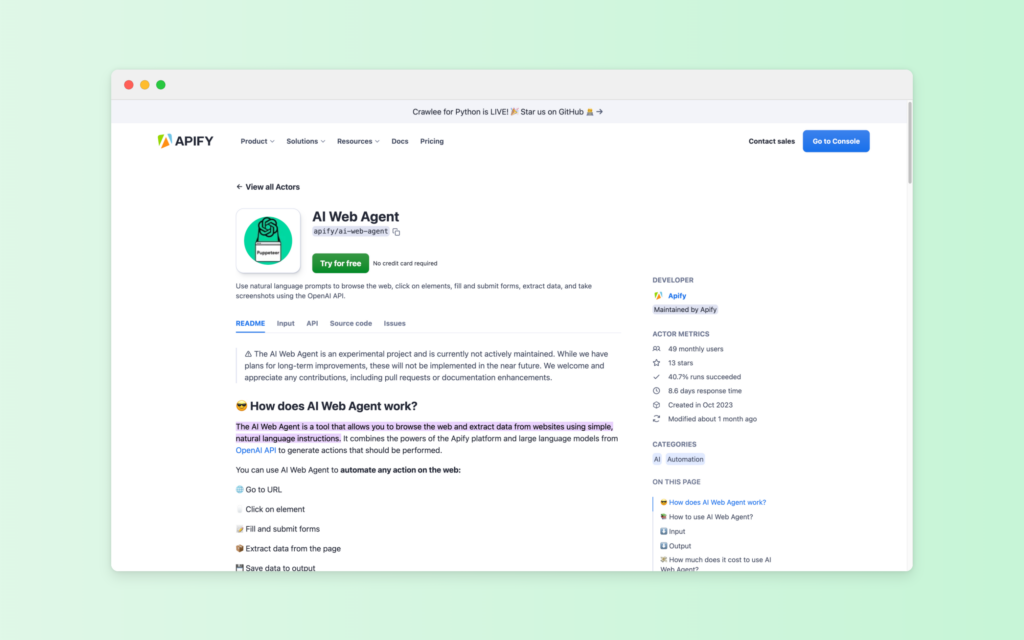

1. Agentic AI: Beyond Task Automation

Agentic AI isn’t just another buzzword. These systems can make decisions and adapt to situations with little to no human input. Imagine an AI that manages your schedule, handles projects, or even generates creative ideas: it’s like adding a super-efficient team member who never sleeps.

- For businesses: Think virtual project managers handling complex workflows.

- For creatives: Tools that help brainstorm ideas or edit content alongside you.

As Moody’s highlights, agentic AI is poised to become a driving force behind productivity and innovation across industries.
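To make the idea concrete, here is a toy sketch of the observe, decide, act loop behind an agentic system, using the scheduling example above. Everything in it (`Task`, `SchedulingAgent`, the largest-task-first policy, and the first-fit booking "tool") is a hypothetical stand-in; real agents typically hand the decide step to a language model and call actual calendar or project-management APIs.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    hours: float
    done: bool = False


@dataclass
class SchedulingAgent:
    """Toy agent loop: observe open tasks, decide on one, act, repeat."""
    hours_per_day: float
    calendar: dict = field(default_factory=dict)  # day index -> list of task names
    load: dict = field(default_factory=dict)      # day index -> hours already booked

    def decide(self, tasks):
        # Stand-in policy: tackle the biggest open task first.
        # A real agentic system might ask an LLM to choose here instead.
        open_tasks = [t for t in tasks if not t.done]
        return max(open_tasks, key=lambda t: t.hours, default=None)

    def act(self, task):
        # Stand-in "tool call": book the task on the first day with room for it.
        if task.hours > self.hours_per_day:
            raise ValueError(f"{task.name} does not fit in a single day")
        day = 0
        while self.load.get(day, 0) + task.hours > self.hours_per_day:
            day += 1
        self.calendar.setdefault(day, []).append(task.name)
        self.load[day] = self.load.get(day, 0) + task.hours
        task.done = True

    def run(self, tasks, max_steps=100):
        # Loop without human input until the goal (no open tasks) is reached.
        for _ in range(max_steps):
            task = self.decide(tasks)
            if task is None:
                break
            self.act(task)
        return self.calendar


tasks = [Task("report", 5), Task("review", 2), Task("email", 1), Task("design", 4)]
print(SchedulingAgent(hours_per_day=8).run(tasks))
# {0: ['report', 'review', 'email'], 1: ['design']}
```

The agent picks the order and the placement on its own; swapping the hard-coded `decide` for a model call is what turns a script like this into the kind of autonomous assistant described above.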

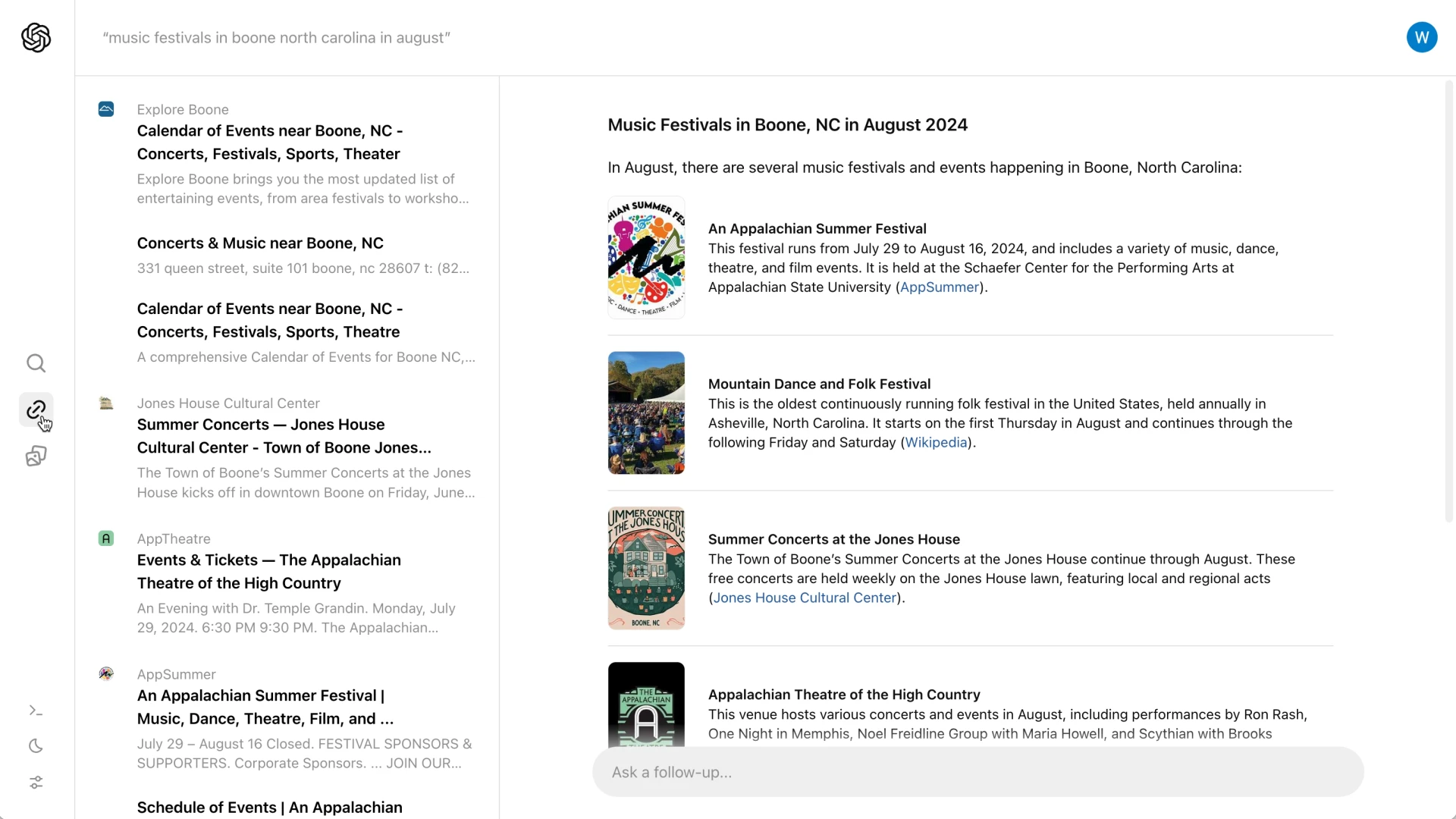

2. Multimodal AI: The Ultimate All-Rounder

This tech brings together text, images, audio, and video in one seamless system. It’s why future virtual assistants won’t just understand what you’re saying—they’ll pick up on your tone, facial expressions, and even the context of your surroundings.

Here are a few examples:

- Healthcare: Multimodal systems could analyze medical data from multiple sources to provide faster and more accurate diagnoses.

- Everyday life: Imagine an assistant that can help you plan a trip by analyzing reviews, photos, and videos instantly.

Gartner predicts that by 2027, 40% of generative AI solutions will be multimodal, up from just 1% in 2023.
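One common way to combine modalities is early fusion: each input is encoded separately, and the resulting vectors are normalized and concatenated into a single representation that can be compared or classified. The sketch below assumes the per-modality embeddings already exist (in practice they would come from separate text, image, and audio encoders); the function names and toy vectors are illustrative, not any particular product’s API.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so no modality dominates by magnitude alone."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)


def fuse(embeddings, weights=None):
    """Early fusion: normalize each modality's embedding, weight it, concatenate."""
    weights = weights or {}
    fused = []
    for modality in sorted(embeddings):  # fixed order so fused vectors line up
        w = weights.get(modality, 1.0)
        fused.extend(w * x for x in l2_normalize(embeddings[modality]))
    return fused


def cosine(a, b):
    """Cosine similarity between two fused vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hypothetical embeddings for a travel query and a hotel listing.
query = fuse({"text": [0.9, 0.1], "image": [0.2, 0.8]})
listing = fuse({"text": [0.8, 0.2], "image": [0.1, 0.9]})
print(round(cosine(query, listing), 3))
```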

3. Synthetic Data: The Privacy-Friendly Solution

AI systems need data to learn, but real-world data often comes with privacy concerns or availability issues. Enter synthetic data—artificially generated datasets that mimic the real thing without exposing sensitive information.

Here is how this could play out:

- Scalable innovation: Synthetic data supports everything from training autonomous vehicles in simulated environments to generating rare medical data for pharmaceutical research.

- Governance imperatives: Developers are increasingly building audit-friendly systems to ensure transparency, accountability, and alignment with regulatory standards.

Synthetic data is a win-win, helping developers innovate faster while respecting privacy.
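As a minimal illustration, the sketch below fits a mean and standard deviation to each numeric column of a small "real" table and then samples brand-new rows from independent normal distributions. It is deliberately naive and the column names are hypothetical: sampling columns independently discards the correlations between them, and matching summary statistics alone does not guarantee privacy. Production generators use richer models (simulators, copulas, or generative networks) together with privacy evaluation.

```python
import random
import statistics


def fit_columns(rows):
    """Learn a mean and sample standard deviation for each numeric column."""
    columns = rows[0].keys()
    return {
        c: (statistics.mean([r[c] for r in rows]), statistics.stdev([r[c] for r in rows]))
        for c in columns
    }


def sample_synthetic(params, n, seed=0):
    """Draw n artificial rows that follow the fitted per-column statistics.

    Columns are sampled independently, so correlations in the real data are lost.
    """
    rng = random.Random(seed)
    return [{c: rng.gauss(mu, sigma) for c, (mu, sigma) in params.items()} for _ in range(n)]


# Hypothetical "real" patient records: age and systolic blood pressure.
real = [{"age": 30, "bp": 120}, {"age": 40, "bp": 130}, {"age": 50, "bp": 140}]
synthetic = sample_synthetic(fit_columns(real), n=5)
print(synthetic[0])
```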

Industries AI Is Transforming Right Now

AI is already making waves across industries. Survey data on regular generative AI use by business function shows where adoption is furthest along:

| Business function | Share of respondents regularly using gen AI in their own roles (Source) |
|---|---|
| Marketing and sales | 14% |
| Product and/or service development | 13% |
| Service operations | 10% |
| Risk management | 4% |
| Strategy and corporate finance | 4% |
| HR | 3% |
| Supply chain management | 3% |
| Manufacturing | 2% |

Healthcare

AI is already helping to save lives. From analyzing medical images to recommending personalized treatments, it’s making healthcare smarter, faster, and more accessible. In some applications, early detection tools are already outperforming traditional methods, helping doctors catch problems before they escalate.

Retail

Generative AI is enabling hyper-personalized marketing campaigns, while predictive inventory models reduce waste by aligning supply chains more precisely with demand patterns. Retailers adopting these technologies are reporting significant gains in operational efficiency. According to McKinsey, generative AI could unlock $240 billion to $390 billion in economic value for retailers.
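A predictive inventory model can start as simply as forecasting demand from recent sales and ordering just enough to cover it. The sketch below uses simple exponential smoothing and an order-up-to rule; the function names, the smoothing factor `alpha`, and the safety-stock figure are illustrative assumptions, and real retail forecasting adds seasonality, promotions, and many more signals.

```python
def forecast_next(demand, alpha=0.3):
    """Simple exponential smoothing: each new observation nudges the running level."""
    if not demand:
        raise ValueError("need at least one observation")
    level = demand[0]
    for observed in demand[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level


def order_quantity(on_hand, daily_forecast, lead_time_days, review_days, safety_stock):
    """Order-up-to rule: cover forecast demand over lead time plus review period, plus a buffer."""
    target = daily_forecast * (lead_time_days + review_days) + safety_stock
    return max(0, round(target - on_hand))


daily_sales = [12, 15, 11, 14, 18, 16, 15]  # hypothetical units sold per day
forecast = forecast_next(daily_sales)
print(order_quantity(on_hand=40, daily_forecast=forecast,
                     lead_time_days=2, review_days=5, safety_stock=10))
```

Ordering to a forecast rather than a fixed quantity is what lets stock track demand patterns instead of piling up.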

Education

Beyond adaptive learning, AI is now augmenting teaching itself. For example, generative AI tools help educators create tailored curricula and interactive teaching aids while reducing administrative burdens.

Transportation & logistics

AI’s integration with IoT systems has given companies far greater visibility into logistics networks, improving route optimization, inventory management, and risk mitigation across global supply chains.

What’s Next? AI Trends to Watch in 2025

So, where is AI headed? Here are the big trends shaping the future:

1. Self-Improving AI Models

AI systems that refine themselves in real time are emerging as a critical trend. These self-improving models use continuous learning loops to improve accuracy and relevance with minimal human oversight. Use cases include real-time fraud detection and adaptive cybersecurity.
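The heart of such a continuous learning loop can be sketched as an online model that updates its parameters after every labeled event instead of waiting for a periodic retrain. Below, a plain logistic regression takes one stochastic-gradient step per transaction; the class name, learning rate, and two-feature fraud example are assumptions for illustration, and a production system would add drift monitoring, validation gates, and human review before trusting the updates.

```python
import math


class OnlineLogisticModel:
    """Logistic regression updated one labeled event at a time (online SGD)."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.lr = learning_rate

    def predict_proba(self, x):
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        # Numerically stable sigmoid for large positive or negative z.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)

    def update(self, x, label):
        # Gradient of log loss for a single example is (p - y) * x.
        error = self.predict_proba(x) - label
        self.weights = [w - self.lr * error * xi for w, xi in zip(self.weights, x)]
        self.bias -= self.lr * error


model = OnlineLogisticModel(n_features=2)
# Hypothetical stream: features = [new_device, foreign_merchant], label 1 = confirmed fraud.
stream = [([1.0, 1.0], 1), ([0.0, 0.0], 0)] * 200
for features, label in stream:
    model.update(features, label)
print(round(model.predict_proba([1.0, 1.0]), 2), round(model.predict_proba([0.0, 0.0]), 2))
```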

2. Synthetic Data’s New Frontiers

Synthetic data is moving beyond privacy-driven applications into more sophisticated scenarios, such as training AI for edge cases and simulating rare or hazardous events. Industries like autonomous driving are heavily investing in this area to model corner cases at scale.
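For tabular data, one long-standing way to create extra examples of a rare event is SMOTE-style interpolation: pick a real rare example, pick one of its nearest rare neighbors, and generate a new point somewhere on the line between them. The sketch below is a simplified version of that idea with hypothetical names and toy data; simulating hazardous driving scenarios relies on full physics and sensor simulators rather than anything this simple.

```python
import math
import random


def smote_like(minority, n_new, k=2, seed=0):
    """Create n_new synthetic rare-event points by interpolating between near neighbors."""
    if len(minority) < 2:
        raise ValueError("need at least two rare examples to interpolate")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        idx = rng.randrange(len(minority))
        base = minority[idx]
        others = [p for j, p in enumerate(minority) if j != idx]
        # The k nearest other rare examples to the chosen base point.
        neighbors = sorted(others, key=lambda p: math.dist(base, p))[:k]
        partner = rng.choice(neighbors)
        gap = rng.random()  # position along the segment from base to partner
        synthetic.append([b + gap * (q - b) for b, q in zip(base, partner)])
    return synthetic


# Hypothetical scaled sensor readings recorded just before a rare fault.
rare_events = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
print(smote_like(rare_events, n_new=3))
```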

3. Domain-Specific AI Architectures

The era of general-purpose AI is giving way to domain-specialized architectures. Developers are fine-tuning models for specific verticals like finance, climate modeling, and genomic research, unlocking new levels of precision and efficiency.

4. Edge AI at Scale

Edge AI processes data locally on a device instead of relying on the cloud. Once limited to niche applications, its real-time capabilities are now moving into mainstream adoption. Industries are using edge computing to deploy low-latency AI models in environments with limited connectivity, from remote healthcare facilities to smart manufacturing plants.

5. Collaborative AI Ecosystems

AI is becoming less siloed, with ecosystems that enable interoperability between diverse models and platforms. This fosters more robust solutions through collaboration, particularly in multi-stakeholder environments like healthcare and urban planning.

The Challenges Ahead

While the future of AI is bright, it’s not without hurdles. Here’s what we need to tackle:

Regulations and Ethics

The European Union’s AI Act and California’s data transparency laws are just the beginning. Developers and policymakers must work together to ensure that AI is used responsibly and ethically.

Bias and Fairness

Even as model interpretability improves, the risk of bias remains significant. Developers must prioritize diverse, high-quality datasets and incorporate fairness metrics into their pipelines to mitigate unintended consequences.
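A fairness metric can be as simple as comparing how often a model says yes to each group. The sketch below computes the demographic parity difference, the gap between the highest and lowest group selection rates; the function names, toy data, and the idea of failing a pipeline above a chosen threshold are assumptions for illustration. Libraries such as Fairlearn provide tested implementations of this and related metrics.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (e.g. 'approve') within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


preds = [1, 1, 0, 0, 1, 0, 0, 0]  # 1 = approved
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap = demographic_parity_difference(preds, groups)
print(gap)  # 0.25: group a is approved 50% of the time, group b 25%

MAX_GAP = 0.1  # hypothetical policy threshold
if gap > MAX_GAP:
    print("fairness check failed")
```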

Sustainability

Training massive AI models uses a lot of energy. Innovations in model compression and energy-efficient hardware are critical to aligning AI development with sustainability goals.
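Model compression can be illustrated with post-training quantization, one of several common techniques: weights stored as 32-bit floats are mapped to 8-bit integers plus a single scale factor, cutting their memory footprint roughly fourfold at the cost of a small rounding error. This is a minimal per-tensor, symmetric sketch in plain Python with hypothetical function names; real toolchains typically quantize per channel, calibrate activations, and run on integer hardware kernels.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: small integers plus one float scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    # Clamp defensively so every value fits in the signed 8-bit range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights for computation or inspection."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 1.27, 0.031]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)
print(max(abs(a - b) for a, b in zip(weights, restored)))  # about scale / 2 at most
```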

Looking Ahead: How AI Will Shape the Future

AI’s potential to reshape industries and address global challenges is immense. But how exactly will it impact our future? Here’s a closer look:

Addressing Global Challenges

AI-powered tools are analyzing climate patterns, optimizing renewable energy sources, and predicting natural disasters with greater accuracy. For example, AI models can help farmers adapt to climate change by predicting rainfall patterns and suggesting optimal crop rotations.

AI is democratizing healthcare access by enabling remote diagnostics and treatment recommendations. In underserved areas, AI tools are acting as virtual healthcare providers, bridging the gap caused by shortages of medical professionals.

Transforming Work

While AI will automate repetitive tasks, it’s also creating demand for roles in AI ethics, system training, and human-AI collaboration. The workplace is becoming a dynamic partnership between humans and AI, where tasks requiring intuition and empathy are complemented by AI’s precision and scale.

Job roles will evolve toward curating, managing, and auditing AI systems rather than direct task execution.

Tackling Security Threats

AI’s sophistication also introduces risks. Cyberattacks powered by AI and deepfake technologies are becoming more prevalent. To counter them, predictive threat models and autonomous response systems can cut the time to respond to a breach from hours to seconds.
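Predictive threat models vary widely, but the basic pattern of learning a baseline and reacting automatically when activity departs from it can be shown with a rolling z-score detector. In this sketch the monitored metric (failed logins per minute), the window size, the 3-standard-deviation threshold, and the automated response are all illustrative assumptions; real systems combine many signals and learned models.

```python
import statistics
from collections import deque


class ZScoreDetector:
    """Flags a metric that jumps far above its recent rolling baseline."""

    def __init__(self, window=30, threshold=3.0):
        self.history = deque(maxlen=window)  # only the most recent values are kept
        self.threshold = threshold

    def observe(self, value):
        alert = False
        if len(self.history) >= 2:
            mean = statistics.mean(self.history)
            stdev = statistics.pstdev(self.history) or 1e-9  # avoid division by zero
            alert = (value - mean) / stdev > self.threshold
        # Simplification: spikes also enter the baseline window.
        self.history.append(value)
        return alert


detector = ZScoreDetector(window=30, threshold=3.0)
failed_logins_per_minute = [5, 6, 5, 4, 5, 6, 5, 5, 50]
for minute, count in enumerate(failed_logins_per_minute):
    if detector.observe(count):
        # Hypothetical automated response.
        print(f"minute {minute}: spike of {count} failed logins, locking affected accounts")
```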

Wrapping It Up: Are You Ready for the Future?

2025 is not just another year for AI—it’s a tipping point. With advancements like agentic AI, multimodal systems, and synthetic data reshaping industries, the onus is on tech entrepreneurs and developers to navigate this evolving landscape with precision and foresight. The future isn’t just about adopting AI; it’s about shaping its trajectory responsibly.