Improving Research Productivity with AI Tools

The landscape of modern research is undergoing a transformative shift, thanks to the advent of Artificial Intelligence (AI). These intelligent systems are making it easier for researchers to process vast amounts of data and extract valuable insights quickly. A crucial component of this transformation is the suite of tools powered by Generative Pre-trained Transformers (GPT), which are designed to handle complex tasks with high efficiency.

AI tools are becoming indispensable in academic and professional research settings. They assist in summarizing intricate research papers, conducting advanced searches, and enhancing documentation quality. By leveraging these tools, researchers can significantly streamline their workflows and focus more on innovative thinking and problem-solving.

1. Summarizing Complex Research Papers

One of the most time-consuming tasks in research is deciphering complex papers. Fortunately, GPT-powered tools have become invaluable in this domain. SummarizePaper.com is an open-source AI tool specifically designed to summarize articles from arXiv, making them more digestible for researchers.

Additionally, Unriddl simplifies complex topics and provides concise summaries, allowing researchers to grasp intricate ideas swiftly. Another notable tool is Wordtune, which can quickly summarize long documents, helping researchers absorb large volumes of material efficiently. These advancements enable scholars to save time and focus on critical analysis and innovation.

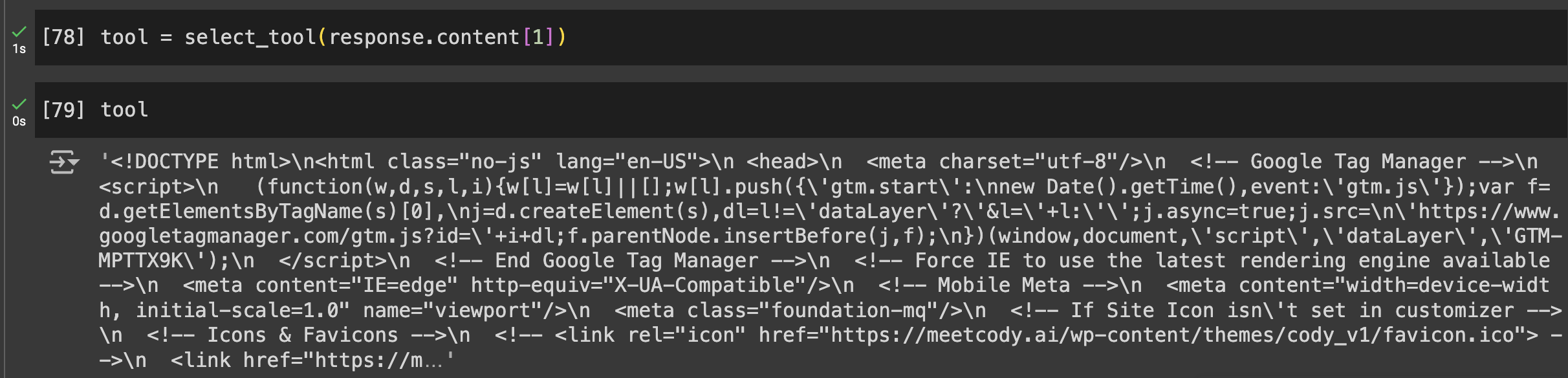

For those looking for a more versatile tool, Cody AI is another strong choice. It offers selective document analysis, is model-agnostic, and lets you share bots trained on your research papers.

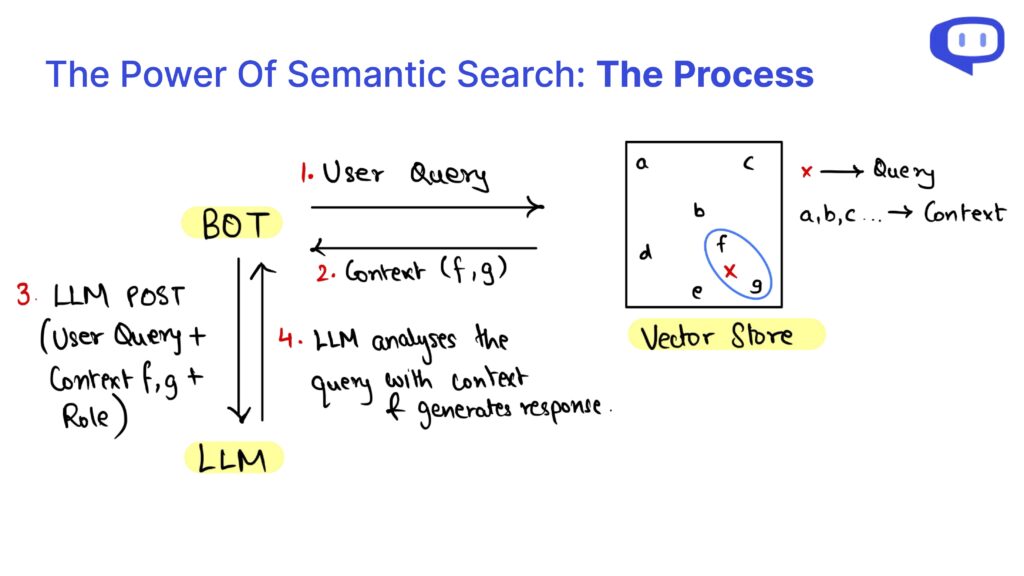

2. Advanced Search and Information Retrieval

Finding precise information quickly is paramount in research, and AI tools excel in this area. Searcholic is an AI-powered search engine that helps researchers locate a wide range of eBooks and documents effortlessly. This tool makes it easier to access diverse sources of information, ensuring that researchers have comprehensive content at their fingertips.

Another powerful tool is Semantic Scholar, which offers access to over 211 million scientific papers. This AI tool enables users to conduct thorough literature reviews by providing advanced search functionalities tailored for scientific research.

Finally, Perplexity combines the functionalities of a search engine and a chatbot, allowing researchers to ask questions and receive detailed answers swiftly. This hybrid approach not only saves time but also improves the efficiency of information retrieval, making it an indispensable tool for modern researchers.

3. Enhancing Research Documentation

Effective documentation is crucial for the dissemination and validation of research. Penelope AI is an invaluable tool that allows researchers to check their academic manuscripts before submission to journals, helping them confirm that their work adheres to journal standards and guidelines.

Another indispensable tool is Grammarly, which corrects grammar and spelling errors, thereby improving the readability and professionalism of research documents. This contributes to the overall quality and clarity of the research, making it more accessible to a wider audience.

Moreover, Kudos helps researchers explain their work in plain language and create visually appealing pages. This service enhances the visibility of research by translating complex topics into more understandable content, thus broadening the potential impact of the research findings.

These tools collectively ensure that research documentation is thorough, well-presented, and comprehensible, ultimately aiding in the effective communication of scientific discoveries.

Conclusion: Embracing AI for Future Research

Incorporating GPT and AI tools into the research process offers numerous benefits, from summarizing complex research papers to enhancing documentation. Tools like SummarizePaper.com and Unriddl simplify the understanding of intricate topics by providing concise summaries, making academic literature more accessible. Additionally, AI-powered search engines like Semantic Scholar facilitate efficient information retrieval, greatly enhancing the research workflow.

For documentation, tools such as Penelope AI and Grammarly ensure papers meet high standards and communicate clearly. Kudos further broadens the reach of research by translating complex findings into plain language. These AI tools collectively enhance the precision, efficiency, and impact of research activities.

As we continue to embrace AI in research, we not only improve individual workflows but also contribute to the broader scientific community. Integrating these advanced tools is a step towards more efficient, accurate, and accessible research, driving future innovation and discoveries.