How to Automate Tasks with Anthropic’s Tools and Claude 3?

Getting started with Anthropic’s Tools

The greatest benefit of employing LLMs for tasks is their versatility. LLMs can be prompted in specific ways to serve a myriad of purposes, functioning as APIs for text generation or converting unstructured data into organized formats. Many of us turn to ChatGPT for our daily tasks, whether it’s composing emails or engaging in playful debates with the AI.

The architecture of plugins, also known as ‘GPTs’, revolves around identifying keywords from responses and queries and executing relevant functions. These plugins enable interactions with external applications or trigger custom functions.

While OpenAI led the way in enabling external function calls for task execution, Anthropic has recently introduced an enhanced feature called ‘Tool Use’, replacing their previous function calling mechanism. This updated version simplifies development by utilizing JSON instead of XML tags. Additionally, Claude-3 Opus boasts an advantage over GPT models with its larger context window of 200K tokens, particularly valuable in specific scenarios.

In this blog, we will explore the concept of ‘Tool Use’, discuss its features, and offer guidance on getting started.

What is ‘Tool Use’?

Claude has the capability to interact with external client-side tools and functions, enabling you to equip Claude with your own custom tools for a wider range of tasks.

The workflow for using Tools with Claude is as follows:

- Provide Claude with tools and a user prompt (API request)

- Define a set of tools for Claude to choose from.

- Include them along with the user query in the text generation prompt.

- Claude selects a tool

- Claude analyzes the user prompt and compares it with all available tools to select the most relevant one.

- Utilizing the LLM’s ‘thinking’ process, it identifies the keywords required for the relevant tool.

- Response Generation (API Response)

- Upon completing the process, the thinking prompt, along with the selected tool and parameters, is generated as the output.

Following this process, you execute the selected function/tool and utilize its output to generate another response if necessary.

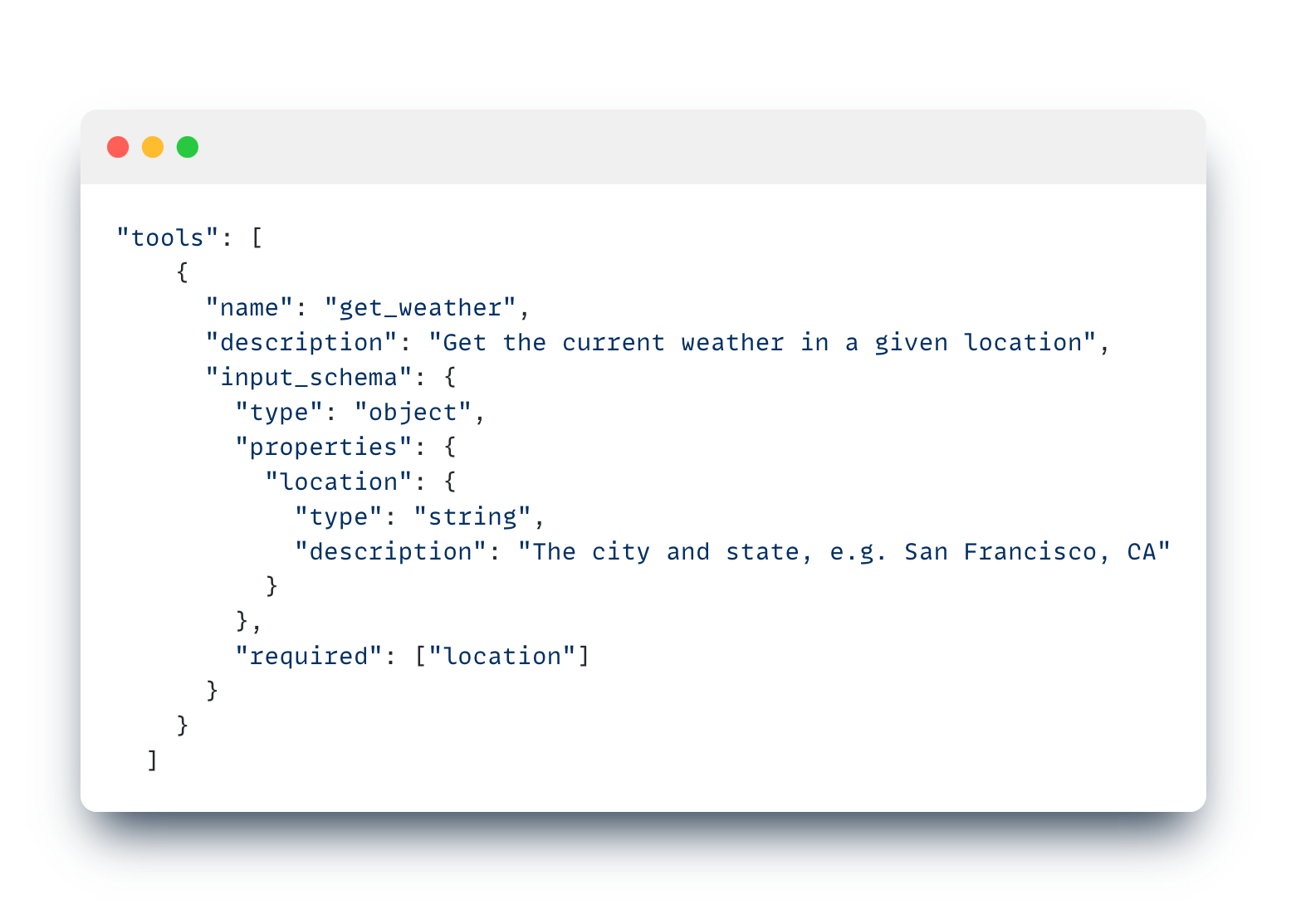

General schema of the tool

This schema serves as a means of communicating the requirements for the function calling process to the LLM. It does not directly call any function or trigger any action on its own. To ensure accurate identification of tools, a detailed description of each tool must be provided. Properties within the schema are utilized to identify the parameters that will be passed into the function at a later stage.

Demonstration

Let’s go ahead and build tools for scraping the web and finding the price of any stock.

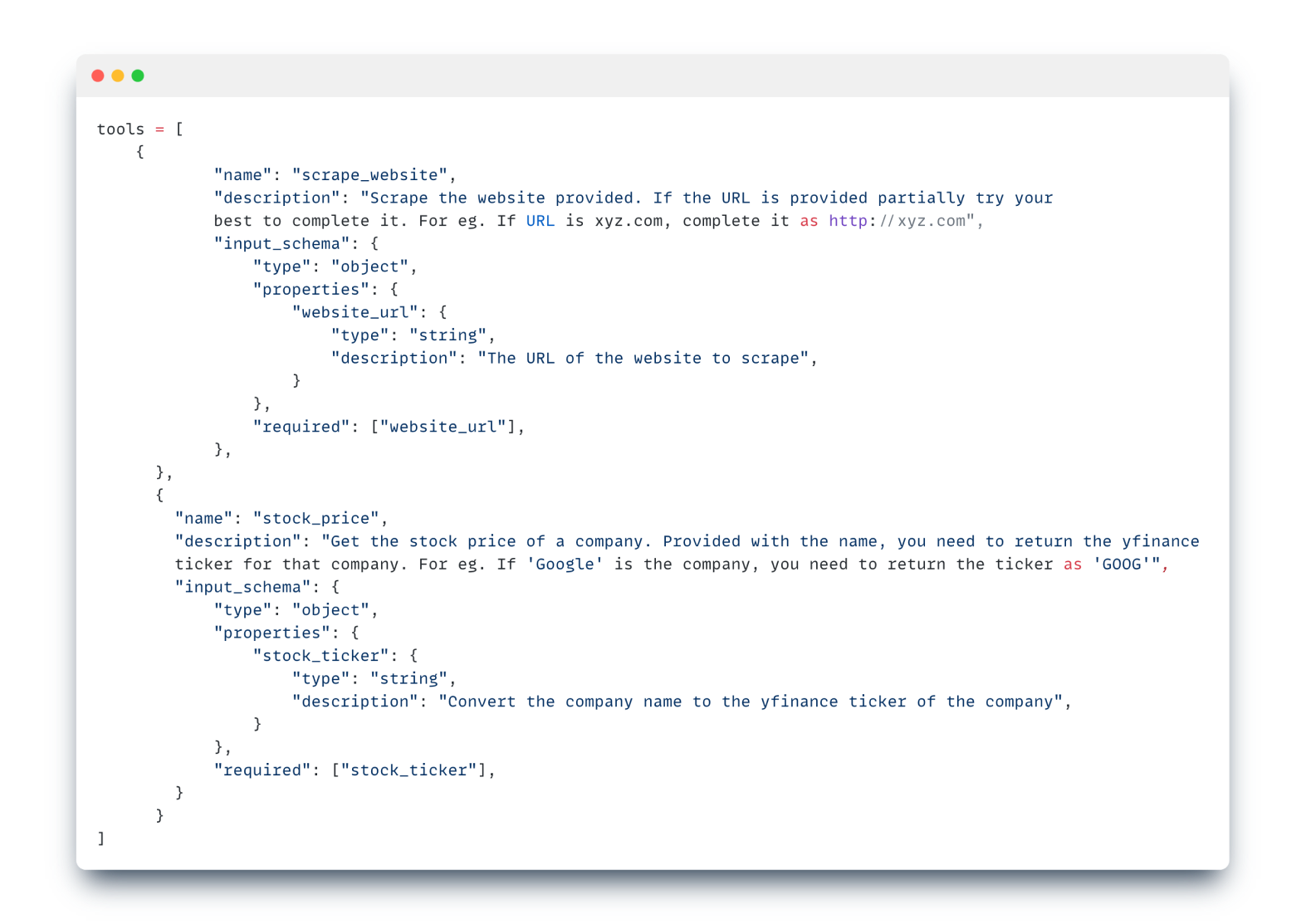

Tools Schema

In the scrape_website tool, it will fetch the URL of the website from the user prompt. As for the stock_price tool, it will identify the company name from the user prompt and convert it to a yfinance ticker.

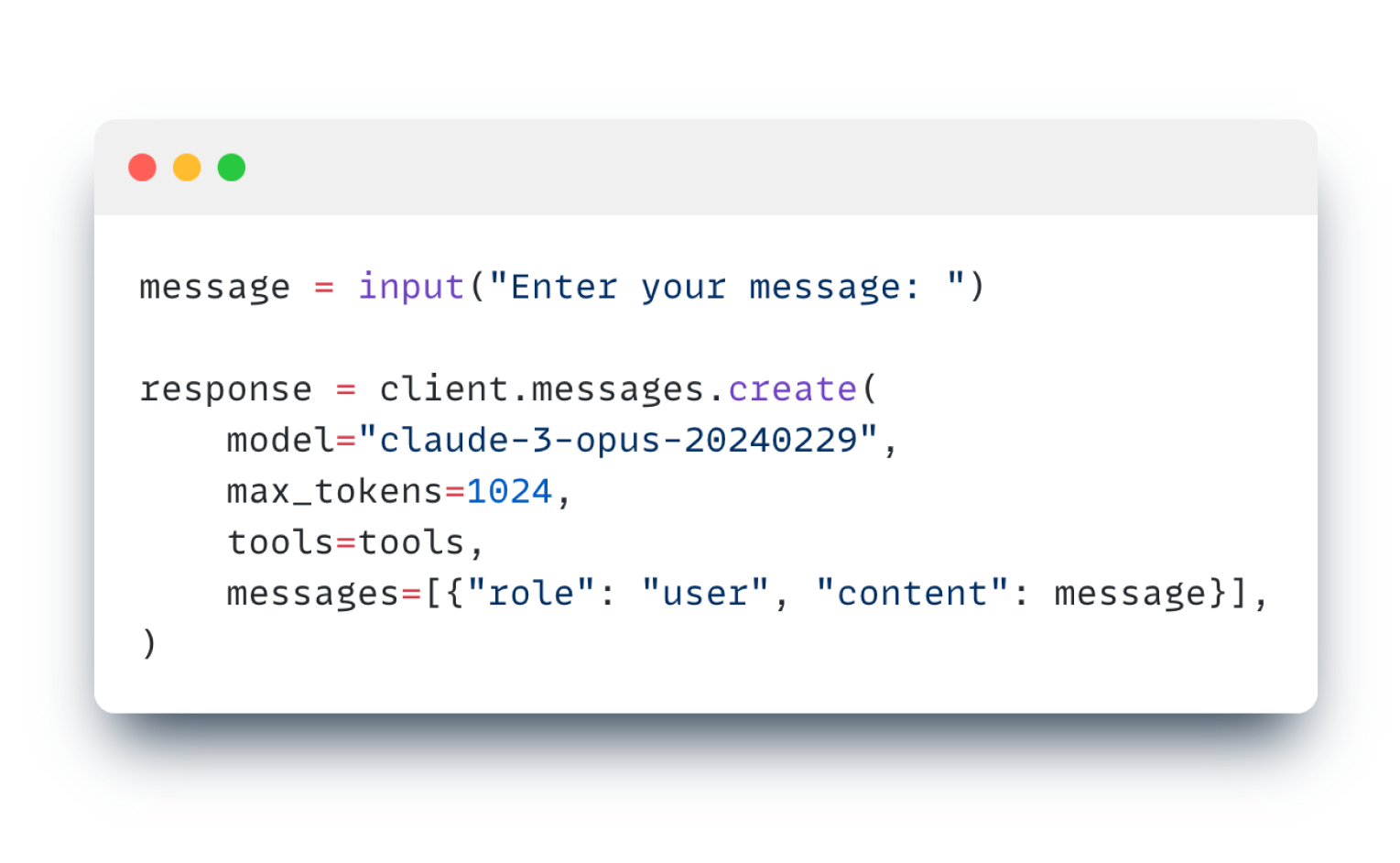

User Prompt

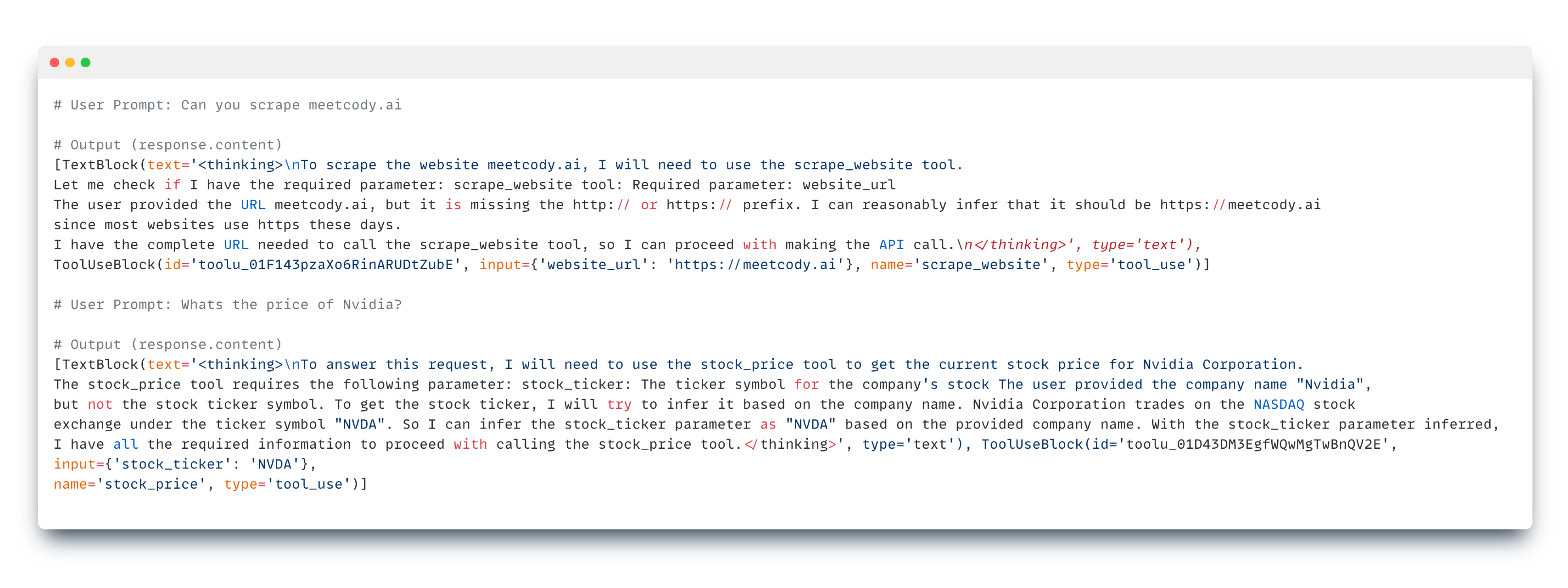

Asking the bot two queries, one for each tool, gives us the following outputs:

The thinking process lists out all the steps taken by the LLM to accurately select the correct tool for each query and executing the necessary conversions as described in the tool descriptions.

Selecting the relevant tool

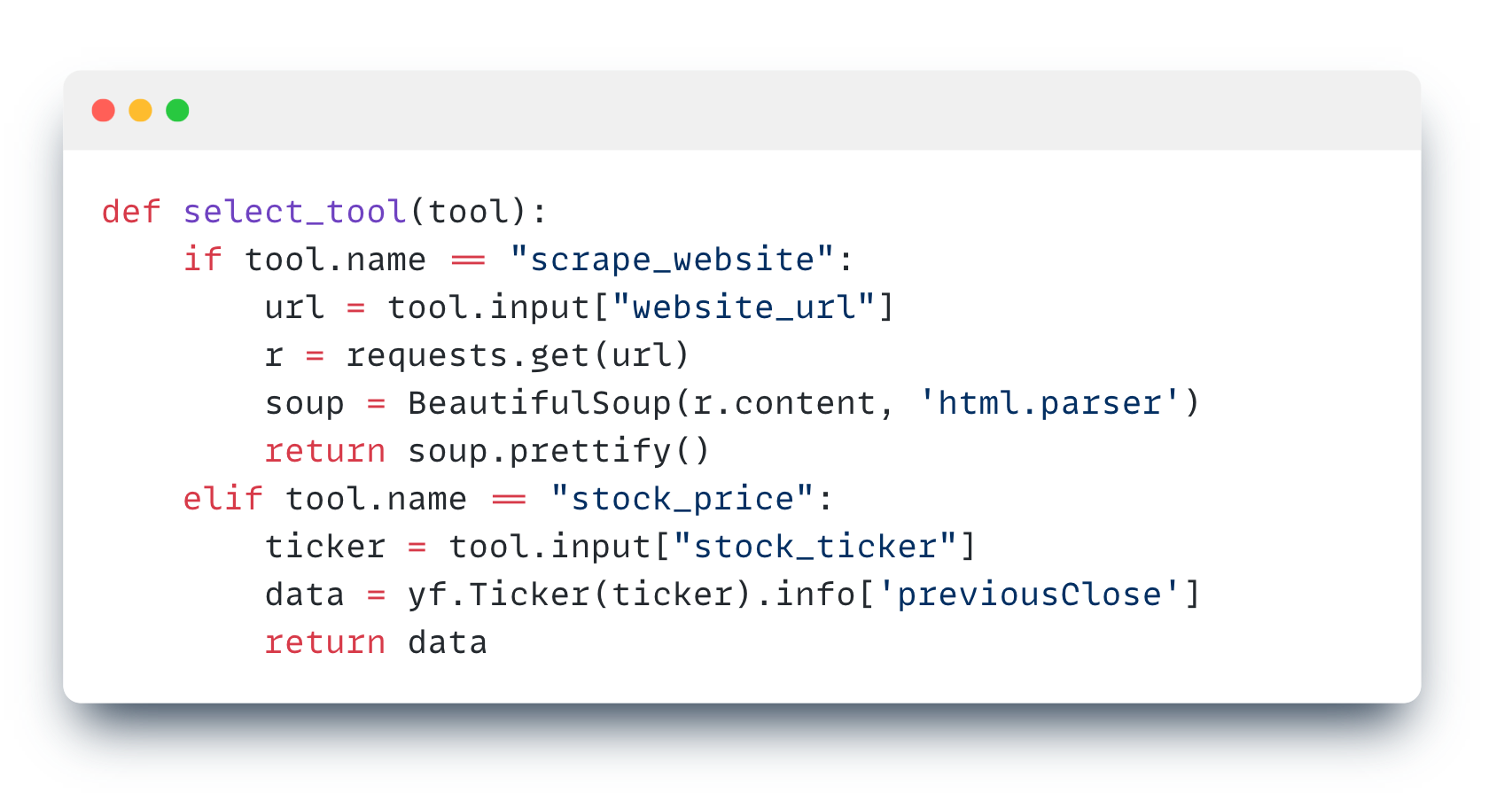

We will have to write some additional code that will trigger the relevant functions based on conditions.

This function serves to activate the appropriate code based on the tool name retrieved in the LLM response. In the first condition, we scrape the website URL obtained from the Tool input, while in the second condition, we fetch the stock ticker and pass it to the yfinance python library.

Executing the functions

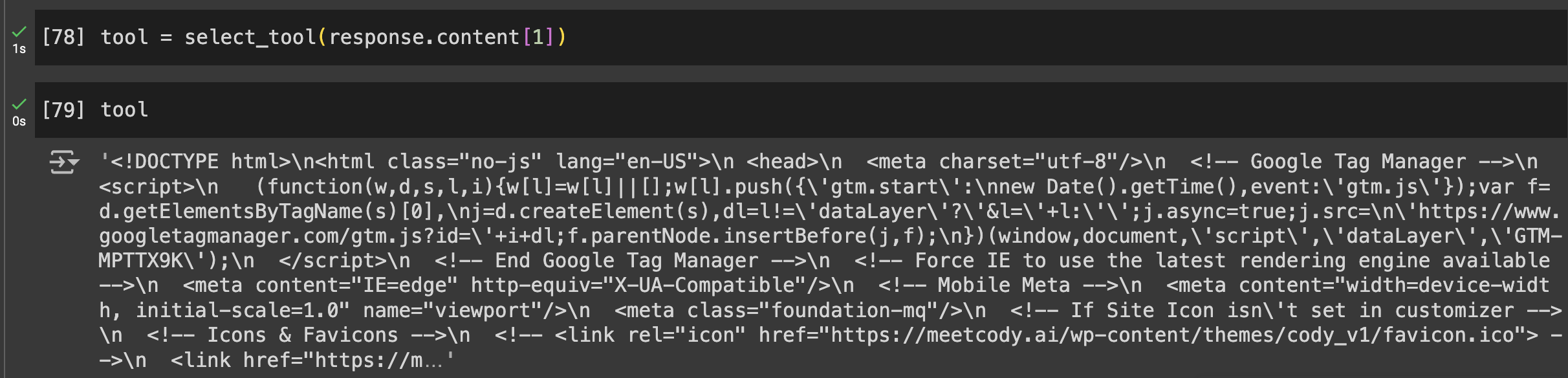

We will pass the entire ToolUseBlock in the select_tool() function to trigger the relevant code.

Outputs

- First Prompt

- Second Prompt

If you want to view the entire source code of this demonstration, you can view this notebook.

Some Use Cases

The ‘tool use’ feature for Claude elevates the versatility of the LLM to a whole new level. While the example provided is fundamental, it serves as a foundation for expanding functionality. Here is one real-life application of it:

Yesterday @AnthropicAI launched a tool use beta!

Here’s an example of something I built with it: a customer service bot that can actually resolve your issue!

Looking forward to seeing what else people build! https://t.co/Xmi7pnwouS pic.twitter.com/T5bE4peexR

— Erik Schluntz (@ErikSchluntz) April 5, 2024

To find more use-cases, you can visit the official repository of Anthropic here.