Groq and Llama 3: A Game-Changing Duo

A couple of months ago, a company named ‘Groq’ seemingly came out of nowhere and made a splash in the AI industry. It offers developers a platform for using LPUs as inference engines for LLMs, especially open-source models like Llama, Mixtral, and Gemma. In this blog, let’s explore what makes Groq so special and the technology behind LPUs.

What is Groq?

“Groq is on a mission to set the standard for GenAI inference speed, helping real time AI applications come to life today.” — The Groq Website

Groq doesn’t develop LLMs like GPT or Gemini. Instead, it focuses on the foundation these large language models run on: the hardware. Groq serves as an ‘inference engine’, the system that runs an already-trained model to generate its responses. Currently, most LLMs on the market run on GPUs deployed on private servers or in the cloud. These GPUs, sourced from companies like Nvidia, are powerful and expensive, but their architecture was not designed specifically for LLM inference, so it may not be the best fit for that job (although GPUs remain the preferred choice for training models).

Groq’s inference engine runs on LPUs (Language Processing Units).

What is an LPU?

A Language Processing Unit is a chip designed specifically for LLMs. Rather than adapting an existing CPU or GPU design, it uses its own architecture, aimed at improving the speed, predictability, performance, and accuracy of LLM-based AI applications.

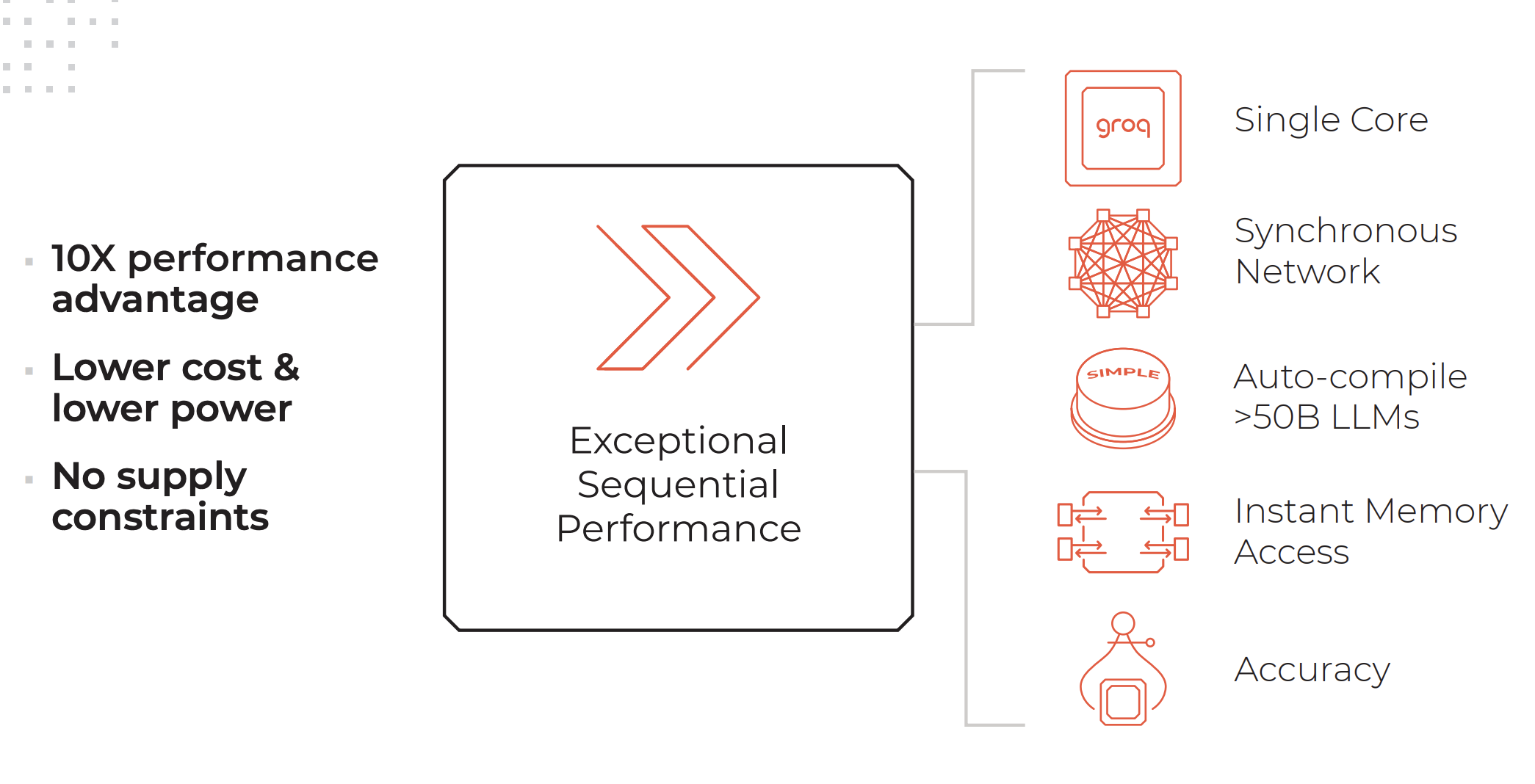

Key attributes of an LPU system. Credits: Groq

An LPU system has as much compute as a Graphics Processing Unit (GPU), or more, and reduces the time it takes to calculate each word, which allows text sequences to be generated faster.
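Why does the time per word matter so much? LLMs generate text autoregressively: each new token is predicted from all the tokens before it, so tokens come out one after another rather than all at once. The toy Python sketch below illustrates this. `toy_next_token` is a hypothetical stand-in for a real model's forward pass, and the per-token timings are made-up numbers. It shows that total generation time grows linearly with per-token latency, which is the quantity an LPU aims to shrink.

```python
from typing import Callable, List


def generate(next_token: Callable[[List[str]], str],
             prompt: List[str],
             max_new_tokens: int) -> List[str]:
    """Toy autoregressive loop: each step sees every token produced so far."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        # One sequential step (a full forward pass in a real LLM) per new token.
        tokens.append(next_token(tokens))
    return tokens


def toy_next_token(context: List[str]) -> str:
    # Hypothetical stand-in for a model: simply numbers the tokens.
    return f"tok{len(context)}"


def generation_time_ms(num_tokens: int, ms_per_token: float) -> float:
    # Steps depend on each other and cannot run in parallel, so latency adds up.
    return num_tokens * ms_per_token


output = generate(toy_next_token, ["Hello"], max_new_tokens=3)
print(output)  # ['Hello', 'tok1', 'tok2', 'tok3']

# Illustrative numbers only: halving the time per token halves the total time.
print(generation_time_ms(500, 20.0))  # 10000.0
print(generation_time_ms(500, 10.0))  # 5000.0
```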

Features of an LPU inference engine as listed on the Groq website:

- Exceptional sequential performance

- Single core architecture

- Synchronous networking that is maintained even for large scale deployments

- Ability to auto-compile LLMs with more than 50B parameters

- Instant memory access

- High accuracy that is maintained even at lower precision levels

Services provided by Groq:

- GroqCloud: LPUs on the cloud

- GroqRack: 42U rack with up to 64 interconnected chips

- GroqNode: 4U rack-ready scalable compute system featuring eight interconnected GroqCard™ accelerators

- GroqCard: A single chip in a standard PCIe Gen4 x16 form factor, providing hassle-free server integration

“Unlike the CPU that was designed to do a completely different type of task than AI, or the GPU that was designed based on the CPU to do something kind of like AI by accident, or the TPU that modified the GPU to make it better for AI, Groq is from the ground up, first principles, a computer system for AI”— Daniel Warfield, Towards Data Science

To learn more about how LPUs differ from GPUs, TPUs, and CPUs, we recommend this comprehensive article by Daniel Warfield for Towards Data Science.

What’s the point of Groq?

LLMs are incredibly powerful, capable of tasks ranging from parsing unstructured data to answering questions about how cute cats are. Their main drawback, however, is response time. Slow responses add significant latency when LLMs are used in backend processes. For example, fetching data from a database and returning it as JSON is currently much faster with traditional logic than with an LLM performing the transformation. The advantage of an LLM is that it can understand the data and handle exceptions that rigid logic would fail on.
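To make the trade-off concrete, here is a minimal Python sketch of the "traditional logic" route. The rows are hypothetical database results. The code is fast and predictable, but it only handles the exact format it was written for. A value like `"thirty-two"` breaks it, and that kind of exception is where an LLM's flexibility justifies its extra latency.

```python
import json
from typing import Iterable, Tuple


def rows_to_json(rows: Iterable[Tuple[str, str]]) -> str:
    """Deterministic transformation: fast, but only handles the format it expects."""
    records = [{"name": name, "age": int(age)} for name, age in rows]
    return json.dumps(records)


# Hypothetical rows fetched from a database.
print(rows_to_json([("Alice", "32"), ("Bob", "41")]))
# [{"name": "Alice", "age": 32}, {"name": "Bob", "age": 41}]

try:
    rows_to_json([("Carol", "thirty-two")])
except ValueError as err:
    # int() cannot parse free-form text; an LLM could interpret it as 32.
    print("Traditional logic failed:", err)
```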

With the incredible inference speed offered by Groq, this drawback can be greatly reduced. That opens up more and better use cases for LLMs and lowers costs, because an LPU lets you deploy open-source models, which are much cheaper to run, with very fast response times.

Llama 3 on Groq

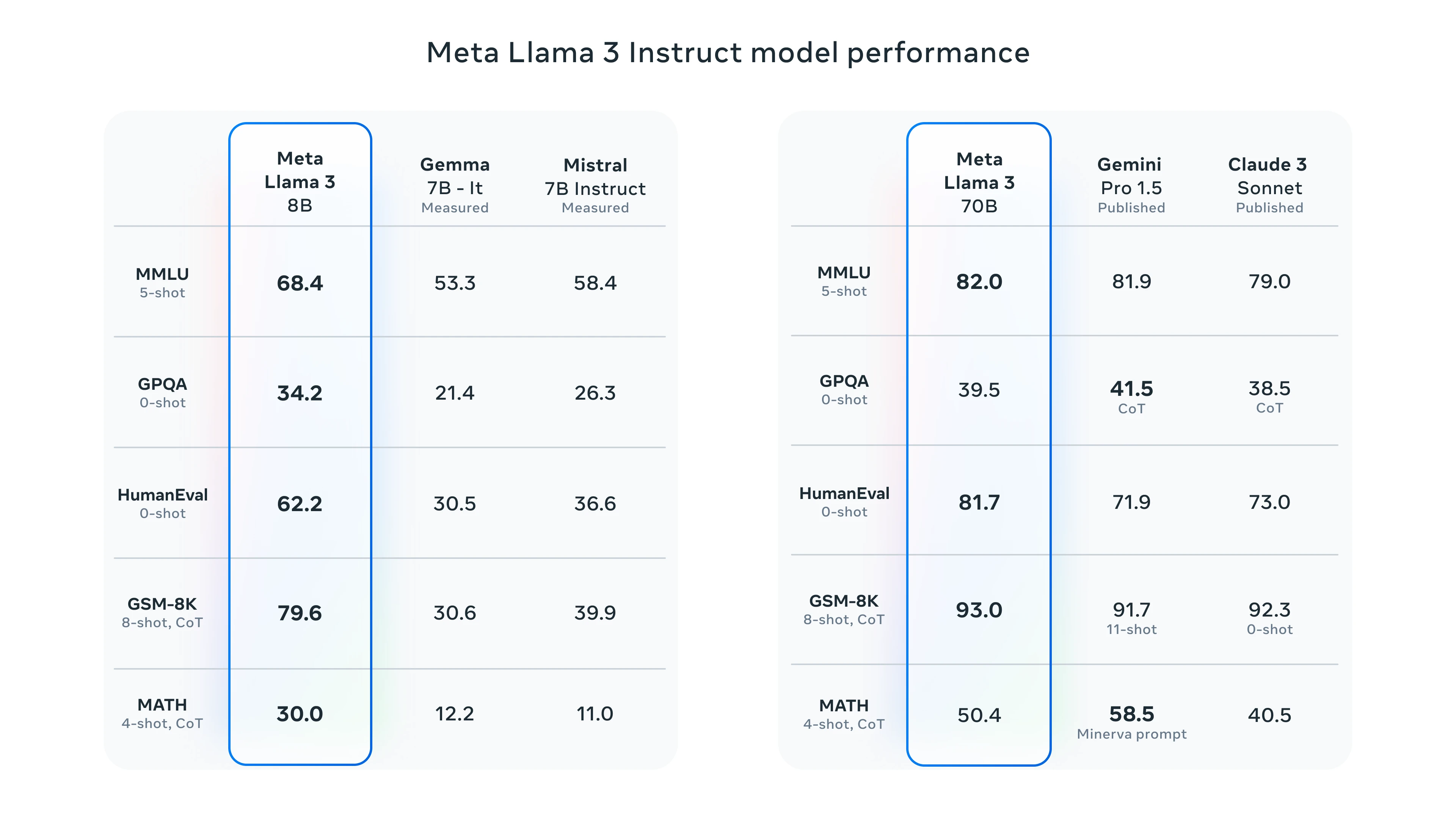

A couple of weeks ago, Meta unveiled the latest iteration of its already powerful and highly capable open-source LLM: Llama 3. Alongside the usual improvements in speed, data comprehension, and token generation, two significant changes stand out:

- Trained on a dataset 7 times larger than Llama 2’s, with 4 times more code.

- Context length doubled to 8,192 tokens (8K), up from 4,096 in Llama 2.

Llama 2 was already a formidable open-source LLM, but with these two updates, the performance of Llama 3 is expected to rise significantly.
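The context length caps the prompt and the response together, so a longer window leaves more room for both. Below is a minimal Python sketch of that budget, assuming an 8,192-token window and that you already know your prompt's token count (counting tokens depends on the model's tokenizer and is not shown). The constant and function names are hypothetical.

```python
# Assumed Llama 3 context window, shared by the prompt and the completion.
LLAMA3_CONTEXT_WINDOW = 8192


def max_completion_tokens(prompt_tokens: int,
                          context_window: int = LLAMA3_CONTEXT_WINDOW) -> int:
    """Largest completion that still fits in the context window (0 if none)."""
    return max(context_window - prompt_tokens, 0)


print(max_completion_tokens(6000))  # 2192
print(max_completion_tokens(9000))  # 0: the prompt must be shortened first
```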

Llama 3 Benchmarks

To test Llama 3, you can use either Meta AI or the Groq playground. Since we want to showcase Groq’s performance, we’ll test Llama 3 on Groq.

Groq Playground

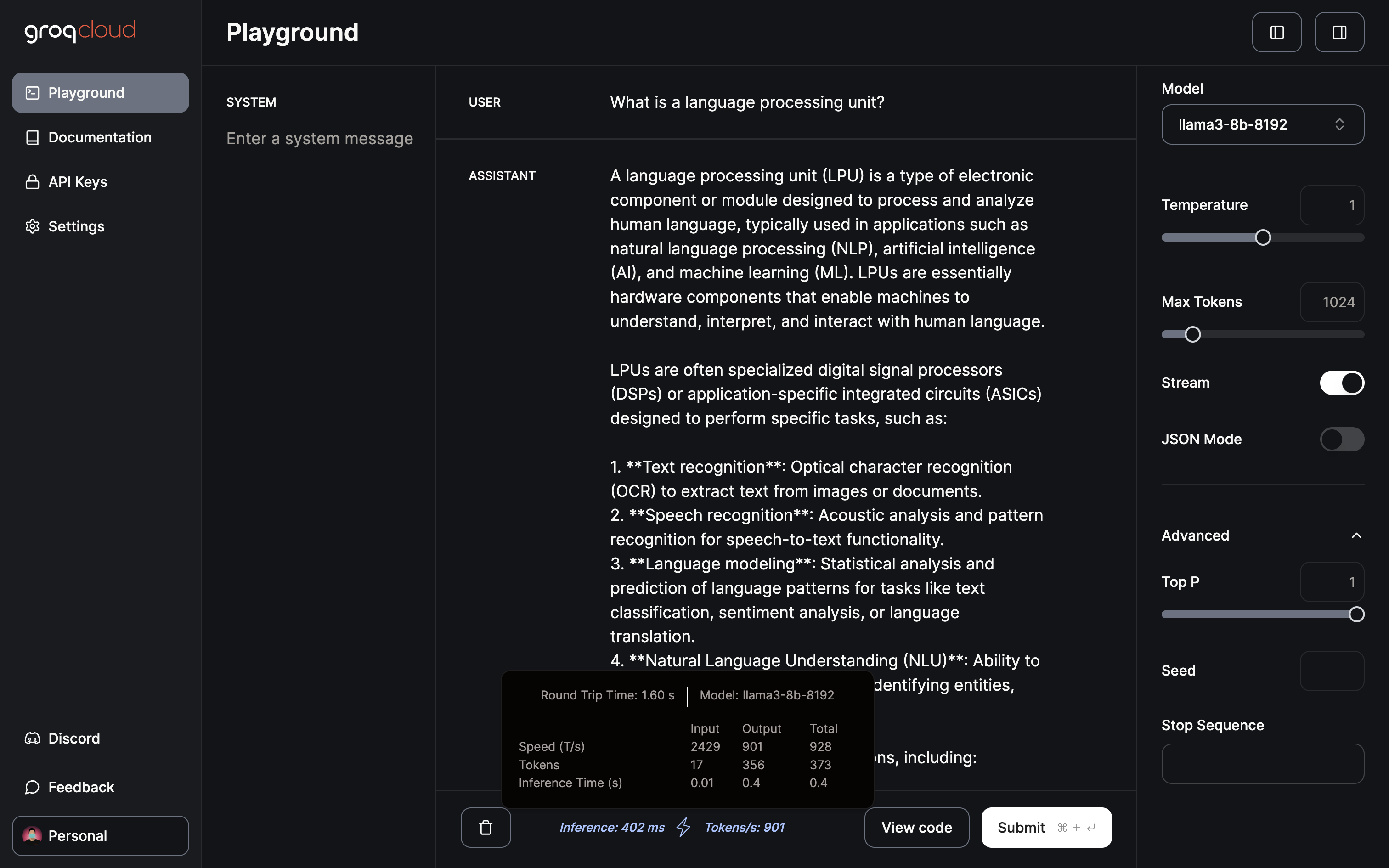

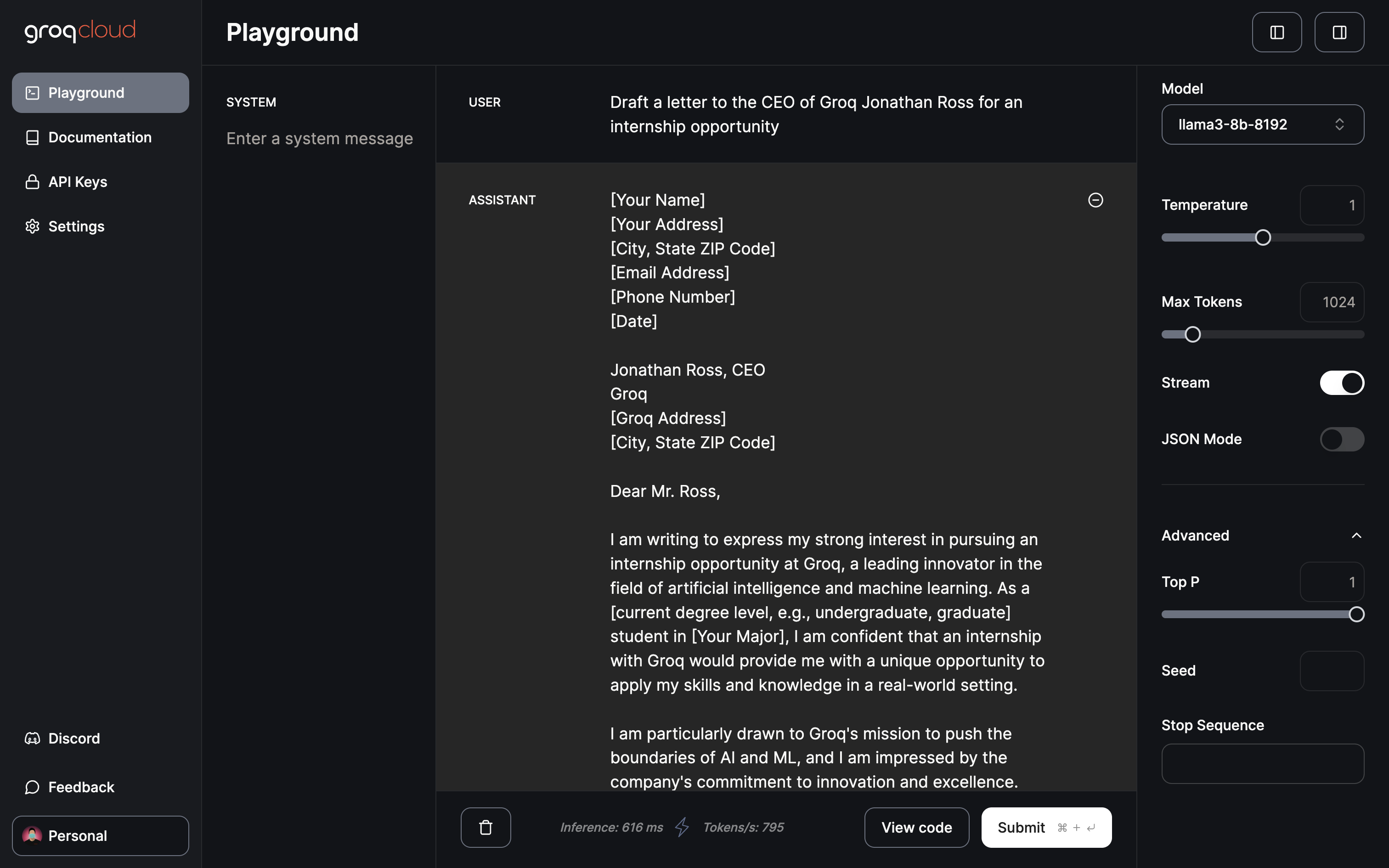

Currently, the Groq playground offers free access to Gemma 7B, Llama 3 70B and 8B, and Mixtral 8x7B. The playground lets you adjust parameters such as temperature, maximum tokens, and streaming. It also has a dedicated JSON mode that restricts the output to JSON.
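The same settings can be used programmatically. Groq exposes an OpenAI-compatible chat completions endpoint, so a request is a JSON body with the familiar fields. The Python sketch below only builds that body and sends nothing. The model ID `llama3-70b-8192` is an assumption based on Groq's naming at the time of writing, and the helper `build_chat_request` is hypothetical, so check Groq's current model list and API docs before relying on either.

```python
import json

# Groq's OpenAI-compatible endpoint. A real request would also need an
# "Authorization: Bearer <GROQ_API_KEY>" header; nothing is sent here.
GROQ_CHAT_URL = "https://api.groq.com/openai/v1/chat/completions"


def build_chat_request(prompt: str,
                       model: str = "llama3-70b-8192",  # assumed model ID
                       temperature: float = 0.5,
                       max_tokens: int = 1024,
                       stream: bool = False,
                       json_mode: bool = False) -> dict:
    """Mirror the playground controls as a chat completions request body."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
        "stream": stream,
    }
    if json_mode:
        # JSON mode: ask the API to return a JSON object only.
        body["response_format"] = {"type": "json_object"}
    return body


request = build_chat_request("List three uses of an LPU as JSON.", json_mode=True)
print(json.dumps(request, indent=2))
```

POSTing this body to `GROQ_CHAT_URL` with any HTTP client, or passing the same fields to Groq's official SDK, would return the completion.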

Only 402 ms for inference, at a rate of 901 tokens/s

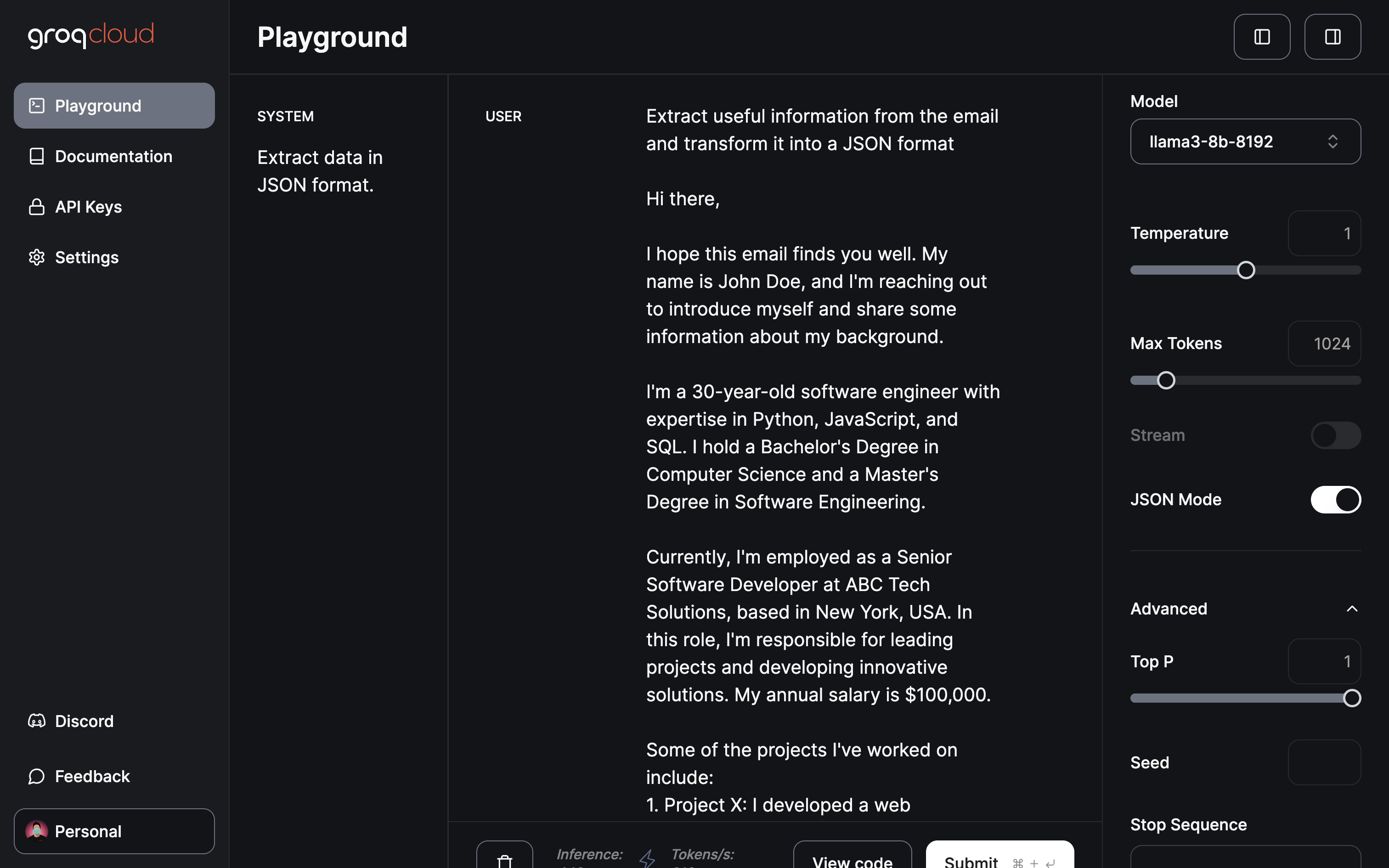
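The 402 ms and 901 tokens/s figures also indicate roughly how long the answer was and how little time each token took. This is a rough Python estimate, assuming the 901 tokens/s rate held for the whole 402 ms (the playground may measure throughput over the generation phase only, so treat the result as approximate). The function names are hypothetical.

```python
def estimated_tokens(tokens_per_second: float, elapsed_ms: float) -> int:
    """Approximate output length implied by a throughput and an elapsed time."""
    return round(tokens_per_second * elapsed_ms / 1000)


def ms_per_token(tokens_per_second: float) -> float:
    """Average time spent on each generated token, in milliseconds."""
    return 1000 / tokens_per_second


print(estimated_tokens(901, 402))  # 362
print(round(ms_per_token(901), 2))  # 1.11
```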

Next comes what I consider the most impactful application: data extraction and transformation.

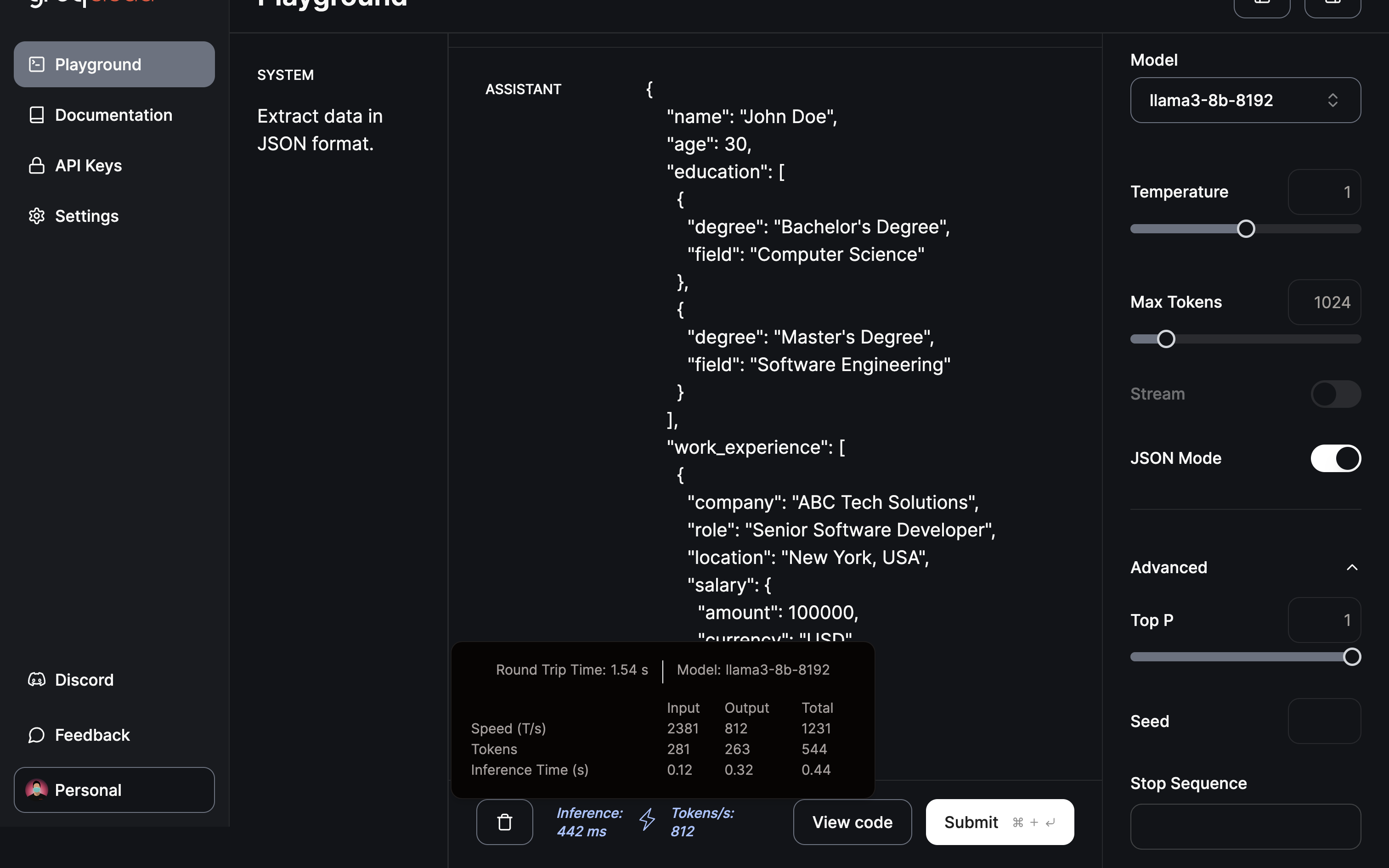

Asking the model to extract useful information and return it as JSON using JSON mode.

The extraction and transformation to JSON format was completed in less than half a second.
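JSON mode is designed to return valid JSON, but which fields appear still depends on the prompt, so it is worth validating the reply before handing it to downstream code. A minimal Python sketch: the `content` string and the invoice field names are hypothetical stand-ins for a real model response, and `parse_extraction` is an illustrative helper, not part of any Groq API.

```python
import json
from typing import Any, Dict, Iterable


def parse_extraction(content: str, required_keys: Iterable[str]) -> Dict[str, Any]:
    """Parse a JSON-mode reply and check that the expected fields are present."""
    data = json.loads(content)  # raises json.JSONDecodeError (a ValueError) if invalid
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [key for key in required_keys if key not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return data


# Hypothetical model reply to an invoice-extraction prompt.
content = '{"customer": "Acme Corp", "total": 1250.0, "currency": "USD"}'
invoice = parse_extraction(content, ["customer", "total", "currency"])
print(invoice["total"])  # 1250.0
```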

Conclusion

As demonstrated, Groq has emerged as a game-changer in the LLM landscape with its innovative LPU Inference Engine. The rapid transformation showcased here hints at the immense potential for accelerating AI applications. One can only speculate about what Groq will build next. Perhaps an Image Processing Unit could revolutionize image generation models and contribute to advances in AI video generation. It’s certainly an exciting future to anticipate.

Looking ahead, as LLM training becomes more efficient, having a personalized ChatGPT, fine-tuned on your data and running on your local device, becomes a tantalizing prospect. One platform that offers such capabilities is Cody, an intelligent AI assistant built to support businesses in a variety of ways. Much like ChatGPT, Cody can be trained on your business data, team, processes, and clients, using your own knowledge base.

With Cody, businesses can harness the power of AI to create a personalized and intelligent assistant that caters specifically to their needs, making it a promising addition to the world of AI-driven business solutions.