OpenAI’s highly anticipated DevDay event, together with pricing details that leaked around it, has left the AI community buzzing. Key highlights include the release of GPT-4 Turbo with a 128K context window, significant price reductions across several services, and the unveiling of the Assistants API. Let’s delve into the details and see how these developments are shaping the future of AI.

GPT-4 Turbo: More Power at a Lower Price

The headline-grabber of the event was undoubtedly GPT-4 Turbo. The new model offers a 128K-token context window, a significant leap from the 8K and 32K windows of its predecessor, GPT-4. By OpenAI’s own estimate, that is enough to fit more than 300 pages of text into a single prompt. The expanded window also erodes one of the key differentiators of Anthropic, the OpenAI rival founded by former OpenAI researchers, whose Claude 2 model offers a 100K-token context: GPT-4 Turbo now matches and slightly exceeds it.

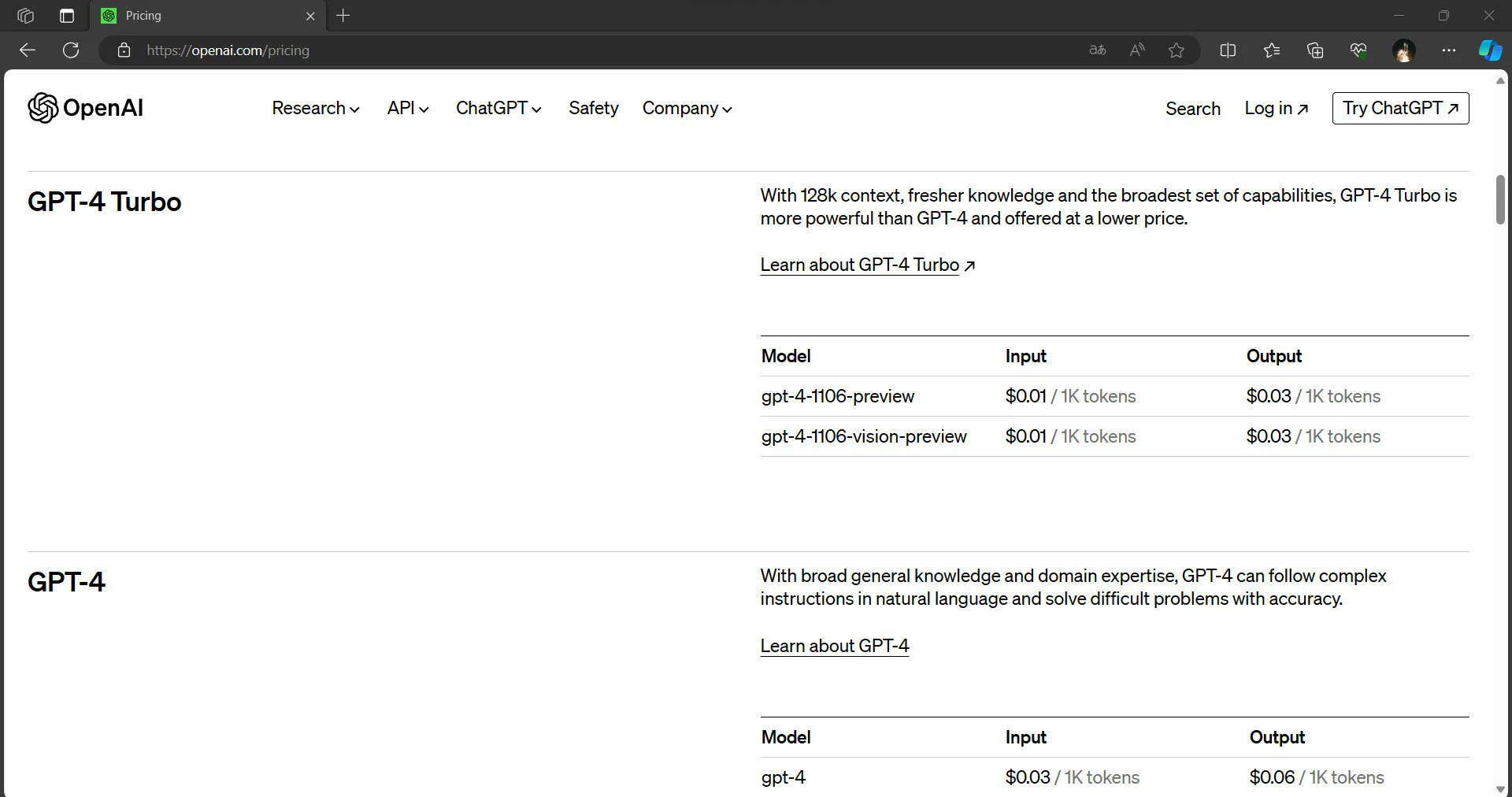
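Page counts for a context window depend heavily on how dense a page is. The sketch below is a back-of-the-envelope estimate only, assuming OpenAI’s published rule of thumb of roughly 0.75 English words per token; the words-per-page values are hypothetical inputs, not figures from the event.

```python
# Back-of-the-envelope estimate: how many pages of English text fit in a
# 128K-token context window? Assumes the rough rule of thumb of about
# 0.75 words per token; the words-per-page values are hypothetical.

CONTEXT_TOKENS = 128_000  # GPT-4 Turbo context window
WORDS_PER_TOKEN = 0.75    # rough average for English text


def pages_that_fit(context_tokens: int, words_per_page: int) -> float:
    """Estimate how many pages of text fit in a context window."""
    total_words = context_tokens * WORDS_PER_TOKEN
    return total_words / words_per_page


if __name__ == "__main__":
    # Denser pages mean fewer pages fit in the same window.
    for words_per_page in (250, 300, 400):
        pages = pages_that_fit(CONTEXT_TOKENS, words_per_page)
        print(f"{words_per_page} words/page -> about {pages:.0f} pages")
```

Under these assumptions, 300 words per page gives about 320 pages and 250 words per page about 384, which is why published figures range from "more than 300" to roughly 400 pages.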

But the news doesn’t stop there. Beyond the larger context window, GPT-4 Turbo delivers faster output and costs far less than GPT-4: input tokens are priced at one third of GPT-4’s rate and output tokens at half. This combination of greater capability and lower cost positions GPT-4 Turbo as a game-changer in the world of AI.

Price Reductions Across the Board

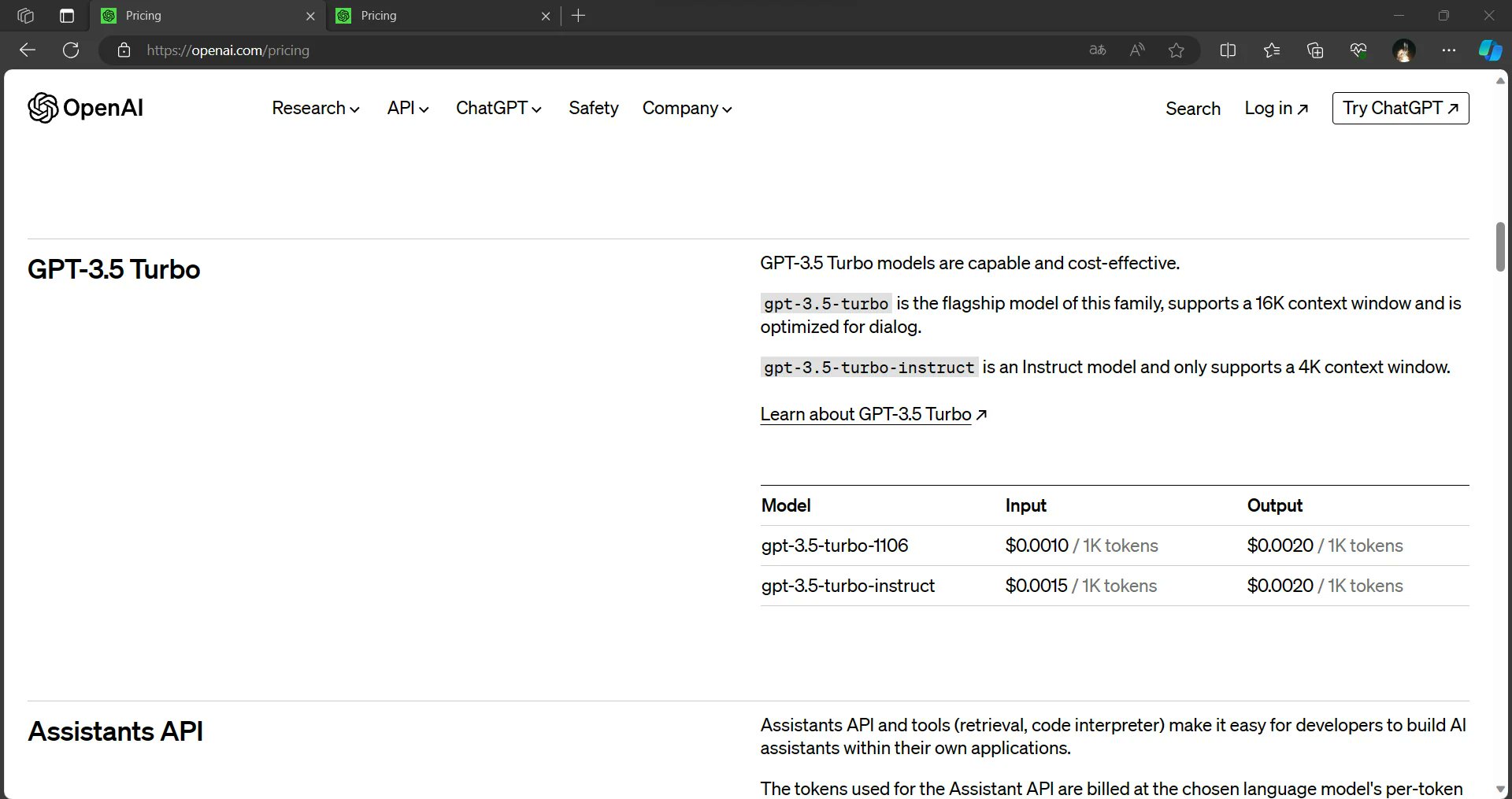

OpenAI is making AI more accessible and affordable than ever before. The leaked information suggests that the input cost for GPT-3.5 Turbo has been cut by 33%. In addition, GPT-3.5 Turbo will now default to a 16K context window, making longer prompts more cost-effective. These changes aim to democratize AI usage, allowing a broader audience to harness the power of these models.

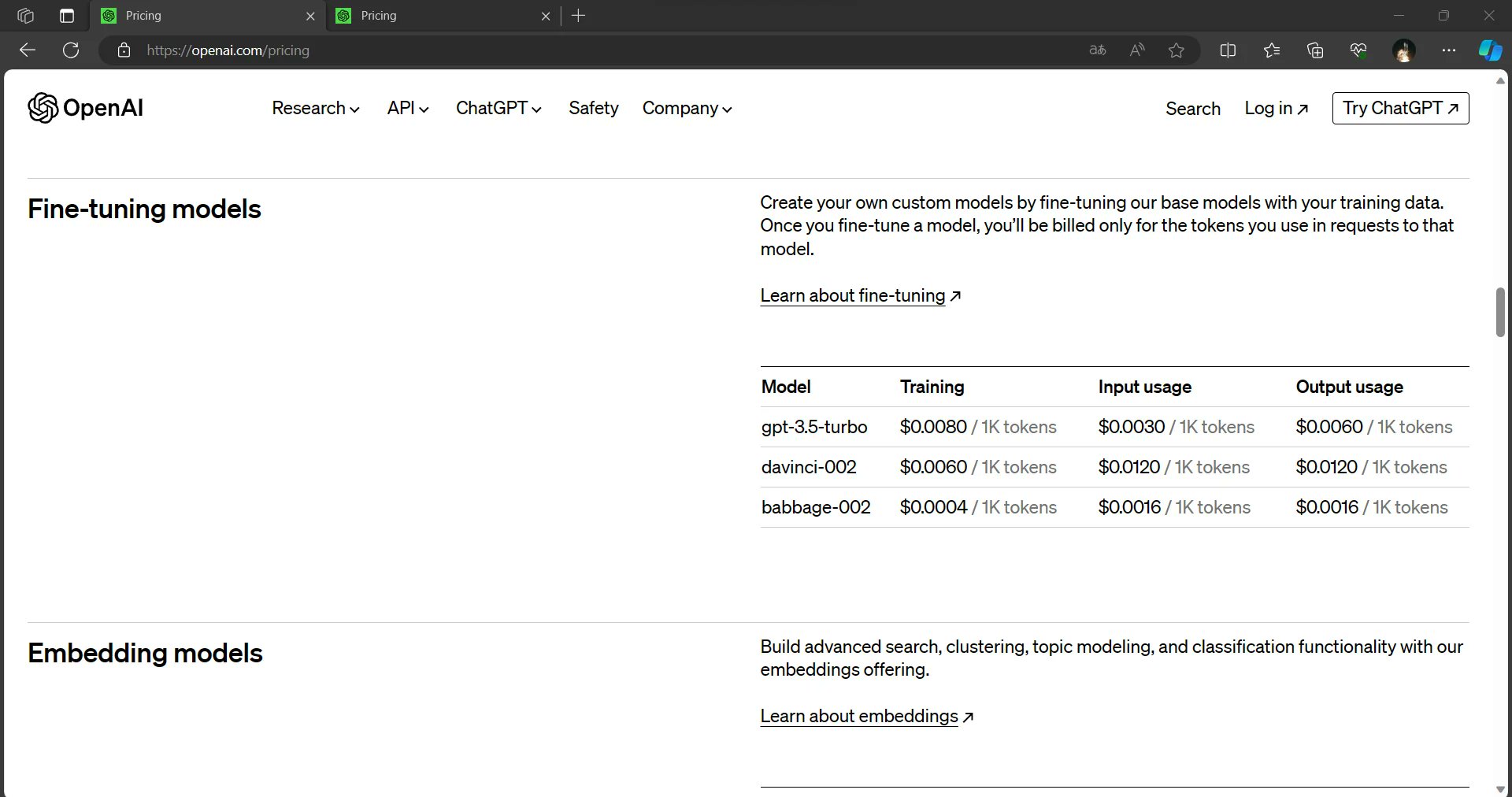

Fine-tuned models, a crucial resource for many AI applications, also benefit from substantial price reductions. Inference costs for fine-tuned models are reportedly cut by a whopping 75% for input and by about 60% for output. These reductions should let developers and organizations deploy AI-driven solutions more economically.

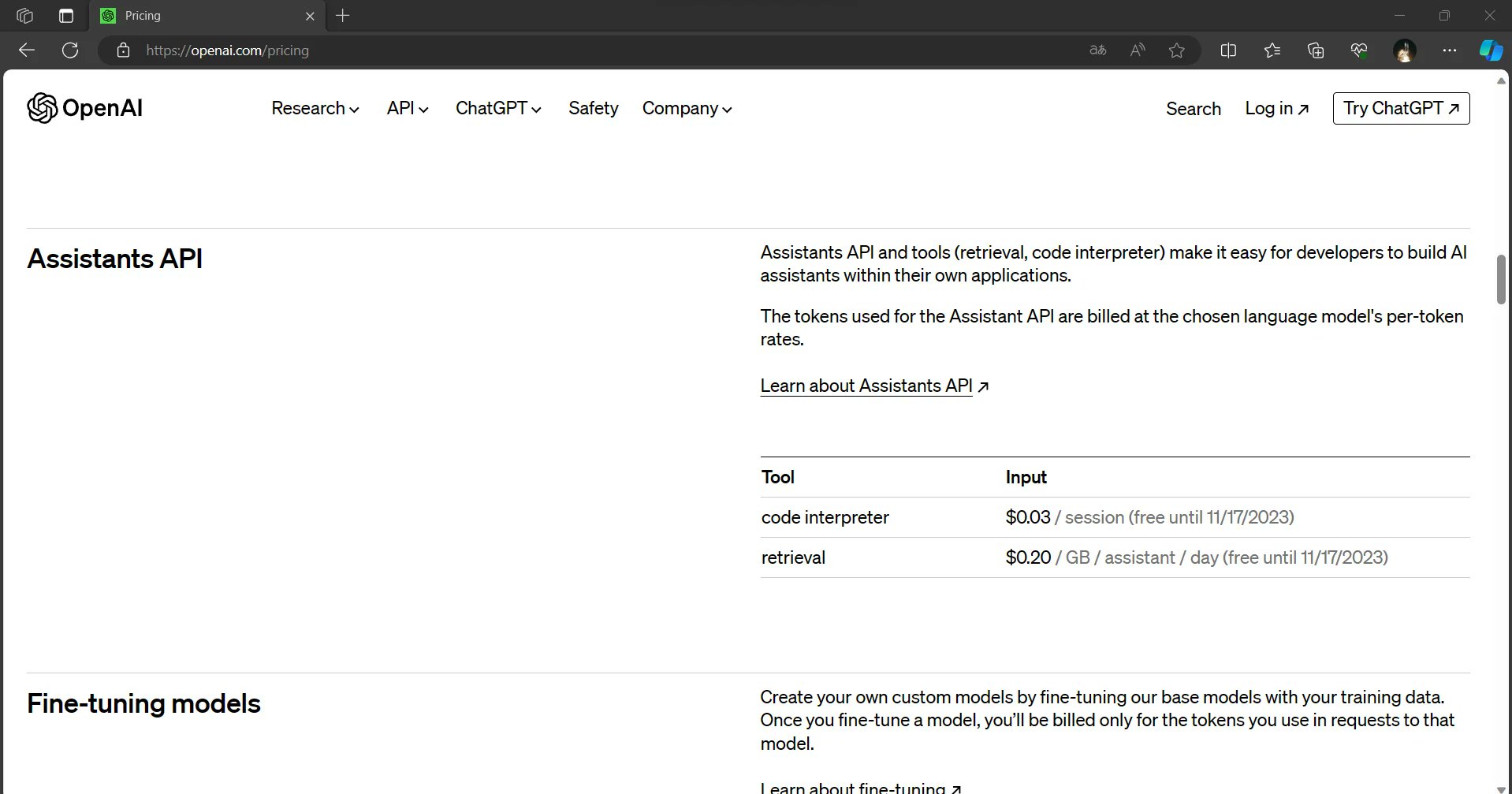
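Because input and output prices are cut by different amounts, the saving on a real request depends on its mix of input and output tokens. The sketch below illustrates this; the base prices are hypothetical placeholders in arbitrary units, and only the rounded cut percentages (75% input, 60% output) come from the reported figures above.

```python
# Estimate how much a single request gets cheaper after separate
# percentage cuts to input and output prices. Base prices are
# HYPOTHETICAL placeholders in arbitrary units per 1K tokens.


def reduced_price(old_price: float, cut_percent: float) -> float:
    """Return old_price after a cut of cut_percent percent."""
    if not 0 <= cut_percent <= 100:
        raise ValueError("cut_percent must be between 0 and 100")
    return old_price * (1 - cut_percent / 100)


def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Cost of one request given per-1K-token prices."""
    return ((input_tokens / 1000) * input_price_per_1k
            + (output_tokens / 1000) * output_price_per_1k)


def overall_saving_percent(input_tokens: int, output_tokens: int,
                           input_price: float, output_price: float,
                           input_cut: float, output_cut: float) -> float:
    """Percentage saved on a request once both price cuts apply."""
    old = request_cost(input_tokens, output_tokens, input_price, output_price)
    new = request_cost(input_tokens, output_tokens,
                       reduced_price(input_price, input_cut),
                       reduced_price(output_price, output_cut))
    return 100 * (1 - new / old)


if __name__ == "__main__":
    # Hypothetical base prices: 1.0 per 1K input and 1.0 per 1K output tokens.
    for tokens_in, tokens_out in ((10_000, 10_000), (100_000, 1_000)):
        saving = overall_saving_percent(tokens_in, tokens_out, 1.0, 1.0, 75, 60)
        print(f"{tokens_in} in / {tokens_out} out -> {saving:.1f}% cheaper")
```

The blended saving always lands between the two cuts, moving closer to the input cut for prompt-heavy workloads such as summarizing long documents.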

Assistants API: A New Frontier in AI

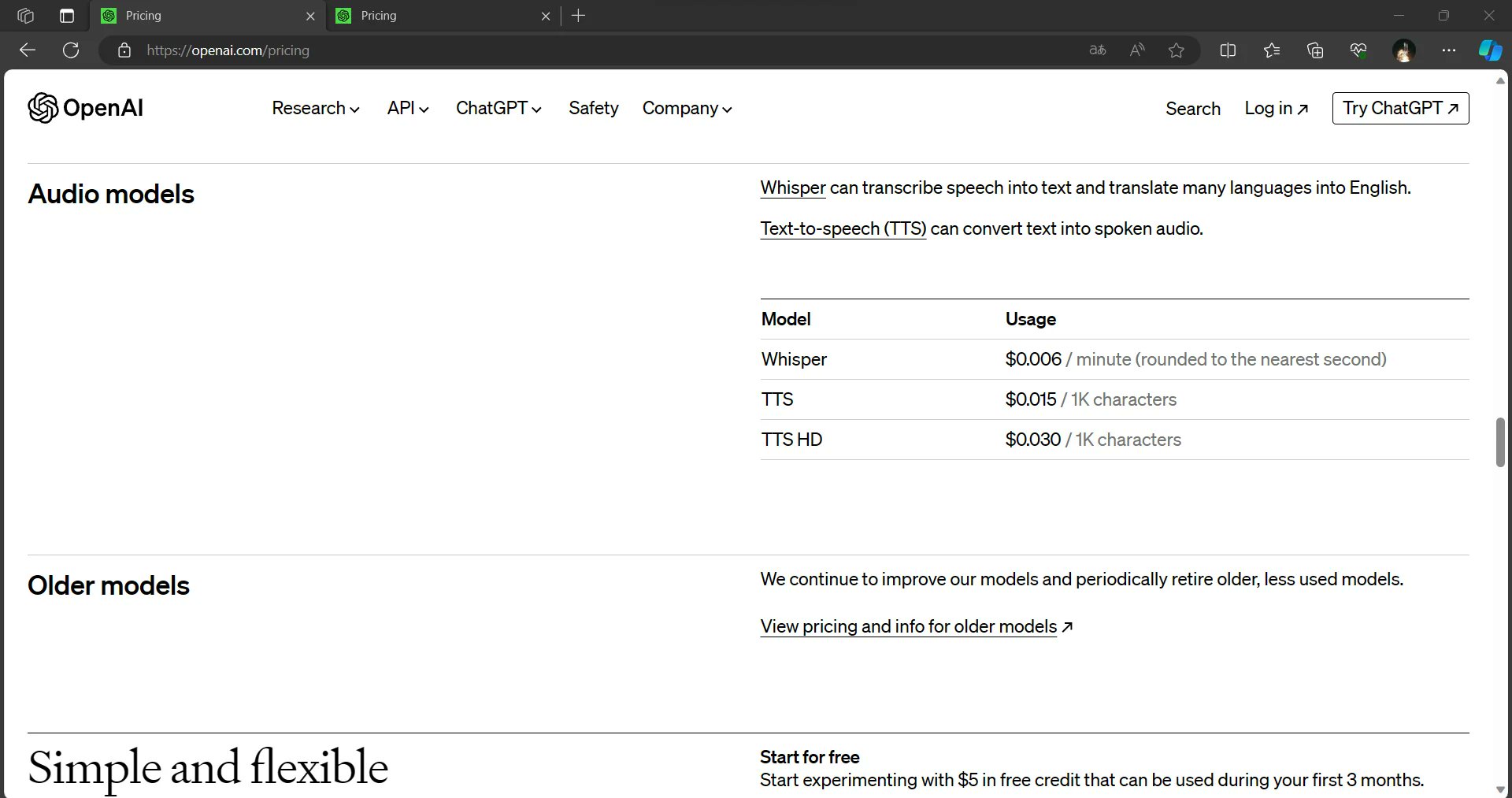

OpenAI’s DevDay also showcased the Assistants API, launched in beta, which gives developers Code Interpreter and retrieval capabilities through the API. This should make it easier to integrate AI into applications, enabling developers to build more powerful and dynamic solutions.
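To show roughly what "Code Interpreter and retrieval via an API" looks like, here is a minimal sketch that only builds (and never sends) a request body for creating an assistant, following the beta (v1) format documented at launch. The assistant name and instructions are hypothetical, and later API versions changed the tool set (for example, retrieval was replaced by file_search), so check the current documentation before relying on these field values.

```python
# Build (but do not send) the JSON body for creating an assistant with the
# Code Interpreter and retrieval tools, following the launch-era beta (v1)
# request format. Name and instructions are hypothetical; no network call.
import json

API_URL = "https://api.openai.com/v1/assistants"


def build_assistant_request(name: str, instructions: str,
                            model: str = "gpt-4-1106-preview") -> dict:
    """Return the request body for POST /v1/assistants (beta v1 shape)."""
    return {
        "name": name,
        "instructions": instructions,
        "model": model,
        # Tools enabled for the assistant: sandboxed code execution and
        # retrieval over uploaded files.
        "tools": [{"type": "code_interpreter"}, {"type": "retrieval"}],
    }


def build_headers(api_key: str) -> dict:
    """Headers for the beta endpoint; the OpenAI-Beta header opts in to v1."""
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
        "OpenAI-Beta": "assistants=v1",
    }


if __name__ == "__main__":
    body = build_assistant_request("Data helper", "Analyze the uploaded CSV files.")
    print(f"POST {API_URL}")
    print(json.dumps(body, indent=2))
```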

Dall-E 3 and Dall-E 3 HD: Expanding Creative Horizons

The event also introduced Dall-E 3 and Dall-E 3 HD. While these models promise to push the boundaries of creative AI, they are priced higher than Dall-E 2. For users seeking cutting-edge image generation, however, the enhanced capabilities may justify the higher cost.

The Power of 128K Context

To put it simply, GPT-4 Turbo’s 128K context window allows it to process an enormous amount of information in a single request. For comparison, the original GPT-3 had a context window of 2,048 tokens, and GPT-4 launched with 8,192 tokens (plus a 32K variant). Tokens are chunks of text, often whole words or pieces of words; in English, a token averages roughly four characters. GPT-4 Turbo’s window is therefore about 62 times larger than GPT-3’s and about 16 times larger than standard GPT-4’s, making it a true behemoth among AI language models.
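The size comparison depends on which earlier model is used as the baseline. This short sketch computes the ratio against several published context sizes: GPT-2 (1,024 tokens), GPT-3 (2,048), and the two GPT-4 variants (8,192 and 32,768).

```python
# Compare GPT-4 Turbo's 128,000-token window with earlier OpenAI models'
# published context sizes.

GPT4_TURBO_CONTEXT = 128_000

EARLIER_WINDOWS = {
    "GPT-2": 1_024,
    "GPT-3": 2_048,
    "GPT-4 (8K)": 8_192,
    "GPT-4 (32K)": 32_768,
}


def times_larger(window_tokens: int) -> float:
    """How many times larger GPT-4 Turbo's window is than window_tokens."""
    return GPT4_TURBO_CONTEXT / window_tokens


if __name__ == "__main__":
    for model, window in EARLIER_WINDOWS.items():
        print(f"{model}: {window:,} tokens -> {times_larger(window):.1f}x")
```

A 1,024-token baseline (GPT-2’s window) gives exactly 125x, while GPT-3’s 2,048 tokens gives 62.5x and the 8K GPT-4 about 15.6x.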

Practical Implications

The introduction of GPT-4 Turbo with its 128K context window is a remarkable step forward in the field of AI. Its ability to process and understand vast amounts of information has the potential to revolutionize how we interact with AI systems, conduct research, create content, and more. As developers and researchers explore the possibilities of this powerful tool, we can expect to see innovative applications that harness the full potential of GPT-4 Turbo’s capabilities, unlocking new horizons in artificial intelligence.

Comprehensive Understanding

With a 128K context, GPT-4 Turbo can read and analyze extensive documents, articles, or datasets in their entirety, as long as they fit within the window. This enables more comprehensive and accurate responses to complex questions, research tasks, or data analysis needs.
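Whether a given document actually fits is easy to check roughly before sending it. The sketch below assumes the common rule of thumb of about four characters per token for English (a real tokenizer will give different counts) and an assumed reply budget of 4,096 tokens, since the prompt and the response share the same window.

```python
# Rough check of whether a document plus a prompt fits in a 128K window,
# leaving room for the model's reply. Uses the ~4 characters per token rule
# of thumb for English, not a real tokenizer, so treat results as estimates.

CONTEXT_TOKENS = 128_000
RESERVED_OUTPUT_TOKENS = 4_096  # assumption: room kept for the response


def estimate_tokens(text: str) -> int:
    """Approximate token count: about 4 characters per token, rounded up."""
    return (len(text) + 3) // 4


def fits_in_context(document: str, prompt: str,
                    context_tokens: int = CONTEXT_TOKENS,
                    reserved_output: int = RESERVED_OUTPUT_TOKENS) -> bool:
    """True if prompt + document + reserved reply space fit in the window."""
    needed = estimate_tokens(prompt) + estimate_tokens(document) + reserved_output
    return needed <= context_tokens


if __name__ == "__main__":
    report = "word " * 80_000  # hypothetical 400,000-character document
    print(fits_in_context(report, "Summarize the key findings."))
```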

Contextual Continuity

Previous models often struggled to maintain context across long documents, because anything beyond their smaller windows had to be truncated or split up, leading to disjointed or irrelevant responses. GPT-4 Turbo’s 128K window lets it keep far longer passages in view, resulting in more coherent and contextually relevant interactions.

Reducing Information Overload

In an era of information overload, GPT-4 Turbo’s ability to process large amounts of text in one go is especially valuable. It can sift through large documents or datasets, extract key insights, and produce succinct summaries, saving users time and effort.
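Some datasets are larger than even 128K tokens. A common workaround is map-reduce summarization: split the text into window-sized chunks, summarize each chunk, then summarize the summaries. The sketch below shows only the splitting step, assuming the same rough four-characters-per-token estimate; the model calls are left out, and in practice `max_tokens` would be set well below 128,000 to leave room for instructions and the reply.

```python
# Greedy chunking for map-reduce summarization: pack paragraphs into chunks
# that each stay under a token budget. Only the splitting step is shown; the
# model calls are left out. Token counts use the ~4 characters/token rule.


def estimate_tokens(text: str) -> int:
    """Approximate token count: about 4 characters per token, rounded up."""
    return (len(text) + 3) // 4


def chunk_paragraphs(paragraphs: list[str], max_tokens: int) -> list[str]:
    """Group consecutive paragraphs into chunks of at most max_tokens (estimated).

    A single paragraph larger than max_tokens becomes its own oversized chunk;
    separator tokens between paragraphs are not counted.
    """
    chunks: list[str] = []
    current: list[str] = []
    current_tokens = 0
    for para in paragraphs:
        para_tokens = estimate_tokens(para)
        # Start a new chunk if adding this paragraph would exceed the budget.
        if current and current_tokens + para_tokens > max_tokens:
            chunks.append("\n\n".join(current))
            current, current_tokens = [], 0
        current.append(para)
        current_tokens += para_tokens
    if current:
        chunks.append("\n\n".join(current))
    return chunks


if __name__ == "__main__":
    paragraphs = ["a" * 40, "b" * 40, "c" * 40]  # about 10 tokens each
    for i, chunk in enumerate(chunk_paragraphs(paragraphs, max_tokens=20)):
        print(i, len(chunk.split("\n\n")), "paragraph(s)")
```

Greedy packing keeps paragraphs intact and in order, which matters when each chunk is summarized independently.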

Advanced Research and Writing

Researchers, writers, and content creators can benefit significantly from GPT-4 Turbo’s 128K context. It can assist in drafting in-depth research papers, articles, and reports while drawing on far more source material at once.

Enhanced Language Translation

Language translation tasks can benefit from the broader context as well. GPT-4 Turbo can better understand the nuances of languages, idiomatic expressions, and cultural context, leading to more accurate translations.

Challenges and Considerations

While GPT-4 Turbo’s 128K context is undoubtedly a big step forward, it also presents challenges. Processing very long contexts requires significant computational resources, and because API usage is billed per token, filling the window makes each request slower and more expensive, which may limit accessibility for some users. Additionally, ethical considerations around data privacy and content generation need to be addressed as AI models become more powerful.

More on its Way for GPT-4?

OpenAI’s DevDay event and the pricing leaks around it delivered a wealth of updates that are set to shape the AI landscape. GPT-4 Turbo’s 128K context window, faster output, and reduced pricing make it a standout offering. The price reductions for input, output, and fine-tuned models are set to democratize AI usage, making it accessible to a broader audience. The new Assistants API and Dall-E 3 models further highlight OpenAI’s commitment to innovation and to advancing the field of artificial intelligence.

As these developments unfold, it’s clear that OpenAI is determined to empower developers, businesses, and creative minds with state-of-the-art AI tools and services. The future of AI is looking brighter and more accessible than ever before.
