How Does Cody Generate Responses Using Your Documents?

When you first start using Cody, you may feel disappointed when it doesn't generate the responses you expect. In this short blog, we won't go deep into how you should use Cody. Instead, we'll give you a rough idea of how Cody uses your documents to generate responses, so that you can better understand the generation process and experiment with it.

Two factors mainly affect how Cody generates responses from your documents:

- Chunking

- Context Window

Chunking and the context window are interrelated. A simple analogy compares response generation with cooking. Chunks are the individual pieces of vegetables that you cut, and the context window is the size of the cooking pot. Cutting the vegetables into well-sized pieces improves the overall taste, and a larger pot lets you add more pieces.

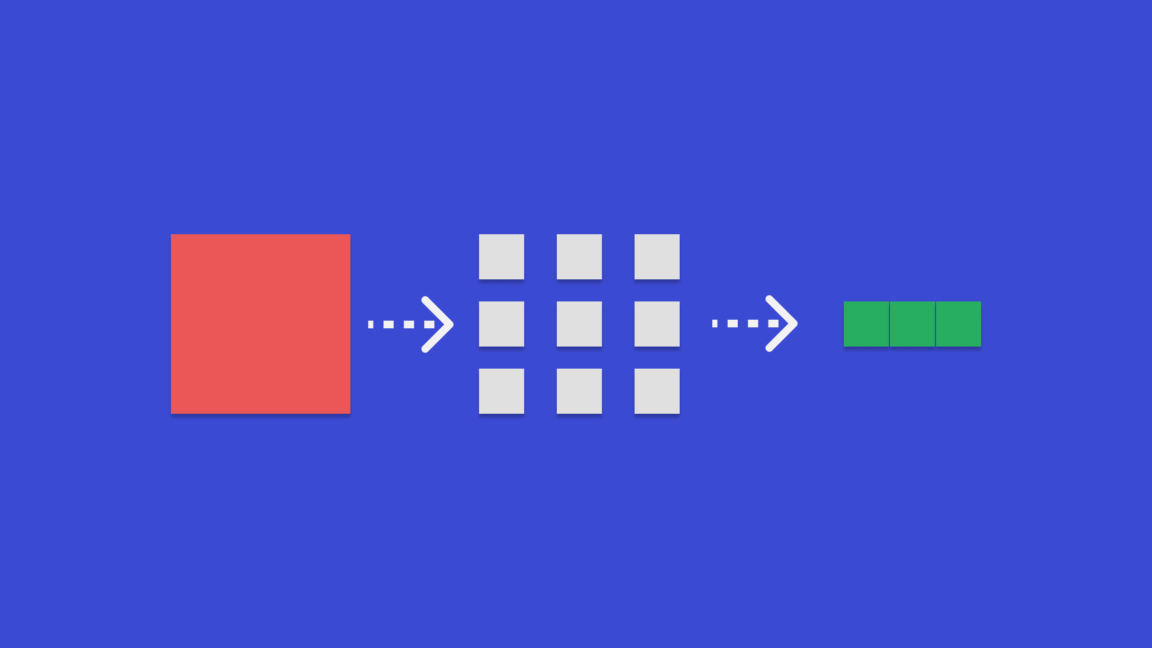

What is Chunking?

In simple terms, chunking is breaking content down into manageable pieces for efficient use of memory. If you have read our other blogs, you may know that models such as GPT are resource-intensive and can only process a limited amount of text at once. Chunking is one of several processes we use to work within that context window limit.

Chunking happens after you upload your documents to Cody. It splits each document into multiple chunks, each containing relevant surrounding context. Each chunk is then converted into a numerical representation, called an embedding, which lets Cody compare chunks by meaning. Finding the optimal chunk size matters: a smaller chunk carries less surrounding context, which reduces relevance, while a larger chunk introduces more noise. Cody's chunking algorithm dynamically adjusts the chunk size based on the token distribution set by the user.
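To make the chunk-size trade-off concrete, here is a minimal sketch of fixed-size chunking with overlap in Python. It is not Cody's algorithm, which adjusts chunk size dynamically. The function name `chunk_words`, its default sizes, and the use of whitespace-separated words as a stand-in for model tokens are all assumptions for illustration. The overlap is what lets each chunk keep a little of its surrounding context.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into overlapping chunks of at most chunk_size words.

    Hypothetical helper: words stand in for tokens here; a real pipeline
    would count model tokens instead.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap  # how far each new chunk advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks
```

For example, splitting the ten words `w0 … w9` with `chunk_size=4` and `overlap=1` yields `["w0 w1 w2 w3", "w3 w4 w5 w6", "w6 w7 w8 w9"]`. Shrinking `chunk_size` gives each chunk less context; growing it pulls in more unrelated text, which is the noise mentioned above.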

How Does the Context Window Affect Bot Responses?

Many factors, such as the personality prompt and the relevance score, influence the quality of bot responses, and the model's context window plays a significant role too. The context window is the amount of text, measured in tokens, that an LLM (large language model) can process in a single call. Because Cody generates answers with OpenAI models using embeddings and context injection (inserting relevant chunks into the prompt), a larger context window lets the model take in more data with each query.

💡 Each Query (≤ Context Window) = Bot Personality + Knowledge Chunks + History + User Input + Response

Context windows of different models (word counts are rough estimates for English text):

- GPT-3.5: 4,096 tokens (≈3,000 words)

- GPT-3.5 16K: 16,385 tokens (≈12,000 words)

- GPT-4: 8,192 tokens (≈6,000 words)

A larger context window leaves more room for every component of the query: personality, chunks, history, input, and response. This extra room can help the bot generate responses that are more relevant, coherent, and creative.

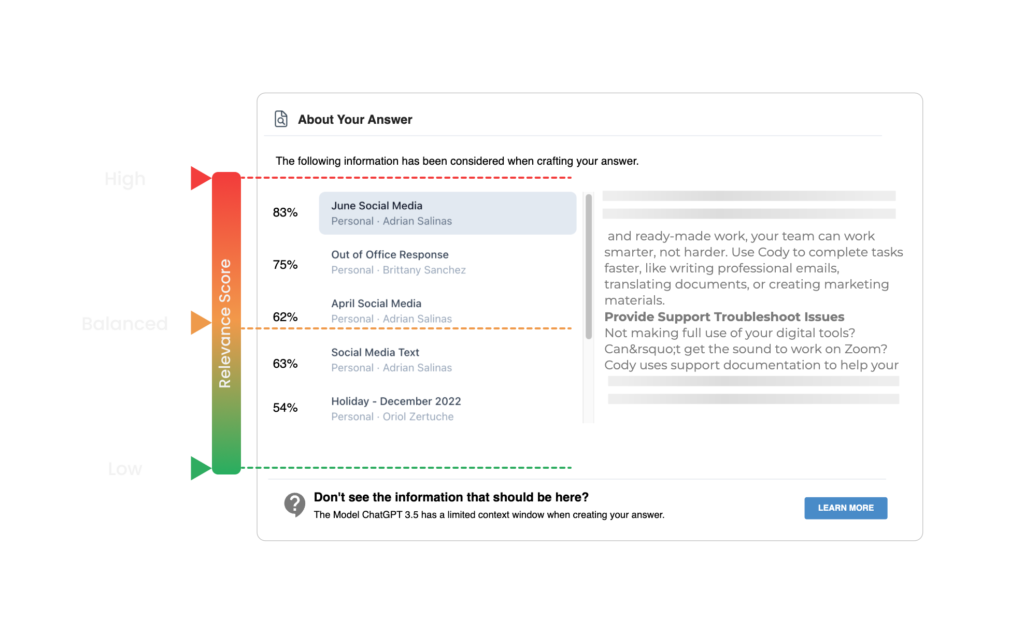

Cody's latest addition lets users check document citations by clicking the document name at the end of a response. These citations correspond to the chunks retrieved through semantic search. Which chunks Cody uses as context depends on the relevance score set by the user: with a high relevance score, Cody uses only the chunks that exceed a predefined threshold as context for generating the answer.
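Semantic search typically compares the embedding of the user's question with the embedding of each chunk. The sketch below uses cosine similarity, a common choice, but we are not claiming it is the exact measure Cody uses. The `retrieve` function, the chunk names, and the toy two-dimensional vectors are hypothetical; real embeddings have hundreds or thousands of dimensions. It keeps only chunks whose score reaches the threshold and ranks them best first.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def retrieve(query_vec: list[float], chunk_vecs: dict[str, list[float]],
             threshold: float) -> list[tuple[str, float]]:
    """Return (chunk_id, score) pairs scoring at or above threshold, best first."""
    scored = [(cid, cosine_similarity(query_vec, vec)) for cid, vec in chunk_vecs.items()]
    kept = [(cid, score) for cid, score in scored if score >= threshold]
    return sorted(kept, key=lambda pair: pair[1], reverse=True)
```

With a query vector of `[1.0, 0.0]` and chunks `refund-policy` at `[0.9, 0.1]` (score ≈ 0.99), `shipping` at `[0.6, 0.8]` (score 0.6), and `careers` at `[0.0, 1.0]` (score 0), a threshold of 0.9 keeps only `refund-policy`, while lowering it to 0.5 also admits `shipping`. This mirrors why a lower relevance setting lets more, but less closely matching, chunks into the context.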

Example

Assuming a predefined threshold of 90% for a high relevance score, Cody discards any chunk with a relevance score below 90%. We recommend that new users start with a lower relevance setting (low or balanced), especially when using uploaded documents (PDFs, PowerPoint, Word, etc.) or websites. Uploaded documents and websites can run into formatting and readability issues during pre-processing, which can lower their relevance scores. Formatting your content in our built-in text editor instead of uploading raw documents gives the highest accuracy and trust score.

If you found this blog interesting and want to dig deeper into context windows and chunking, we highly recommend reading this blog by Kristian from All About AI. For more resources, check out our Help Center and join our Discord community.