Hugging Face has quickly become a go-to platform in the machine learning community, boasting an extensive suite of tools and models for NLP, computer vision, and beyond. One of its most popular offerings is Hugging Face Spaces, a collaborative platform where developers can share machine learning applications and demos. These “spaces” allow users to interact with models directly, offering a hands-on experience with cutting-edge AI technology.

In this article, we will highlight five standout Hugging Face Spaces that you should check out in 2024. Each of these spaces provides a unique tool or generator that leverages the immense power of today’s AI models. Let’s delve into the details.

EpicrealismXL

EpicrealismXL is a text-to-image generator built on the Stable Diffusion epicrealism-xl model. You give the Space a prompt, a negative prompt (things you want kept out of the image), and a number of sampling steps, and it generates an image from them. Whether you are an artist seeking inspiration or a marketer looking for visuals, EpicrealismXL aims for high-quality, photorealistic results.
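For readers who would rather script a Space than click through its UI, the three inputs described above can be bundled and sent with a `gradio_client`-style client. This is only a minimal sketch: the `ImageRequest` class, the default step count, the argument order, and the `/predict` endpoint name are all assumptions, so check the Space's "Use via API" panel for the real signature.

```python
from dataclasses import dataclass


@dataclass
class ImageRequest:
    """The three inputs the article lists (hypothetical container)."""
    prompt: str
    negative_prompt: str = ""
    steps: int = 25  # assumed default, not taken from the Space

    def as_args(self) -> tuple:
        # Basic validation before anything is sent to the Space.
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        if self.steps < 1:
            raise ValueError("steps must be at least 1")
        return (self.prompt, self.negative_prompt, self.steps)


def generate_image(client, request: ImageRequest, api_name: str = "/predict"):
    """Call any object exposing a gradio_client-style predict() method.

    The argument order and api_name are assumptions; a real Space may
    expect different inputs.
    """
    return client.predict(*request.as_args(), api_name=api_name)


# Real usage would look roughly like this (needs `pip install gradio_client`
# and network access; the Space ID below is a placeholder):
#   from gradio_client import Client
#   client = Client("owner/space-name")
#   result = generate_image(client, ImageRequest("a misty harbor at dawn", "blurry", 30))
```

Keeping the client as a parameter means the same function can be exercised with a stub before pointing it at a live Space.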

Podcastify

Podcastify changes the way you consume written content by converting articles into audio podcasts. Simply paste the URL of the article you wish to convert into the textbox, click “Podcastify,” and voilà! You have a freshly generated podcast that you can listen to or view in the conversation tab. This tool is perfect for multitaskers, people who prefer auditory learning, and anyone on the go.

Dalle-3-xl-lora-v2

Another text-to-image generator, dalle-3-xl-lora-v2, takes its name from OpenAI's well-known DALL-E 3 model. Judging by the name, it is a LoRA fine-tune aimed at DALL-E 3-style output rather than DALL-E 3 itself, whose weights are not publicly available. Similar in function to EpicrealismXL, this tool generates images from textual prompts. The DALL-E 3 style is known for its versatility and creativity, which makes this Space a good choice for complex and unusual visuals.

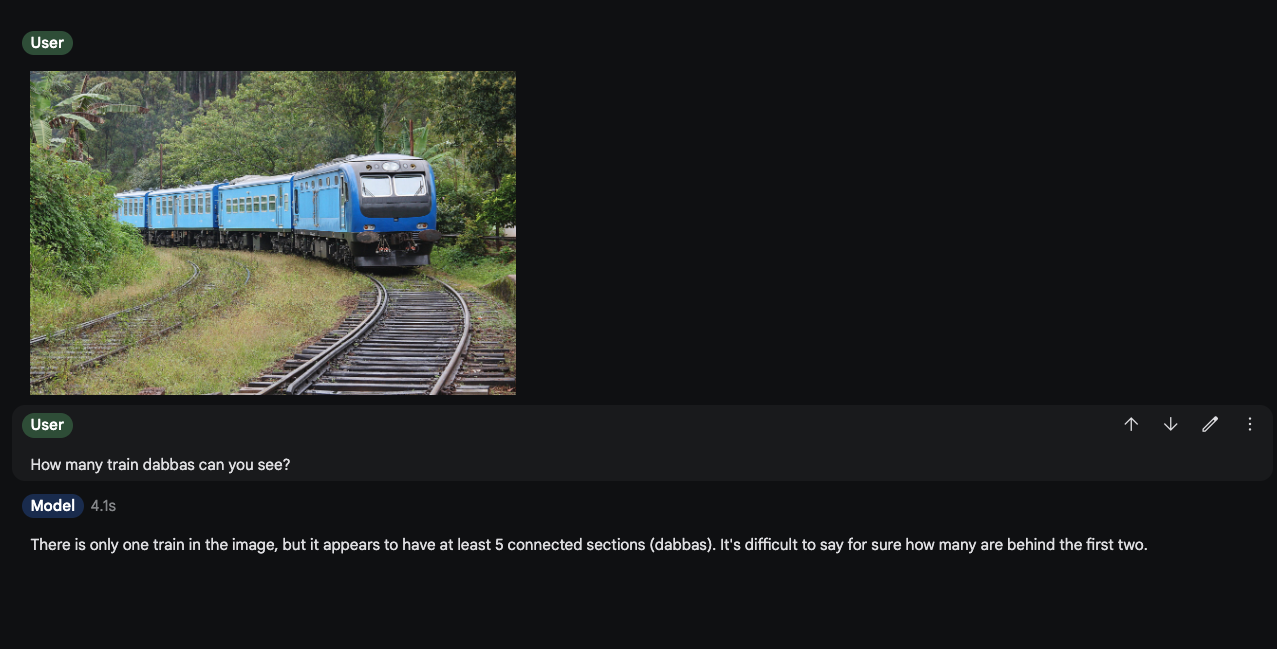

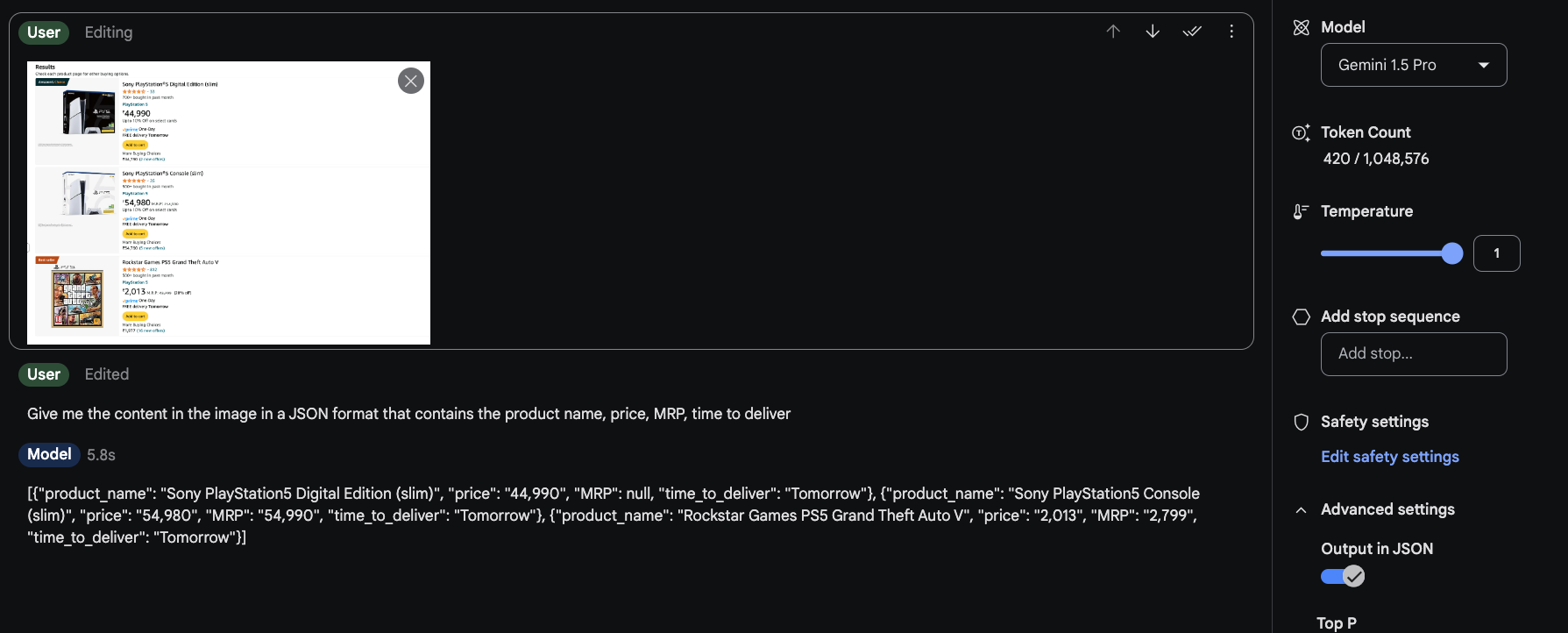

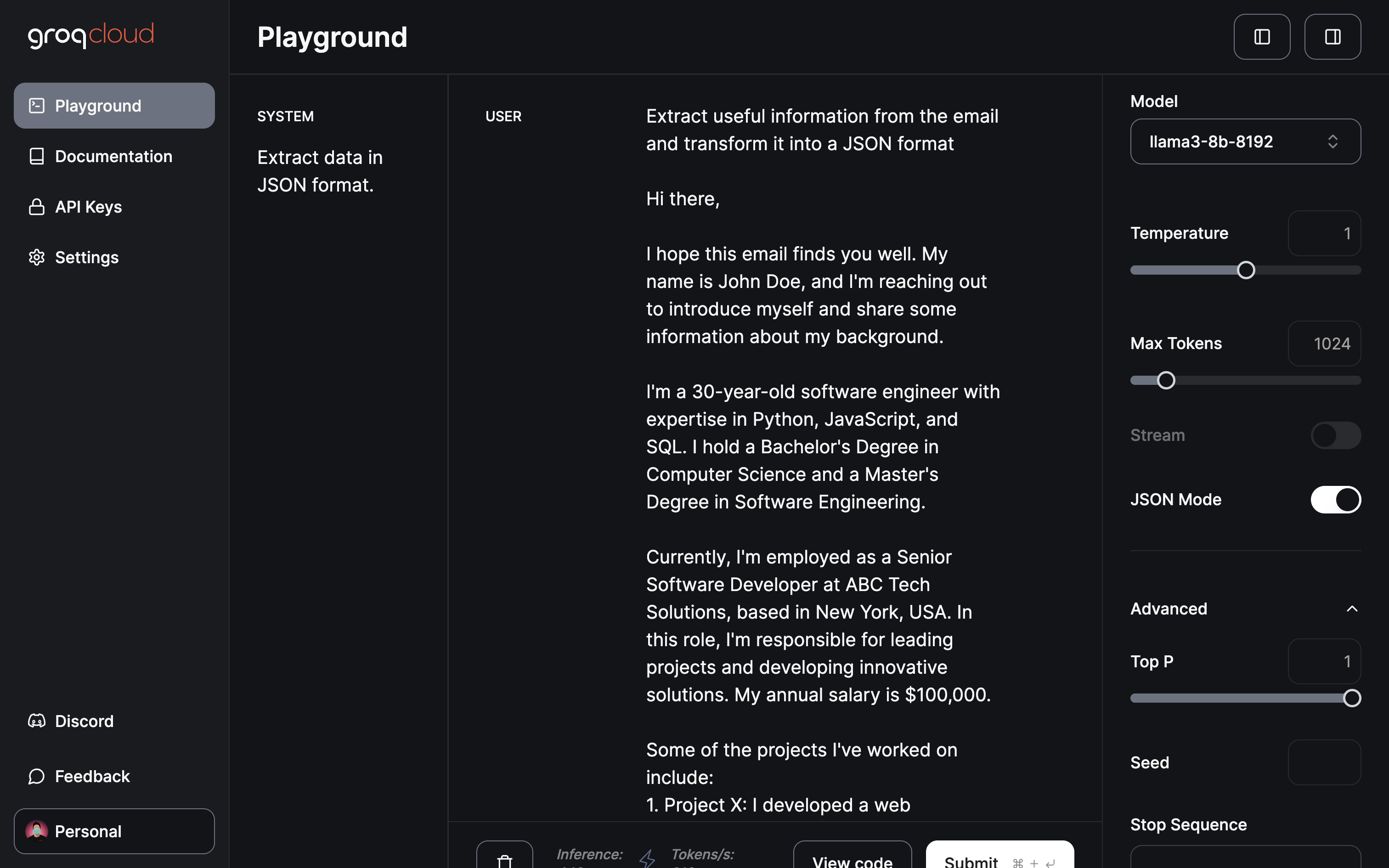

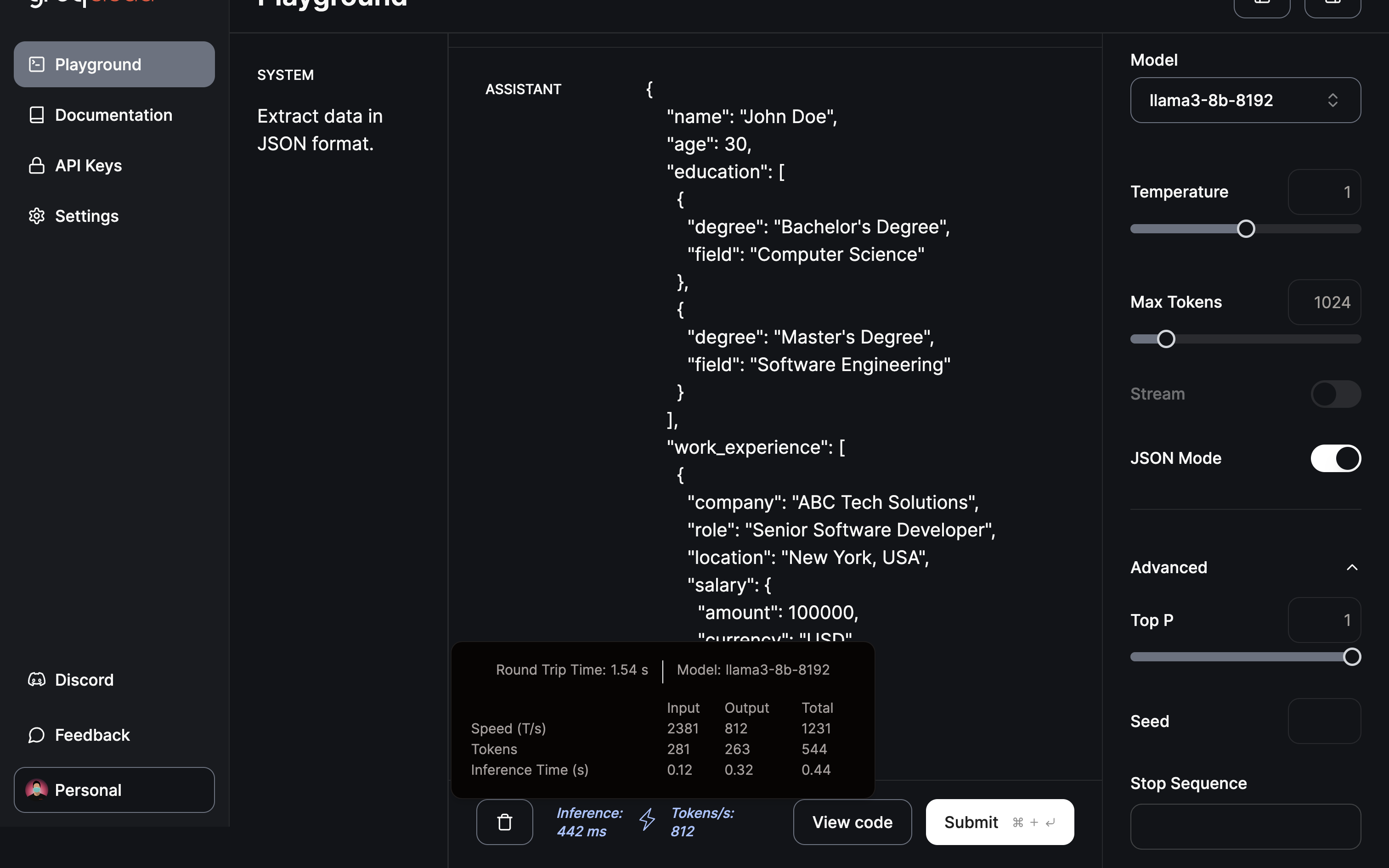

AI Web Scraper

AI Web Scraper puts web scraping at your fingertips without requiring any coding skills. This no-code tool lets you scrape and summarize web content using AI models hosted on the Hugging Face Hub. Enter a prompt describing what you want and the source URL, and the tool returns the extracted information in JSON format. It is especially useful for journalists, researchers, and content creators.
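Because the output is JSON, it slots easily into a script once you copy it out of the Space. The sketch below is one hedged way to check the two inputs and load the result; the function names and the `prompt` / `source_url` keys are hypothetical and not taken from the Space itself.

```python
import json
from urllib.parse import urlparse


def build_scrape_request(prompt: str, source_url: str) -> dict:
    """Validate the two inputs the article mentions (key names are assumed)."""
    parsed = urlparse(source_url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"not a valid http(s) URL: {source_url!r}")
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return {"prompt": prompt.strip(), "source_url": source_url}


def parse_scrape_result(raw: str) -> dict:
    """Load the JSON text returned by the tool and insist on a JSON object."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    return data


# Example with made-up data standing in for the Space's output:
request = build_scrape_request("List the article titles", "https://example.com/blog")
result = parse_scrape_result('{"titles": ["First post", "Second post"]}')
for title in result["titles"]:
    print(title)
```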

AI QR Code Generator

The AI QR Code Generator takes your QR codes to a whole new artistic level. By using the QR code image as both the initial image and the control image, this tool generates QR codes that blend naturally with the scene described in your prompt. Adjust the strength and conditioning scale parameters to balance how closely the result sticks to the original QR pattern against how much it follows your prompt, so the codes stay functional while looking good.
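To make the "initial and control image" idea concrete, here is a small sketch of how those inputs might be assembled for a ControlNet image-to-image pipeline. It assumes the Space is built on something like the `StableDiffusionControlNetImg2ImgPipeline` from the `diffusers` library, which the article does not confirm; the helper name and the default values are illustrative only.

```python
def build_qr_art_kwargs(qr_image, prompt, strength=0.9, conditioning_scale=1.1,
                        negative_prompt="", steps=30):
    """Assemble call arguments for a ControlNet img2img pipeline.

    The same QR image is passed twice: once as the starting image and once
    as the ControlNet conditioning image. Defaults are assumptions.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    if conditioning_scale < 0:
        raise ValueError("conditioning_scale must not be negative")
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "image": qr_image,            # initial image for img2img
        "control_image": qr_image,    # conditioning image for ControlNet
        "strength": strength,         # how far to move away from the initial image
        "controlnet_conditioning_scale": conditioning_scale,  # weight of the QR pattern
        "num_inference_steps": steps,
    }


# With a loaded pipeline `pipe` and a PIL image `qr`, usage would be roughly:
#   image = pipe(**build_qr_art_kwargs(qr, "a mossy forest path")).images[0]
```

Whatever the settings, scan the generated code with a phone before using it anywhere, since a pattern that is too heavily stylized can stop scanning reliably.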

Conclusion

Hugging Face Spaces are a testament to the rapid advancements in machine learning and AI. Whether you’re an artist, a content creator, a marketer, or just an AI enthusiast, these top five spaces offer various tools and generators that can enhance your workflow and ignite your creativity. Be sure to explore these spaces to stay ahead of the curve in 2024.
