OpenAI recently concluded a 12-day event that has set the AI world abuzz. The highlight was the introduction of the OpenAI o3 models, a new family of AI reasoning models that promises to reshape the landscape of artificial intelligence.

At the forefront of OpenAI's o-series of reasoning models are two models: o1 and o3. They represent a significant leap forward from their predecessor, GPT-4, showcasing enhanced intelligence, speed, and multimodal capabilities. The o1 model, which is now available to Plus and Pro subscribers, processes requests 50% faster and makes 34% fewer major mistakes than its preview version.

However, it’s the o3 model that truly pushes the boundaries of AI reasoning. With its advanced cognitive capabilities and complex problem-solving skills, o3 represents a significant stride towards Artificial General Intelligence (AGI). This model has demonstrated unprecedented performance in coding, mathematics, and scientific reasoning, setting new benchmarks in the field.

The o-series marks a pivotal moment in AI development, not just for its impressive capabilities, but also for its focus on safety and alignment with human values. As we delve deeper into the specifics of these models, it becomes clear that OpenAI is not just advancing AI technology, but also prioritizing responsible and ethical AI development.

OpenAI o3 vs o1: A Comparative Analysis

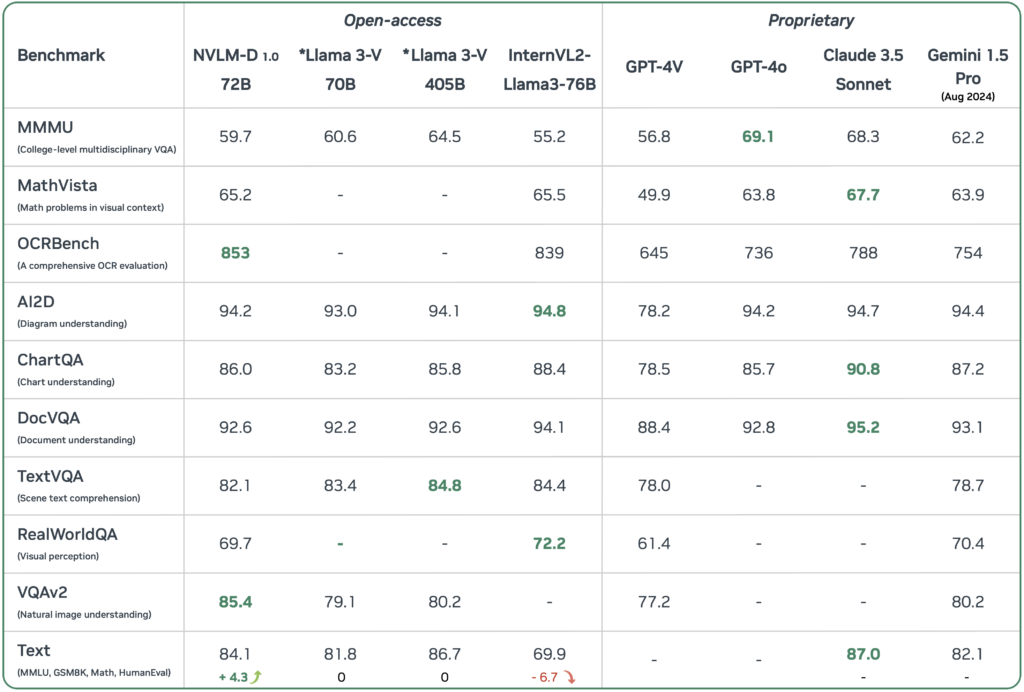

While both o1 and o3 represent significant advancements in AI reasoning, they differ considerably in their capabilities, performance, and cost-efficiency. To better understand these differences, let’s examine a comparative analysis of these models.

| Metric | o3 | o1 Preview |
|---|---|---|
| Codeforces Score | 2727 | 1891 |
| SWE-bench Score | 71.7% | 48.9% |
| AIME 2024 Score | 96.7% | N/A |
| GPQA Diamond Score | 87.7% | 78% |
| Context Window | 256K tokens | 128K tokens |
| Max Output Tokens | 100K | 32K |
| Estimated Cost per Task | $1,000 | $5 |

As the comparison shows, o3 significantly outperforms o1 Preview on every benchmark where both have a score. However, this performance comes at a substantial cost: the estimated $1,000 per task for o3 is 200 times the $5 per task for o1 Preview, while o1 Mini costs mere cents.
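To make the trade-off concrete, the short Python sketch below works through the arithmetic using only the figures from the comparison table above. The variable and function names are illustrative, and AIME 2024 is left out because the table lists no o1 Preview score for it.

```python
# Figures copied from the comparison table: (o3, o1 Preview).
benchmarks = {
    "Codeforces": (2727, 1891),    # rating points
    "SWE-bench": (71.7, 48.9),     # percent
    "GPQA Diamond": (87.7, 78.0),  # percent
}
cost_per_task = {"o3": 1000.0, "o1 Preview": 5.0}  # estimated USD per task


def absolute_gain(o3_score, o1_score):
    """Difference between the o3 and o1 Preview scores, rounded to one decimal."""
    return round(o3_score - o1_score, 1)


gains = {name: absolute_gain(*scores) for name, scores in benchmarks.items()}
cost_ratio = cost_per_task["o3"] / cost_per_task["o1 Preview"]

for name, gain in gains.items():
    print(f"{name}: +{gain}")
print(f"o3 costs about {cost_ratio:.0f}x more per task than o1 Preview")
```

On these numbers, o3 gains 836 Codeforces rating points and 22.8 percentage points on SWE-bench for roughly 200 times the estimated per-task cost.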

Given these differences, the choice between o3 and o1 largely depends on task complexity and budget constraints. o3 is best suited for complex coding, advanced mathematics, and scientific research tasks that require its superior reasoning capabilities. On the other hand, o1 Preview is more appropriate for detailed coding and legal analysis, while o1 Mini is ideal for quick, efficient coding tasks with basic reasoning requirements.
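One way to picture this decision is as a small lookup: pick the cheapest model whose reasoning tier covers the task and whose estimated cost fits the budget. The sketch below is only an illustration of that idea. The tier numbers, the `pick_model` helper, and the $0.05 placeholder for o1 Mini ("mere cents") are assumptions made for the example, not figures from OpenAI.

```python
from typing import Optional

# (name, estimated cost per task in USD, reasoning tier where 3 is the highest).
# The o3 and o1 Preview costs come from the article; the o1 Mini cost and all
# tier numbers are assumed placeholders for illustration.
MODELS = [
    ("o3", 1000.00, 3),
    ("o1 Preview", 5.00, 2),
    ("o1 Mini", 0.05, 1),
]


def pick_model(required_tier: int, budget_usd: float) -> Optional[str]:
    """Return the cheapest model that meets the tier and fits the budget."""
    candidates = [
        (cost, name)
        for name, cost, tier in MODELS
        if tier >= required_tier and cost <= budget_usd
    ]
    return min(candidates)[1] if candidates else None


print(pick_model(required_tier=1, budget_usd=10))    # quick coding task
print(pick_model(required_tier=2, budget_usd=10))    # detailed coding or legal analysis
print(pick_model(required_tier=3, budget_usd=2000))  # research-grade reasoning
```

When no model satisfies both constraints, for example a tier 3 task with a $100 budget, the helper returns `None` rather than silently downgrading.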

Source: OpenAI

Recognizing the need for a middle ground, OpenAI has introduced o3 Mini. This model aims to bridge the gap between the high-performance o3 and the more cost-efficient o1 Mini, offering a balance of advanced capabilities and reasonable computational costs. While specific details about o3 Mini are still emerging, it promises to provide a cost-effective solution for tasks that require more advanced reasoning than o1 Mini but don’t warrant the full computational power of o3.

Safety and Deliberative Alignment in OpenAI o3

As AI models like o1 and o3 grow increasingly powerful, ensuring their adherence to human values and safety protocols becomes paramount. OpenAI has pioneered a new safety paradigm called “deliberative alignment” to address these concerns.

- Deliberative alignment trains AI models to reference OpenAI’s safety policy during the inference phase.

- It relies on a chain-of-thought mechanism in which models internally deliberate on how to respond safely to prompts.

- It significantly improves their alignment with safety principles and reduces the likelihood of unsafe responses.

The implementation of deliberative alignment in o1 and o3 models has shown promising results. These models demonstrate an enhanced ability to answer safe questions while refusing unsafe ones, outperforming other advanced models in resisting common attempts to bypass safety measures.

To further ensure the safety and reliability of these models, OpenAI is conducting rigorous internal and external safety testing for o3 and o3 mini. External researchers have been invited to participate in this process, with applications open until January 10th. This collaborative approach underscores OpenAI’s commitment to developing AI that is not only powerful but also aligned with human values and ethical considerations.

Collaborations and Future Developments

Building on its commitment to safety and ethical AI development, OpenAI is actively engaging in collaborations and planning future advancements for its o-series models. A notable partnership has been established with the ARC Prize Foundation, focusing on developing and refining AI benchmarks.

OpenAI has outlined an ambitious roadmap for the o-series models. The company plans to launch o3 mini by the end of January, with the full o3 release following shortly after, contingent on feedback and safety testing results. These launches will introduce exciting new features, including API capabilities such as function calling and structured outputs, particularly beneficial for developers working on a wide range of applications.
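For developers unfamiliar with these two features, the sketch below builds a request body that combines a function-calling tool definition with a structured-output schema. It is a minimal illustration, not a confirmed o3 interface: the field layout follows the Chat Completions request format as commonly documented, the `o3-mini` model identifier is an assumption, and the `lookup_benchmark` tool and `benchmark_answer` schema are hypothetical. The code only assembles and prints JSON; it makes no network call.

```python
import json

# Hypothetical tool the model may choose to call; name and parameters are illustrative.
tools = [
    {
        "type": "function",
        "function": {
            "name": "lookup_benchmark",
            "description": "Return a model's score on a named benchmark.",
            "parameters": {
                "type": "object",
                "properties": {
                    "model": {"type": "string"},
                    "benchmark": {"type": "string"},
                },
                "required": ["model", "benchmark"],
                "additionalProperties": False,
            },
        },
    }
]

# Structured output: request a reply that conforms to a JSON Schema.
response_format = {
    "type": "json_schema",
    "json_schema": {
        "name": "benchmark_answer",
        "strict": True,
        "schema": {
            "type": "object",
            "properties": {
                "benchmark": {"type": "string"},
                "score": {"type": "number"},
            },
            "required": ["benchmark", "score"],
            "additionalProperties": False,
        },
    },
}

request_body = {
    "model": "o3-mini",  # assumed identifier; not confirmed by the article
    "messages": [{"role": "user", "content": "What did o3 score on GPQA Diamond?"}],
    "tools": tools,
    "response_format": response_format,
}

print(json.dumps(request_body, indent=2))
```

Function calling lets the model hand work off to application code, while a strict schema means the final answer can be parsed without defensive string handling. Check the current API reference before relying on any of these field names.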

In line with its collaborative approach, OpenAI is actively seeking user feedback and participation in testing processes. External researchers have been invited to apply for safety testing until January 10th, emphasizing the company’s commitment to thorough evaluation and refinement of its models. This open approach extends to the development of new features for the Pro tier, which will focus on compute-intensive tasks, further expanding the capabilities of the o-series.

By fostering these collaborations and maintaining an open dialogue with users and researchers, OpenAI is not only advancing its AI technology but also ensuring that these advancements align with broader societal needs and ethical considerations. This approach positions the o-series models at the forefront of responsible AI development, paving the way for transformative applications across various domains.

The Future for AI Reasoning

The introduction of OpenAI’s o-series models marks a significant milestone in the evolution of AI reasoning. With o3 demonstrating unprecedented performance across various benchmarks, including an 87.5% score on the ARC-AGI test, we are witnessing a leap towards more capable and sophisticated AI systems. However, these advancements underscore the critical importance of continued research and development in AI safety.

OpenAI envisions a future where AI reasoning not only pushes the boundaries of technological achievement but also contributes positively to society. The ongoing collaboration with external partners, such as the ARC Prize Foundation, and the emphasis on user feedback demonstrate OpenAI’s dedication to a collaborative and transparent approach to AI development.

As we stand on the brink of potentially transformative AI capabilities, the importance of active participation in the development process cannot be overstated. OpenAI continues to encourage researchers and users to engage in testing and provide feedback, ensuring that the evolution of AI reasoning aligns with broader societal needs and ethical considerations. This collaborative journey towards advanced AI reasoning holds the promise of unlocking new frontiers in problem-solving and innovation, shaping a future where AI and human intelligence work in harmony.