In 2025, competition among major technology companies to build the most capable AI systems has intensified. That rivalry is driving rapid progress in reasoning, problem solving, and natural interaction. Two models stand out among recent releases: Google’s Gemini 2.5 Pro and OpenAI’s GPT-4.5. In March 2025, Google introduced Gemini 2.5 Pro, which it calls its most intelligent model yet. It debuted at the top of the LMArena leaderboard, ahead of its competitors. What sets Gemini 2.5 Pro apart is that it reasons through a problem before responding, which improves its performance on complex tasks that require deep thinking.

OpenAI, for its part, released GPT-4.5 in February 2025 as its largest and most advanced chat model so far. The model is strong at recognizing patterns, making connections, and generating creative ideas. Early tests suggest that interacting with GPT-4.5 feels very natural, thanks to its broad knowledge and improved understanding of user intent. OpenAI emphasizes GPT-4.5’s gains from scaling unsupervised learning and its design for smooth collaboration with humans.

These AI systems are not just impressive technology; they are changing how businesses operate, speeding up scientific discoveries, and transforming creative projects. As AI becomes a normal part of daily life, models like Gemini 2.5 Pro and GPT-4.5 are expanding what we think is possible. With better reasoning skills, less chance of spreading false information, and mastery over complex problems, they are paving the way for AI systems that truly support human progress.

Understanding Gemini 2.5 Pro

On March 25, 2025, Google officially unveiled Gemini 2.5 Pro, described as its “most intelligent AI model” to date. The release marked a significant milestone in Google’s AI development, coming after several iterations of its 2.0 models. Google released an experimental version first, giving Gemini Advanced subscribers early access to test its capabilities.

What separates Gemini 2.5 Pro from its predecessors is its design as a “thinking model.” Unlike previous generations, which relied primarily on patterns learned during training, this model reasons through a problem step by step before responding, much as a person would. This represents a significant advancement in how AI systems process information and generate responses.

Key Features and Capabilities:

- Enhanced reasoning abilities – Capable of step-by-step problem solving across complex domains

- Expanded context window – 1 million token capacity (with plans to expand to 2 million)

- Native multimodality – Seamlessly processes text, images, audio, video, and code

- Advanced code capabilities – Significant improvements in web app creation and code transformation

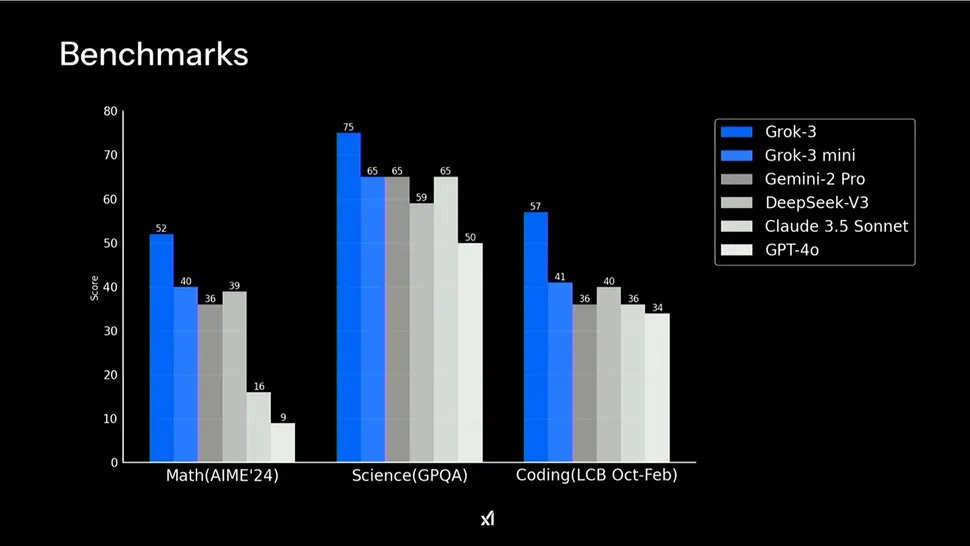

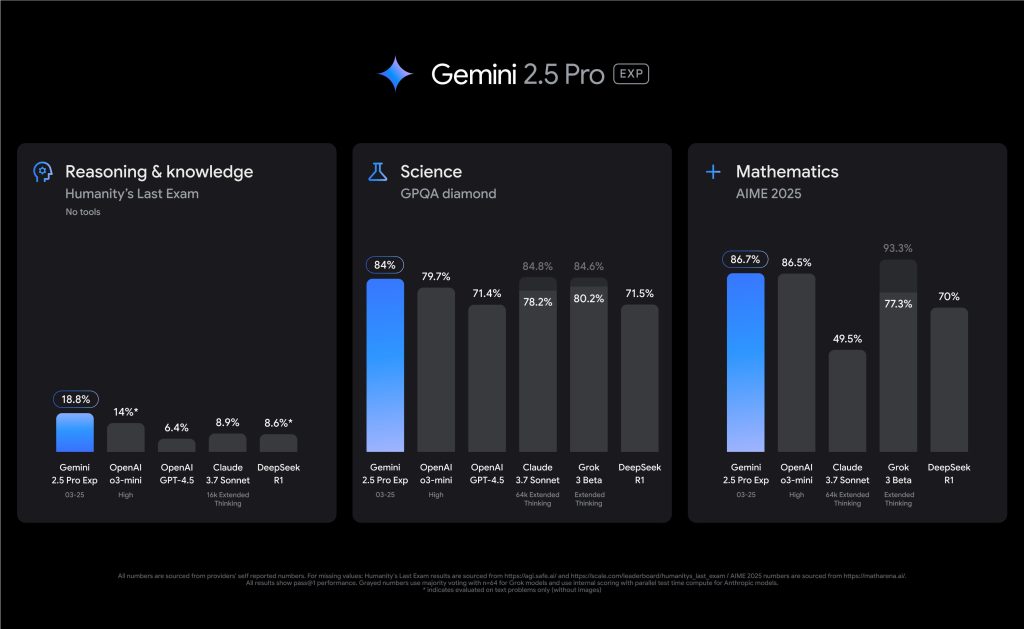

Gemini 2.5 Pro has established itself as a performance leader, debuting at the #1 position on the LMArena leaderboard. It particularly excels in benchmarks requiring advanced reasoning, scoring an industry-leading 18.8% on Humanity’s Last Exam without using external tools. In mathematics and science, it scores 86.7% on AIME 2025 and 84.0% on GPQA Diamond, respectively.

Compared to previous Gemini models, version 2.5 Pro represents a substantial leap forward. While Gemini 2.0 introduced important foundational capabilities, 2.5 Pro combines a significantly enhanced base model with improved post-training techniques. The most notable improvements appear in coding performance, reasoning depth, and contextual understanding—areas where earlier versions showed limitations.

Exploring GPT-4.5

On February 27, 2025, OpenAI introduced GPT-4.5, describing it as its “largest and most advanced chat model to date.” The research preview sparked immediate excitement within the AI community, with initial tests indicating that interactions with the model feel exceptionally natural, thanks to its extensive knowledge base and enhanced ability to comprehend user intent.

GPT-4.5 showcases significant advancements in unsupervised learning. OpenAI achieved this progress by scaling both compute and data, alongside architectural and optimization innovations. The model was trained on Microsoft Azure AI supercomputers, continuing the partnership that has supported OpenAI’s earlier large-scale training runs.

Core Improvements and Capabilities:

- Enhanced pattern recognition – Significantly improved ability to recognize patterns, draw connections, and generate creative insights

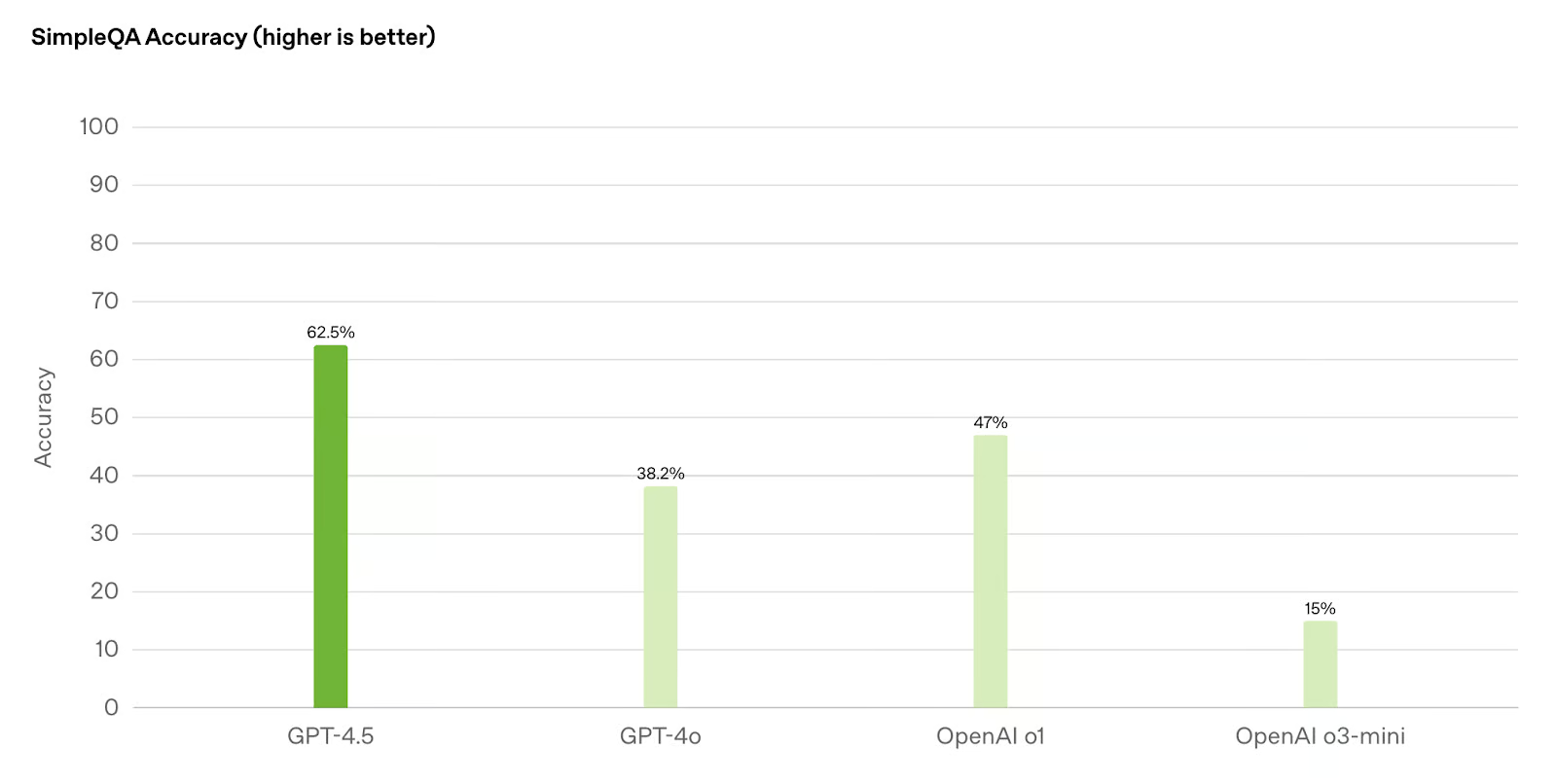

- Reduced hallucinations – Less likely to generate false information compared to previous models like GPT-4o and o1

- Improved “EQ” – Greater emotional intelligence and understanding of nuanced human interactions

- Advanced steerability – Better understanding of and adherence to complex user instructions

OpenAI has placed particular emphasis on training GPT-4.5 for human collaboration. New techniques enhance the model’s steerability, understanding of nuance, and natural conversation flow. This makes it particularly effective in writing and design assistance, where it demonstrates stronger aesthetic intuition and creativity than previous iterations.

In real-world applications, GPT-4.5 shows remarkable versatility. Its expanded knowledge base and improved reasoning capabilities make it suitable for a wide range of tasks, from detailed content creation to sophisticated problem-solving. OpenAI CEO Sam Altman has described the model in positive terms, highlighting its “unique effectiveness” despite not leading in all benchmark categories.

The deployment strategy for GPT-4.5 reflects OpenAI’s measured approach to releasing powerful AI systems. Initially available to ChatGPT Pro subscribers and developers on paid tiers through various APIs, the company plans to gradually expand access to ChatGPT Plus, Team, Edu, and Enterprise subscribers. This phased rollout allows OpenAI to monitor performance and safety as usage scales up.

Performance Metrics: A Comparative Analysis

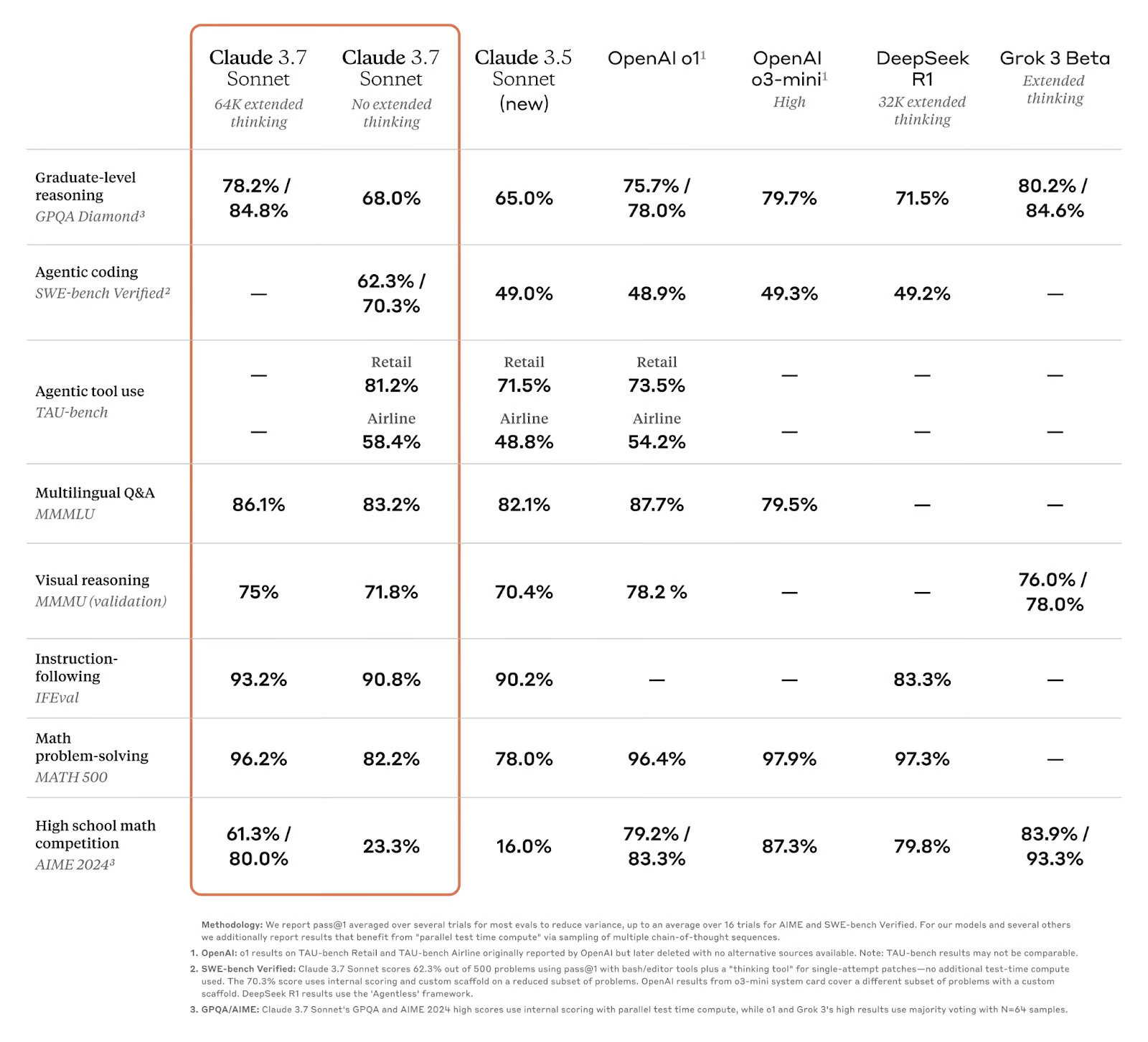

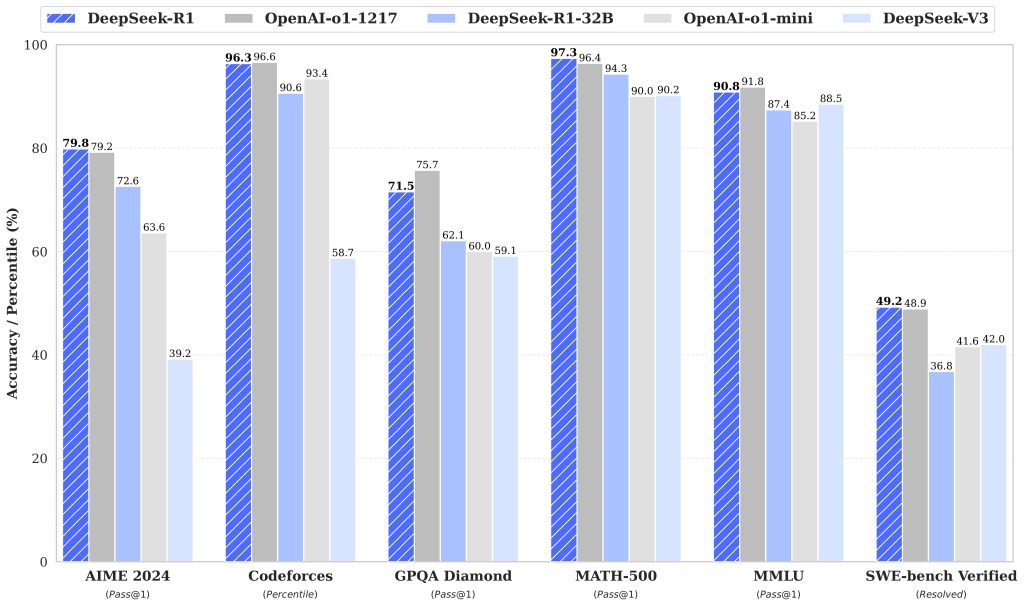

Benchmark results offer a reasonably objective, if incomplete, view of what these models can do. Gemini 2.5 Pro and GPT-4.5 each show distinct strengths across domains, as the comparison below illustrates.

| Benchmark | Gemini 2.5 Pro (03-25) | OpenAI GPT-4.5 | Claude 3.7 Sonnet | Grok 3 Preview |
|---|---|---|---|---|
| LMArena (Overall) | #1 | #2 | #21 | #2 |
| Humanity’s Last Exam (No Tools) | 18.8% | 6.4% | 8.9% | – |
| GPQA Diamond (Single Attempt) | 84.0% | 71.4% | 78.2% | 80.2% |
| AIME 2025 (Single Attempt) | 86.7% | – | 49.5% | 77.3% |
| SWE-Bench Verified | 63.8% | 38.0% | 70.3% | – |
| Aider Polyglot (Whole/Diff) | 74.0% / 68.6% | 44.9% diff | 64.9% diff | – |
| MRCR (128k) | 91.5% | 48.8% | – | – |
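To read the table programmatically, the sketch below copies a subset of its rows into a dictionary and reports the top reported score per benchmark. The names `SCORES` and `leader` are illustrative, not part of any published tool, and missing cells are stored as `None`.

```python
from typing import Optional

# Scores copied from the comparison table; None marks a cell with no reported value.
SCORES: dict[str, dict[str, Optional[float]]] = {
    "Humanity's Last Exam (No Tools)": {
        "Gemini 2.5 Pro": 18.8, "GPT-4.5": 6.4,
        "Claude 3.7 Sonnet": 8.9, "Grok 3 Preview": None,
    },
    "GPQA Diamond": {
        "Gemini 2.5 Pro": 84.0, "GPT-4.5": 71.4,
        "Claude 3.7 Sonnet": 78.2, "Grok 3 Preview": 80.2,
    },
    "AIME 2025": {
        "Gemini 2.5 Pro": 86.7, "GPT-4.5": None,
        "Claude 3.7 Sonnet": 49.5, "Grok 3 Preview": 77.3,
    },
    "SWE-Bench Verified": {
        "Gemini 2.5 Pro": 63.8, "GPT-4.5": 38.0,
        "Claude 3.7 Sonnet": 70.3, "Grok 3 Preview": None,
    },
    "MRCR (128k)": {
        "Gemini 2.5 Pro": 91.5, "GPT-4.5": 48.8,
        "Claude 3.7 Sonnet": None, "Grok 3 Preview": None,
    },
}


def leader(benchmark: str) -> tuple[str, float]:
    """Return (model, score) for the highest reported score on a benchmark."""
    reported = {m: s for m, s in SCORES[benchmark].items() if s is not None}
    best = max(reported, key=lambda m: reported[m])
    return best, reported[best]


if __name__ == "__main__":
    for name in SCORES:
        model, score = leader(name)
        print(f"{name}: {model} ({score}%)")
```

Because unreported cells are skipped rather than treated as zero, a model "leads" only among those with published results for that benchmark.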

Gemini 2.5 Pro shows exceptional strength in reasoning-intensive tasks, particularly long-context reasoning and knowledge retention: it scores 91.5% on MRCR (128k) and 18.8% on Humanity’s Last Exam, which tests the frontier of human knowledge, well ahead of the other models with reported results. Its coding results are mixed. It leads the Aider Polyglot comparison but trails Claude 3.7 Sonnet on SWE-Bench Verified (63.8% vs. 70.3%), an agentic coding benchmark. It also occasionally struggles with factuality in certain domains.

GPT-4.5, by contrast, is strongest in pattern recognition, creative insight generation, and conversational quality. On GPQA Diamond it scores 71.4%, behind the other three models in the table, so its case rests less on scientific benchmarks than on qualities those benchmarks do not capture. The model exhibits enhanced emotional intelligence and aesthetic intuition, making it particularly valuable for creative and design-oriented applications. A key advantage is its reduced tendency to generate false information compared to its predecessors.

In practical terms, Gemini 2.5 Pro represents the superior choice for tasks requiring deep reasoning, multimodal understanding, and handling extremely long contexts. GPT-4.5 offers advantages in creative work, design assistance, and applications where factual precision and natural conversational flow are paramount.

Applications and Use Cases

While benchmark performances provide valuable technical insights, the true measure of these advanced AI models lies in their practical applications across various domains. Both Gemini 2.5 Pro and GPT-4.5 demonstrate distinct strengths that make them suitable for different use cases, with organizations already beginning to leverage their capabilities to solve complex problems.

Gemini 2.5 Pro in Scientific and Technical Domains

Gemini 2.5 Pro’s exceptional reasoning capabilities and extensive context window make it particularly valuable for scientific research and technical applications. Its ability to process and analyze multimodal data—including text, images, audio, video, and code—enables it to handle complex problems that require synthesizing information from diverse sources. This versatility opens up numerous possibilities across industries requiring technical precision and comprehensive analysis.

- Scientific research and data analysis – Gemini 2.5 Pro’s strong performance on benchmarks like GPQA Diamond (84.0%) demonstrates its potential to assist researchers in analyzing complex scientific literature, generating hypotheses, and interpreting experimental results

- Software development and engineering – The model excels at creating web applications, performing code transformations, and developing complex programs with a 63.8% score on SWE-Bench Verified using custom agent setups

- Medical diagnosis and healthcare – Its reasoning capabilities enable analysis of medical imagery alongside patient data to support healthcare professionals in diagnostic processes

- Big data analytics and knowledge management – The 1 million token context window (expanding soon to 2 million) allows processing of entire datasets and code repositories in a single prompt
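Whether a set of documents or a code repository actually fits in a 1 million token window depends on the tokenizer. The sketch below is a rough pre-check only: it assumes about four characters per token, a common rule of thumb for English text rather than Gemini's real tokenizer, and the `reserve` budget for the prompt and reply is an arbitrary illustrative value.

```python
from typing import Iterable

CONTEXT_WINDOW_TOKENS = 1_000_000  # window size quoted in the article
CHARS_PER_TOKEN = 4                # ASSUMPTION: rough heuristic, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a string by ceiling-dividing its length."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(
    texts: Iterable[str],
    window: int = CONTEXT_WINDOW_TOKENS,
    reserve: int = 50_000,  # ASSUMPTION: tokens held back for instructions and output
) -> bool:
    """Return True if the estimated total plus the reserve fits in the window."""
    total = sum(estimate_tokens(t) for t in texts)
    return total + reserve <= window


if __name__ == "__main__":
    files = ["def main():\n    pass\n" * 1000, "README text " * 5000]
    print(fits_in_context(files))
```

For a real decision, the estimate should be replaced with a count from the provider's own token-counting facility, since code and non-English text often tokenize less efficiently than this heuristic suggests.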

GPT-4.5’s Excellence in Creative and Communication Tasks

In contrast, GPT-4.5 demonstrates particular strength in tasks requiring nuanced communication, creative thinking, and aesthetic judgment. OpenAI emphasized training this model specifically for human collaboration, resulting in enhanced capabilities for content creation, design assistance, and natural communication.

- Content creation and writing – GPT-4.5 shows enhanced aesthetic intuition and creativity, making it valuable for generating marketing copy, articles, scripts, and other written content

- Design collaboration – The model’s improved understanding of nuance and context makes it an effective partner in design processes, from conceptualization to refinement

- Customer engagement – With greater emotional intelligence, GPT-4.5 provides more appropriate and natural responses in customer service contexts

- Educational content development – The model excels at tailoring explanations to different knowledge levels and learning styles

Companies across various sectors are already integrating these models into their workflows. Microsoft has incorporated OpenAI’s technology directly into its product suite, providing enterprise users with immediate access to GPT-4.5’s capabilities. Similarly, Google’s Gemini 2.5 Pro is finding applications in research institutions and technology companies seeking to leverage its reasoning and multimodal strengths.

The complementary strengths of these models suggest that many organizations may benefit from utilizing both, depending on specific use cases. As these technologies continue to mature, we can expect to see increasingly sophisticated applications that fundamentally transform knowledge work, creative processes, and problem-solving across industries.
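One way an organization might use both models is a simple routing rule based on the use cases listed in this section. The sketch below is hypothetical: the task categories, the 128,000-token threshold, and the model labels are illustrative assumptions, not identifiers from either vendor's API, and no request is actually sent.

```python
from dataclasses import dataclass

# Hypothetical labels for illustration; not official API model identifiers.
GEMINI = "gemini-2.5-pro"
GPT = "gpt-4.5"

# ASSUMPTION: task kinds loosely drawn from the use cases described in this article.
CREATIVE_KINDS = {"copywriting", "design_feedback", "customer_support", "tutoring"}

# ASSUMPTION: above this many input tokens, prefer the long-context model.
LONG_CONTEXT_THRESHOLD = 128_000


@dataclass(frozen=True)
class Task:
    kind: str                 # e.g. "math", "copywriting"
    context_tokens: int = 0   # estimated size of the input
    multimodal: bool = False  # True if the input includes audio or video


def pick_model(task: Task) -> str:
    """Choose a model label for a task using the article's rough guidance."""
    # Long or multimodal inputs go to the long-context, multimodal model.
    if task.multimodal or task.context_tokens > LONG_CONTEXT_THRESHOLD:
        return GEMINI
    # Communication-heavy and creative work goes to GPT-4.5.
    if task.kind in CREATIVE_KINDS:
        return GPT
    # Everything else (reasoning, analysis, code) defaults to Gemini 2.5 Pro.
    return GEMINI


if __name__ == "__main__":
    print(pick_model(Task("copywriting")))
    print(pick_model(Task("math")))
```

The default branch is a design choice, not a recommendation; a team could just as reasonably default to whichever model is cheaper or already integrated.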

The Future of AI: What’s Next?

As Gemini 2.5 Pro and GPT-4.5 push the boundaries of what’s possible, the future trajectory of AI development comes into sharper focus. Google’s commitment to “building thinking capabilities directly into all models” suggests a future where reasoning becomes standard across AI systems. Similarly, OpenAI’s approach of “scaling unsupervised learning and reasoning” points to models with ever-expanding capabilities to understand and generate human-like content.

The coming years will likely see AI models with dramatically expanded context windows beyond the current limits, more sophisticated reasoning, and seamless integration across all modalities. We may also witness the rise of truly autonomous AI agents capable of executing complex tasks with minimal human supervision. However, these advancements bring significant challenges. As AI capabilities increase, so too does the importance of addressing potential risks related to misinformation, privacy, and the displacement of human labor.

Ethical considerations must remain at the forefront of AI development. OpenAI acknowledges that “each increase in model capabilities is an opportunity to make models safer”, highlighting the dual responsibility of advancement and protection. The AI community will need to develop robust governance frameworks that encourage innovation while safeguarding against misuse.

The AI revolution represented by Gemini 2.5 Pro and GPT-4.5 is only beginning. While the pace of advancement brings both excitement and apprehension, one thing remains clear: the future of AI will be defined not just by technological capabilities, but by how we choose to harness them for human benefit. By prioritizing responsible development that augments human potential rather than replacing it, we can ensure that the next generation of AI models serves as a powerful tool for collective progress.