RAG-as-a-Service: Unlock Generative AI for Your Business

With the rise of Large Language Models (LLMs) and generative AI, integrating generative AI into your business workflows can significantly improve efficiency. If you’re new to generative AI, the jargon can be intimidating. This blog demystifies the basic terminology of generative AI and shows you how to get started with a custom AI solution for your business using RAG-as-a-Service.

What is Retrieval Augmented Generation (RAG)?

Retrieval Augmented Generation (RAG) is a key technique for applying LLMs and generative AI to business workflows. Instead of relying only on what a pre-trained Transformer model learned during training, RAG retrieves relevant passages from your own knowledge base and injects them into the prompt alongside the user’s query. The model can then draw on this data, which it may never have seen during training, to generate more accurate and relevant answers.
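To make the “injecting” step concrete, here is a minimal Python sketch of how retrieved passages might be combined with a user’s question into a single prompt. The function name `build_rag_prompt`, the prompt wording, and the sample passages are all hypothetical. Real RAG systems differ in how they phrase and structure this prompt.

```python
# Minimal sketch: assemble a RAG prompt from retrieved chunks.
# build_rag_prompt and the prompt wording are hypothetical, for illustration only.


def build_rag_prompt(question: str, retrieved_chunks: list[str]) -> str:
    """Combine retrieved knowledge-base passages with the user's question."""
    # Number each passage so the model can tell them apart (and cite them).
    context = "\n\n".join(
        f"[{i}] {chunk}" for i, chunk in enumerate(retrieved_chunks, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    # Made-up passages standing in for chunks returned by semantic search.
    chunks = [
        "Refunds are processed within 5 business days.",
        "Orders over $50 ship free within the continental US.",
    ]
    print(build_rag_prompt("How long do refunds take?", chunks))
```

The resulting string is what gets sent to the LLM. The model then answers from the supplied context rather than from its training data alone.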

RAG is both cost-effective and efficient because it lets the model use your data without retraining or fine-tuning it, which makes generative AI more accessible. Let’s explore some key terms related to RAG.

Key Terminologies in RAG

Chunking

An LLM can only process a limited amount of text at once, known as its ‘Context Window’ and measured in tokens. The size of the Context Window varies from model to model. To work within this limit, business data provided as documents or other text is split into smaller segments called chunks. When a query comes in, only the most relevant chunks are retrieved and passed to the model.

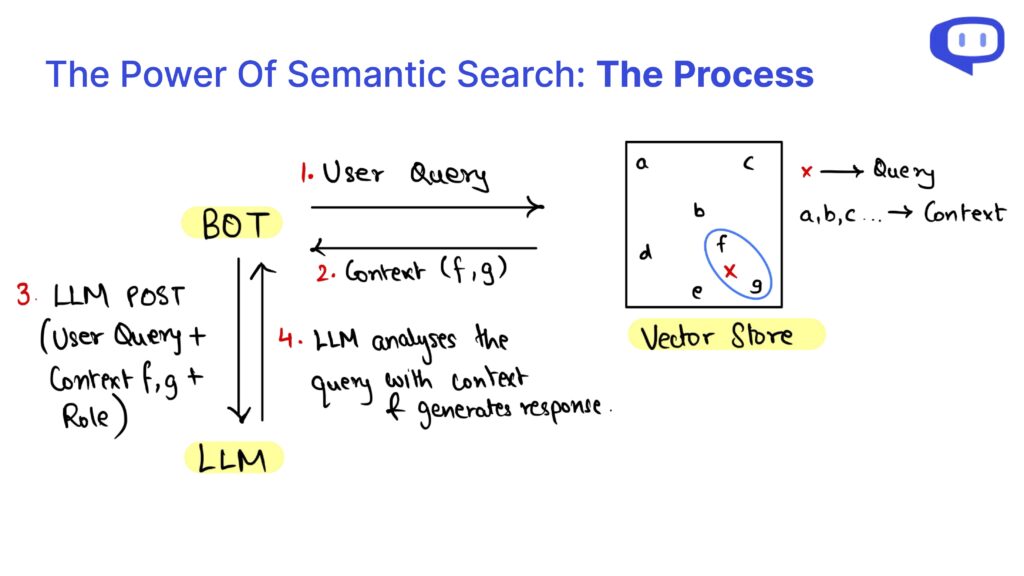
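A common way to chunk text is a fixed-size sliding window with some overlap between neighbouring chunks, so that text near a boundary appears in both adjacent chunks. The Python sketch below assumes that chunk size is counted in whitespace-separated words. Real systems usually count model tokens with the model’s tokenizer, and often split on sentence or paragraph boundaries instead. The names `chunk_text`, `chunk_size`, and `overlap` are hypothetical.

```python
# Minimal sketch of fixed-size chunking with overlap.
# Assumption: sizes are measured in words, not model tokens.


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of at most chunk_size words; consecutive
    chunks share `overlap` words so context at boundaries is not lost."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once this window has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


doc = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_text(doc, chunk_size=4, overlap=1):
    print(chunk)
# w0 w1 w2 w3
# w3 w4 w5 w6
# w6 w7 w8 w9
```

Larger overlaps preserve more context across boundaries but produce more chunks to embed and store.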

Because the chunks are unstructured and a query may be worded differently from the matching text in the knowledge base, chunks are retrieved using semantic search, which matches on meaning rather than on exact keywords.

Vector Databases

Vector databases such as Pinecone and Chroma, and vector search libraries such as FAISS, store and search the embeddings of business data. An embedding is a numerical vector, produced by an embedding model, that represents the meaning of a piece of text. Embeddings live in a high-dimensional vector space in which semantically similar texts lie closer together.
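“Closer together” is commonly measured with cosine similarity, although dot product and Euclidean distance are also used. Cosine similarity is the cosine of the angle between two vectors, so values near 1 mean “pointing the same way.” The Python sketch below uses hand-picked 3-dimensional vectors purely for illustration. Real embedding models produce vectors with hundreds or thousands of dimensions, and the phrases and numbers here are made up.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two non-zero vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy "embeddings", hand-picked for illustration only (not from a real model).
embeddings = {
    "refund policy": [0.9, 0.1, 0.0],
    "money-back guarantee": [0.8, 0.2, 0.1],
    "office parking": [0.0, 0.1, 0.9],
}

reference = embeddings["refund policy"]
for text, vector in embeddings.items():
    print(f"{text!r}: {cosine_similarity(reference, vector):.3f}")
```

With these toy vectors, the two refund-related phrases score close to 1, and the unrelated phrase scores near 0. This is the property semantic search relies on.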

When a user submits a query, the query is converted into an embedding with the same model, and that vector is used to find the most semantically similar chunks in the vector database.
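The lookup step can be sketched as a brute-force nearest-neighbour search. Every stored chunk is scored against the query vector, and the top *k* are kept. This is a simplification. Production vector databases typically use approximate nearest-neighbour indexes to stay fast over millions of vectors. In the sketch, the index is a plain list of `(chunk_text, embedding)` pairs, the vectors are hand-picked toy values, and the query vector is assumed to come from the same embedding model as the chunks. The names `top_k_chunks` and `_normalise` are hypothetical.

```python
import heapq
import math


def _normalise(vector: list[float]) -> list[float]:
    """Scale a non-zero vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def top_k_chunks(query_vec, index, k=2):
    """Brute-force search over a list of (chunk_text, embedding) pairs.
    Returns up to k (score, chunk_text) tuples, highest cosine similarity first."""
    q = _normalise(query_vec)
    scored = (
        (sum(a * b for a, b in zip(q, _normalise(vec))), text)
        for text, vec in index
    )
    return heapq.nlargest(k, scored)


# Toy index: made-up chunks with hand-picked vectors (not from a real model).
index = [
    ("Refunds take 5 days.", [0.9, 0.1, 0.0]),
    ("Free shipping over $50.", [0.2, 0.9, 0.1]),
    ("Parking is on level 2.", [0.0, 0.1, 0.9]),
]
query_embedding = [0.8, 0.2, 0.0]  # stand-in for an embedded user query
for score, chunk in top_k_chunks(query_embedding, index, k=2):
    print(f"{score:.3f}  {chunk}")
```

The returned chunks are what a RAG system would then place into the prompt for the LLM.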

RAG-as-a-Service

Implementing RAG for your business can be daunting if you lack technical expertise. This is where RAG-as-a-Service (RaaS) comes into play.

We at meetcody.ai offer a plug-and-play solution for your business needs. Simply create an account with us and get started for free. We handle the chunking, vector databases, and the entire RAG process, providing you with complete peace of mind.

FAQs

1. What is RAG-as-a-Service (RaaS)?

RAG-as-a-Service (RaaS) is a comprehensive solution that handles the entire Retrieval Augmented Generation process for your business. This includes data chunking, storing embeddings in vector databases, and managing semantic search to retrieve relevant data for queries.

2. How does chunking help in the RAG process?

Chunking splits large business documents into smaller, manageable pieces so that the relevant ones fit within the LLM’s Context Window. Semantic search can then retrieve just the pieces that match a query, instead of passing whole documents to the model.

3. What are vector databases, and why are they important?

Vector databases store the numerical representations (embeddings) of your business data. These embeddings enable efficient retrieval of semantically similar data when a query is made, helping the LLM produce accurate and relevant responses.

Integrate RAG into your business with ease and efficiency by leveraging the power of RAG-as-a-Service. Get started with meetcody.ai today and transform your workflow with advanced generative AI solutions.