OpenAI GPT-3.5 Turbo & GPT-4 Fine-Tuning

OpenAI has opened up fine-tuning for GPT-3.5 Turbo. Developers can now customize the model on their own data so that it fits their applications more closely. Intriguingly, OpenAI says that a fine-tuned GPT-3.5 Turbo can match, or even outperform, base GPT-4 on certain narrow tasks.

This customization brings several advantages:

- Better Instruction Following: Developers can train the model to follow specific guidelines more reliably, for example keeping its output terse or always replying in the language the prompt asks for.

- Consistent Responses: For tasks that demand a specific output format, such as auto-completing code or composing API calls, the model can be trained to format its responses more consistently.

- Tonal Refinement: A brand’s voice can be distinctive. The model can be tweaked to mirror this voice, ensuring alignment with brand identity.

One of the standout benefits of fine-tuning is efficiency. Early testers have reported cutting prompt size by up to 90% by fine-tuning instructions into the model itself, without compromising performance. Shorter prompts speed up each API call and lower its cost.

Delving into the mechanics, fine-tuning is a multistep process: preparing a training dataset, training the fine-tuned model, and deploying it. Dataset preparation is the linchpin. It involves writing prompts, assembling a diverse set of well-structured demonstrations, training the model on those demonstrations, and then testing the result.
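To make the dataset step concrete, here is a minimal sketch of how demonstrations might be turned into a training file. It assumes the chat-style JSON Lines layout (one `{"messages": [...]}` object per line) that OpenAI documents for GPT-3.5 Turbo fine-tuning. The demonstrations, the "Acme" system prompt, and the helper names are all hypothetical, and the validator is a simplified check rather than OpenAI's own.

```python
import json

# Hypothetical demonstrations: each pairs a user prompt with the ideal reply.
DEMONSTRATIONS = [
    ("Where is my order?", "Happy to help! Could you share your order number?"),
    ("Can I return an item?", "Of course! Returns are accepted within 30 days."),
]

# Assumed brand-voice instruction; in practice this is whatever you want baked in.
SYSTEM_PROMPT = "You are a friendly support assistant for Acme."


def to_chat_example(user_text, assistant_text, system_text=SYSTEM_PROMPT):
    """Build one training example in the chat-messages layout."""
    return {
        "messages": [
            {"role": "system", "content": system_text},
            {"role": "user", "content": user_text},
            {"role": "assistant", "content": assistant_text},
        ]
    }


def validate_example(example):
    """Return a list of problems found in one example (empty if none).

    Simplified: only system, user, and assistant roles are accepted here.
    """
    messages = example.get("messages")
    if not isinstance(messages, list) or not messages:
        return ["missing or empty 'messages' list"]
    problems = []
    for i, msg in enumerate(messages):
        if msg.get("role") not in {"system", "user", "assistant"}:
            problems.append(f"message {i}: unexpected role {msg.get('role')!r}")
        if not isinstance(msg.get("content"), str) or not msg["content"].strip():
            problems.append(f"message {i}: empty content")
    if messages[-1].get("role") != "assistant":
        problems.append("last message should be the assistant's reply")
    return problems


def to_jsonl(examples):
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(ex, ensure_ascii=False) for ex in examples) + "\n"
```

Calling `to_jsonl([to_chat_example(u, a) for u, a in DEMONSTRATIONS])` yields text that can be written to a `.jsonl` file and uploaded for training. Running `validate_example` on every example first is a cheap way to catch malformed demonstrations before paying for a training run.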

However, OpenAI strikes a note of caution. For all its appeal, fine-tuning shouldn’t be the first step in improving a model’s performance, because it demands a substantial investment of time and expertise. Before fine-tuning, developers should first try techniques such as prompt engineering, prompt chaining, and function calling. These strategies, coupled with other best practices, are usually the place to start.
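As an illustration of one of those lighter-weight techniques, the sketch below shows prompt chaining: a task is split into two prompts, and the output of the first is fed into the second. The function names and prompt wording are hypothetical, and `call_model` stands in for whatever function sends a prompt to a model and returns its text reply.

```python
from typing import Callable


def chain_prompts(question: str, call_model: Callable[[str], str]) -> str:
    """Two-step chain: gather the key facts first, then answer using them."""
    # Step 1: ask the model to list the facts the answer depends on.
    facts = call_model(f"List the key facts needed to answer: {question}")
    # Step 2: feed the first output into a second, narrower prompt.
    return call_model(
        f"Using only these facts:\n{facts}\n\nAnswer the question: {question}"
    )


def echo_model(prompt: str) -> str:
    """Stand-in for a real model call; returns the prompt in upper case."""
    return prompt.upper()
```

Passing `echo_model` as `call_model` makes the data flow visible without any network access: the final result contains the upper-cased first prompt embedded inside the second. In a real application, `call_model` would wrap an actual API request.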

Anticipation Builds for GPT-4 Fine-Tuning

Building on the momentum of the GPT-3.5 Turbo fine-tuning announcement, OpenAI has teased the developer community with another revelation: fine-tuning for GPT-4 is slated to arrive this fall. This has certainly raised excitement, with many developers eager to customize the more capable model.

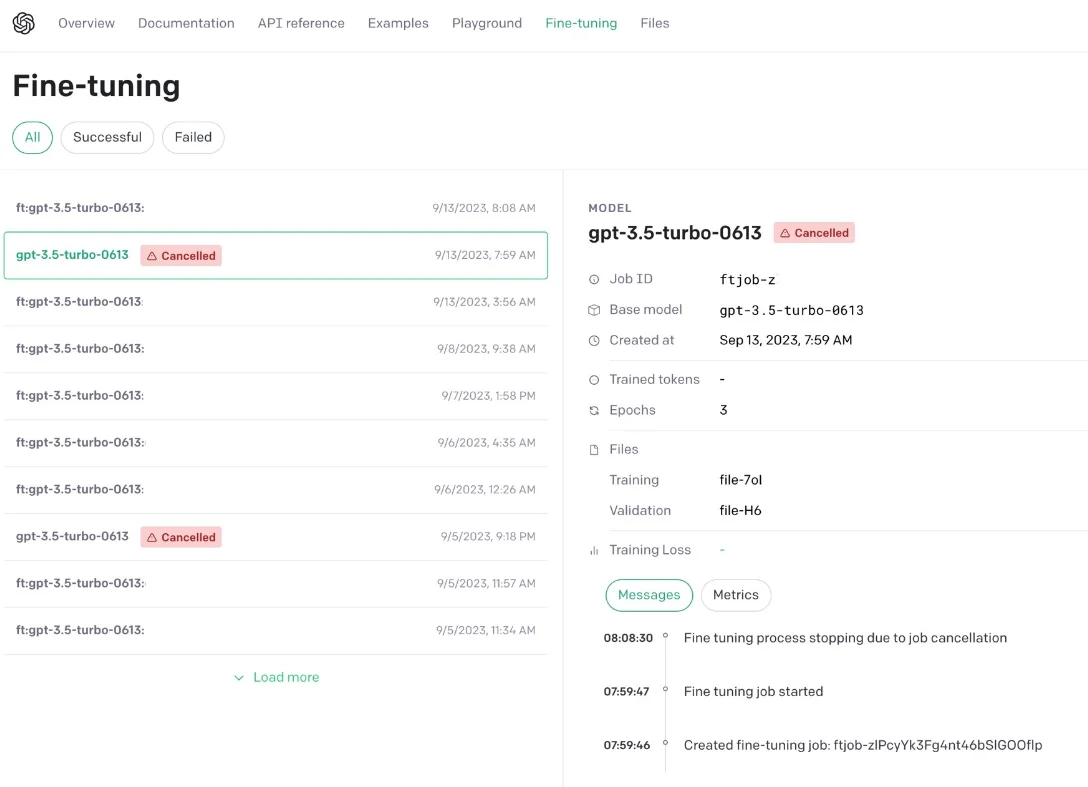

Fine-Tuning Becomes Easier

In its latest update, OpenAI has launched a fine-tuning user interface that lets developers visually track their fine-tuning jobs. There’s more on the horizon, too: the ability to create fine-tunes directly through this UI is due in the coming months.

Furthermore, OpenAI has raised the concurrent training limit from one job to three, so developers can fine-tune multiple models at the same time.

With these advancements, OpenAI continues to fortify its position at the forefront of AI innovation, consistently offering tools that not only redefine the present but also pave the way for the future.