Gemini 1.5 Flash vs GPT-4o: Google’s Response to GPT-4o?

The AI race has intensified into a game of catch-up among the big players in tech. The launch of GPT-4o just before Google I/O was no coincidence. GPT-4o’s capabilities in multimodality, or omnimodality to be precise, have had a significant impact on the generative AI competition. However, Google is not one to hold back. During Google I/O, it announced new variants of its Gemini and Gemma models. Among all the models announced, Gemini 1.5 Flash stands out as the most impactful. In this blog, we will explore the top features of Gemini 1.5 Flash and compare it with both Gemini 1.5 Pro and GPT-4o to determine which one is better.

Comparison of Gemini 1.5 Flash vs GPT-4o

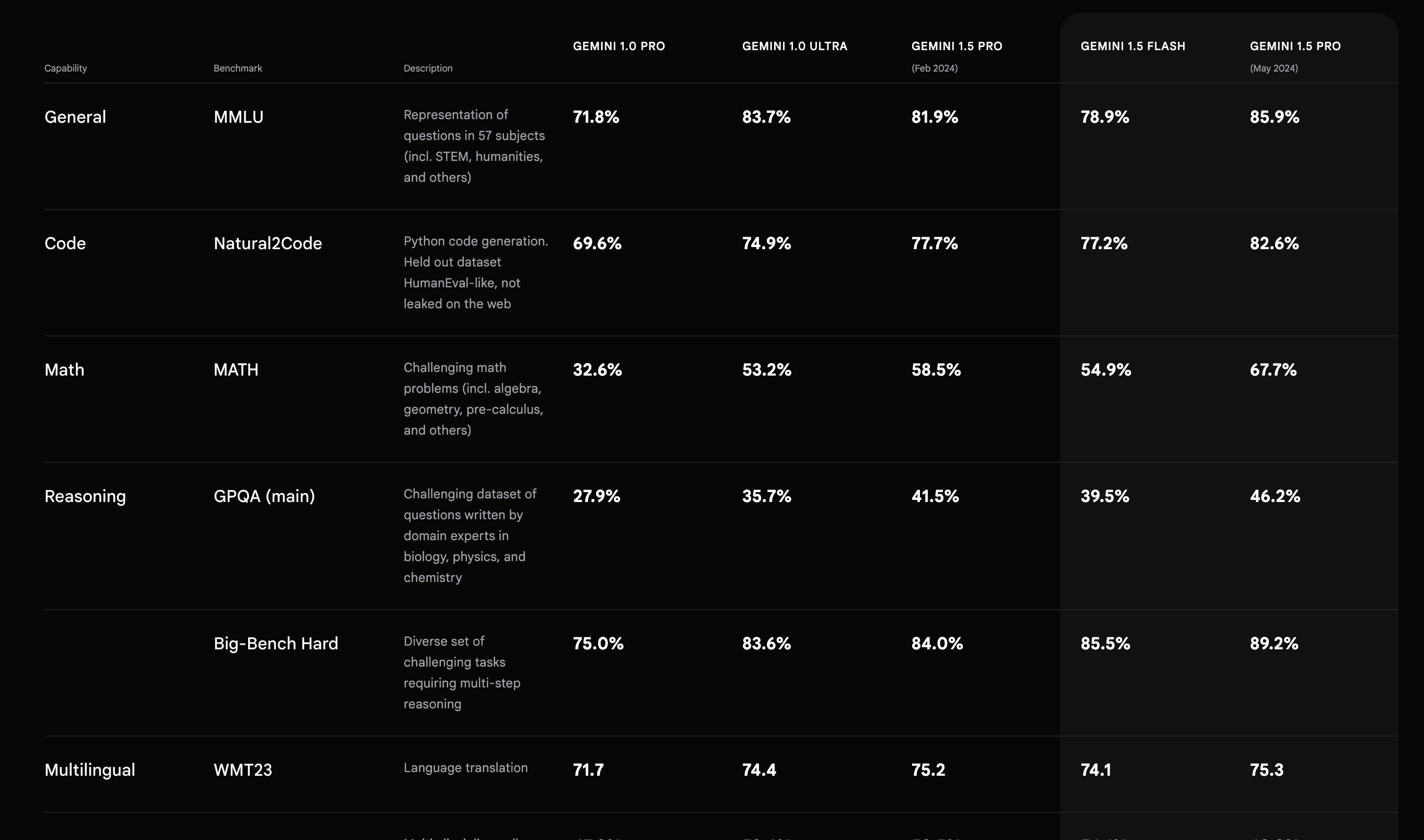

Based on the benchmark scores released by Google, Gemini 1.5 Flash outperforms all of Google’s other LLMs on audio and is on par with the outgoing Gemini 1.5 Pro (Feb 2024) model on the other benchmarks. Although we would not recommend relying on benchmarks alone to assess the performance of any LLM, they help quantify performance differences and minor upgrades.

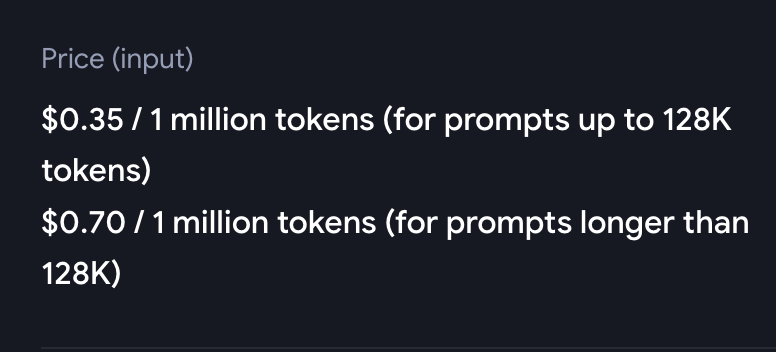

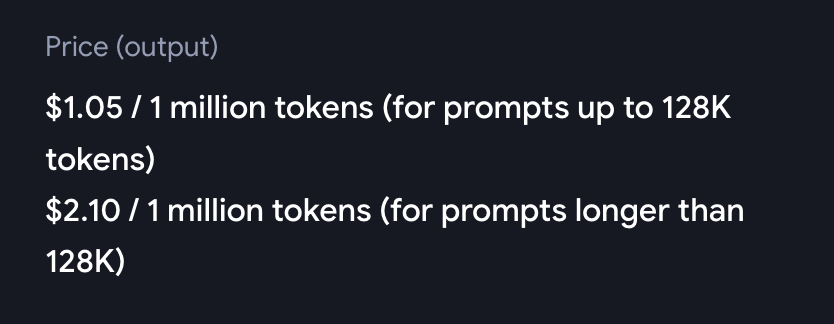

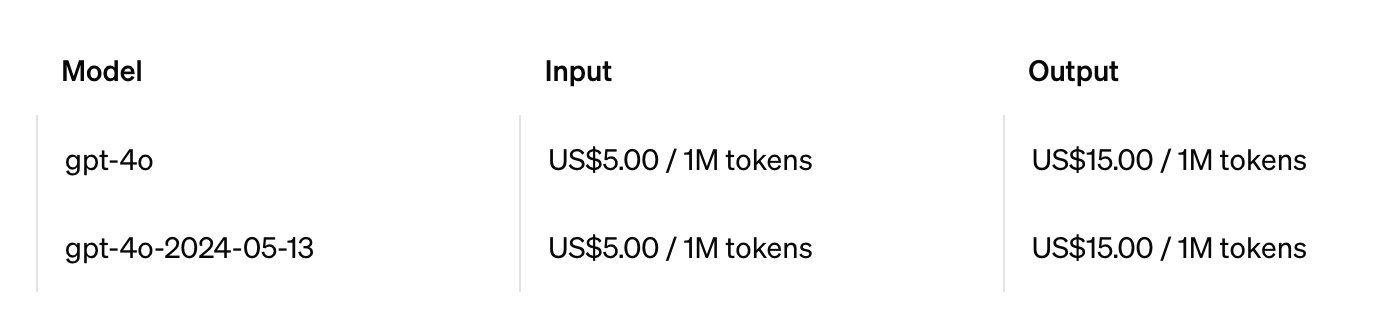

The standout factor is the cost of Gemini 1.5 Flash. Compared to GPT-4o, Gemini 1.5 Flash is much more affordable.
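To put the cost gap into numbers for your own workload, a small calculator is enough. The sketch below assumes per-million-token pricing with separate input and output rates; the function name and the prices in the usage lines are placeholders chosen for illustration, not real list prices, so substitute the current figures from each provider's pricing page.

```python
def request_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Return the cost of one request, in the same currency as the prices."""
    total = (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    )
    return total / 1_000_000


# Placeholder prices for illustration only (NOT real list prices).
model_a_cost = request_cost(
    100_000, 2_000, input_price_per_million=1.0, output_price_per_million=3.0
)
model_b_cost = request_cost(
    100_000, 2_000, input_price_per_million=10.0, output_price_per_million=30.0
)

print(f"Model A: {model_a_cost:.4f} per request")
print(f"Model B: {model_b_cost:.4f} per request")
print(f"Model B costs {model_b_cost / model_a_cost:.1f}x as much as Model A")
```

Multiplying the per-request figure by your expected daily request volume gives a quick estimate of how the price difference scales.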

Context Window

Just like Gemini 1.5 Pro, Flash comes with a context window of 1 million tokens, which is larger than that of any OpenAI model and among the largest context windows for production-grade LLMs. A larger context window lets the model take in more data at once and can improve techniques such as RAG (Retrieval-Augmented Generation) for use cases with a large knowledge base by allowing a larger chunk size. It also leaves more room for source material and long drafts in a single prompt, which is helpful in scenarios like writing articles, emails, and press releases.
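The chunk-size point can be illustrated with a minimal chunker. This is a sketch under stated assumptions: it counts whitespace-separated words as a rough stand-in for tokens (a real pipeline would use the model's tokenizer), and `chunk_words` is a hypothetical helper, not part of any library.

```python
def chunk_words(text: str, chunk_size: int, overlap: int = 0) -> list[str]:
    """Split text into chunks of up to `chunk_size` words.

    Consecutive chunks share `overlap` words so that context is not
    cut off abruptly at a chunk boundary.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once this chunk has reached the end of the document.
        if start + chunk_size >= len(words):
            break
    return chunks


document = " ".join(f"w{i}" for i in range(10))

# Small chunks suit a small context window.
small_chunks = chunk_words(document, chunk_size=4, overlap=1)
# With a large window, the whole document can fit in a single chunk.
large_chunks = chunk_words(document, chunk_size=1000)

print(len(small_chunks), len(large_chunks))
```

Larger chunks mean fewer retrieval boundaries and more surrounding context per retrieved passage, at the price of sending more tokens per request.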

Multimodality

Gemini 1.5 Flash is multimodal: it accepts context in the form of audio, video, documents, and more. Multimodal LLMs are more versatile and open the door to more applications of generative AI without requiring a separate preprocessing step.

“Gemini 1.5 models are built to handle extremely long contexts; they have the ability to recall and reason over fine-grained information from up to at least 10M tokens. This scale is unprecedented among contemporary large language models (LLMs), and enables the processing of long-form mixed-modality inputs including entire collections of documents, multiple hours of video, and almost five days long of audio.” — DeepMind Report

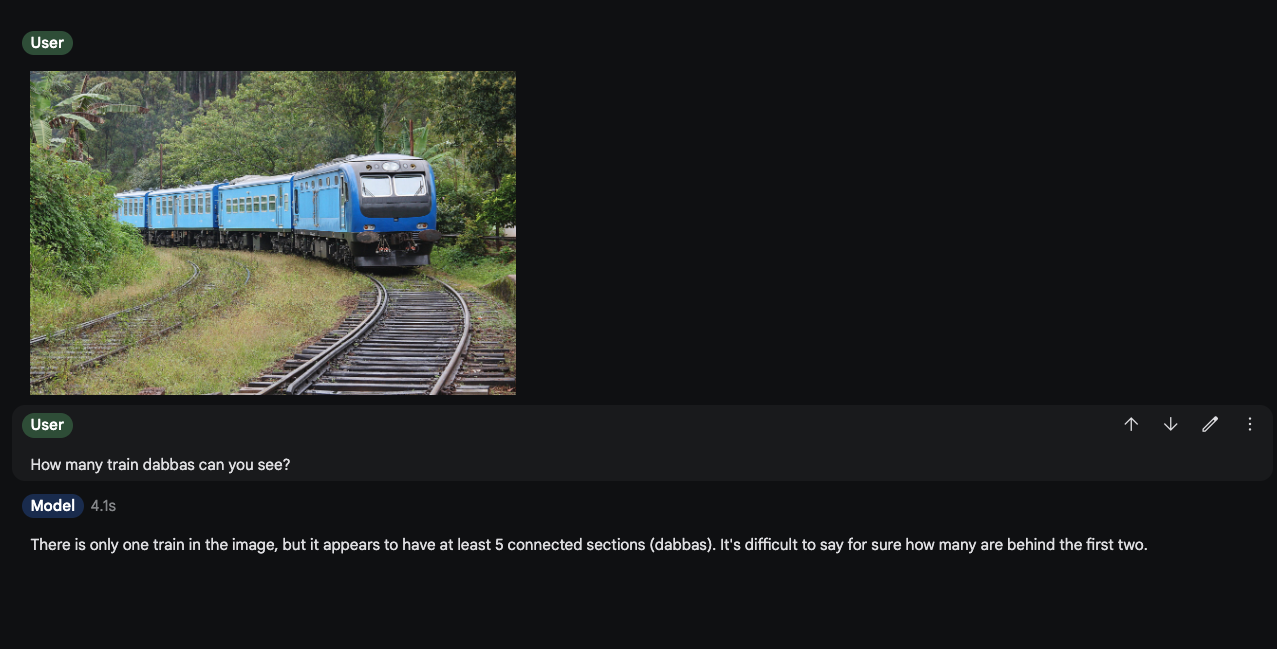

“Dabbas” means train coaches in Hindi. This example demonstrates the model’s multimodal and multilingual performance.

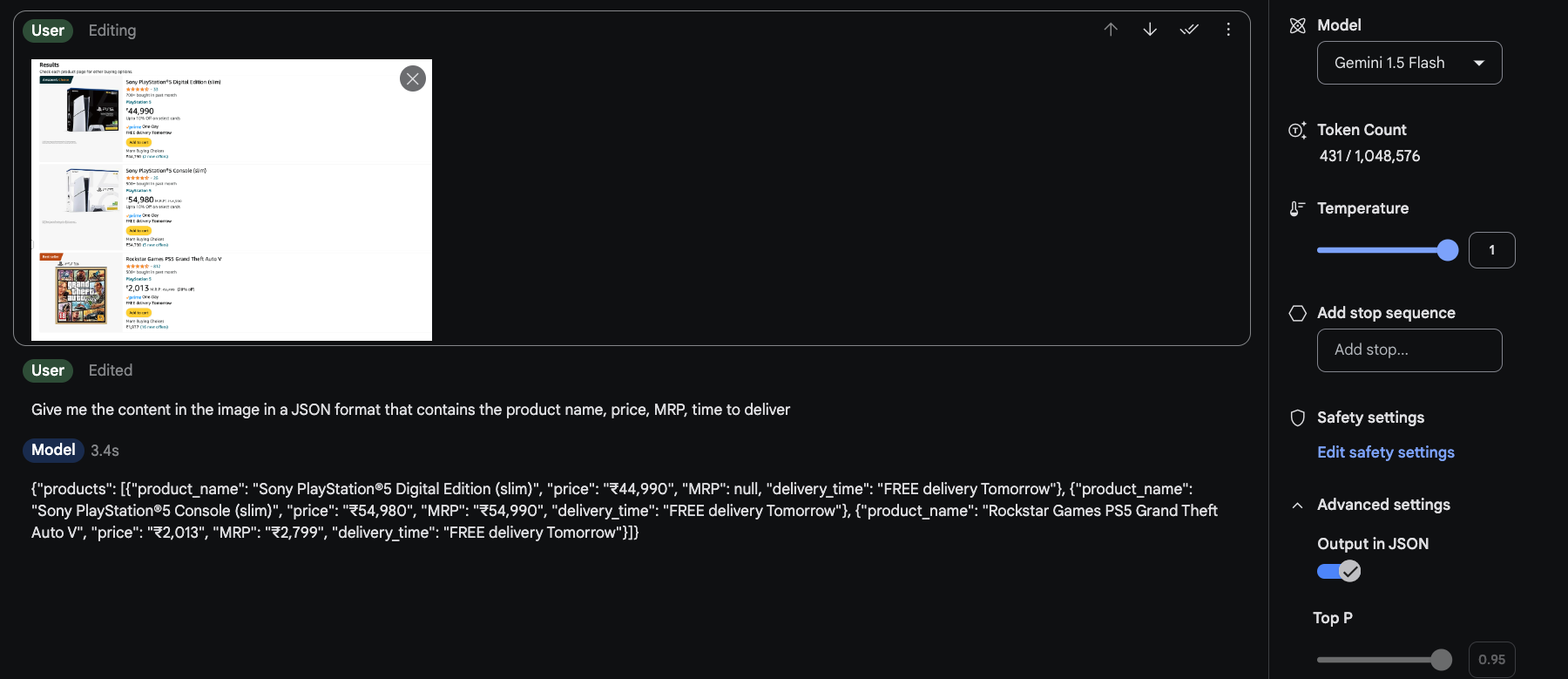

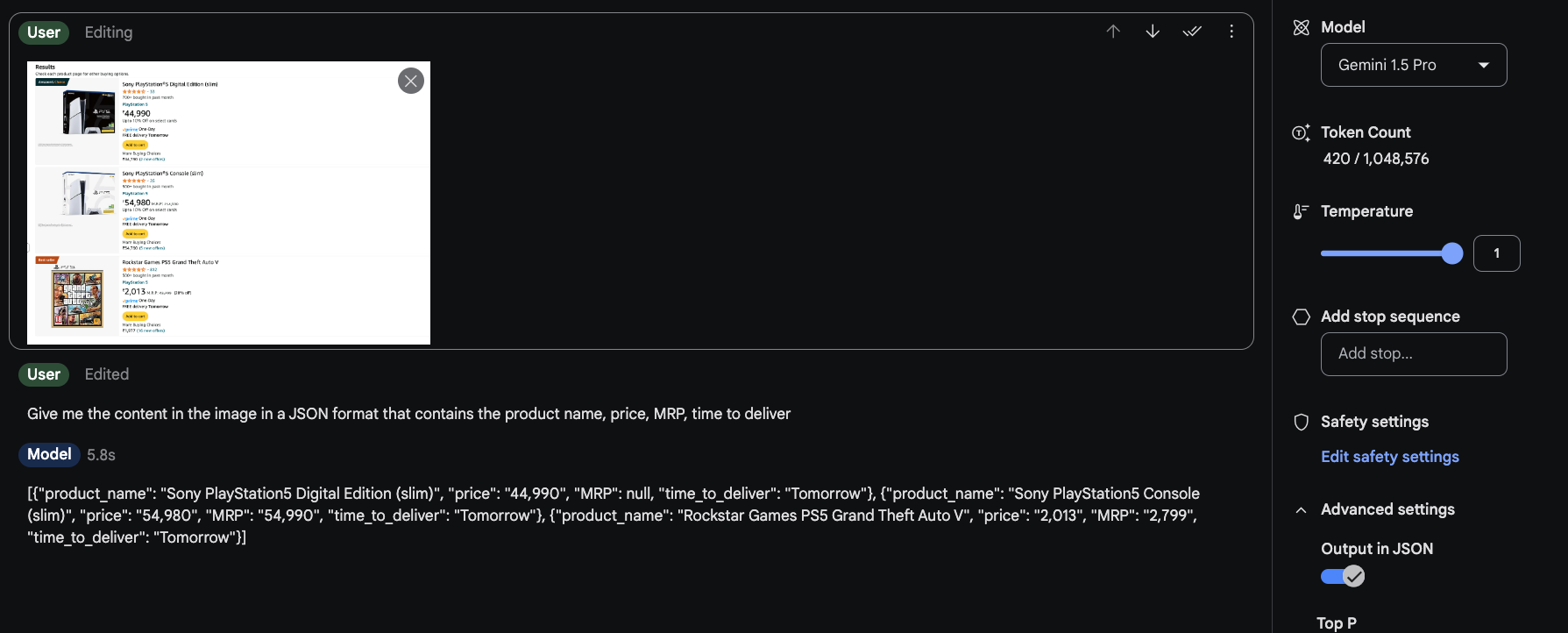

Multimodality also allows us to use LLMs as substitutes for other specialized services, for example OCR or web scraping.

Easily scrape data from web pages and transform it.
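As a rough illustration of the OCR-style use case, the sketch below builds a request body that pairs a text instruction with an image. The field names (`contents`, `parts`, `text`, `inline_data`, `mime_type`, `data`) follow the request shape of the Gemini API's `generateContent` method as we understand it; treat them as an assumption and check the current API reference before relying on them. The helper name is hypothetical, and no network call is made here.

```python
import base64
import json


def build_image_prompt_payload(
    prompt: str, image_bytes: bytes, mime_type: str = "image/png"
) -> dict:
    """Build a JSON-serializable body with one text part and one image part."""
    encoded_image = base64.b64encode(image_bytes).decode("ascii")
    return {
        "contents": [
            {
                "parts": [
                    {"text": prompt},
                    # Assumed field names; verify against the API reference.
                    {"inline_data": {"mime_type": mime_type, "data": encoded_image}},
                ]
            }
        ]
    }


# Stand-in bytes; in practice, read them from a screenshot or scanned page.
fake_image = b"\x89PNG placeholder bytes"
payload = build_image_prompt_payload(
    "Extract all text from this image and return it as JSON.", fake_image
)
body = json.dumps(payload)
print(body[:80])
```

The resulting `body` would be sent as the POST body to the model endpoint along with an API key. Because the image travels with the prompt, no separate OCR step is needed before the model sees it.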

Speed

Gemini 1.5 Flash, as the name suggests, is designed to have an edge over other models in response time. For the web scraping example mentioned above, there is a difference of approximately 2.5 seconds in response time, which makes it almost 40% quicker. That makes Gemini 1.5 Flash a better choice for automation or any use case that requires lower latency.
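If you want to reproduce this kind of comparison on your own prompts, a small timing harness is enough. The sketch below is a minimal example with hypothetical helper names. The 6.25 s and 3.75 s figures in the usage lines are illustrative values chosen to be consistent with a 2.5-second gap and a 40% improvement; they are not measurements from this article.

```python
import statistics
import time
from typing import Callable


def time_call(fn: Callable[[], object], repeats: int = 3) -> float:
    """Return the median wall-clock duration of fn() in seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return statistics.median(durations)


def percent_faster(baseline_s: float, candidate_s: float) -> float:
    """Return how much quicker the candidate is, as a % of the baseline."""
    if baseline_s <= 0:
        raise ValueError("baseline_s must be positive")
    return (baseline_s - candidate_s) / baseline_s * 100


# Illustrative numbers only: a 2.5 s gap on a 6.25 s baseline.
improvement = percent_faster(baseline_s=6.25, candidate_s=3.75)
print(f"{improvement:.0f}% quicker")

# In practice, pass a function that sends the same prompt to each model.
elapsed = time_call(lambda: sum(range(1000)))
print(f"median: {elapsed:.6f} s")
```

Taking the median over several runs reduces the effect of one-off network spikes, which matter more than model speed for short prompts.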

Some interesting use cases of Gemini 1.5 Flash

Summarizing Videos

Gemini 1.5 Pro’s video understanding is the most underrated things in AI.

In ~50s, it “saw” a 11min Youtube video (~175k tokens) of the most iconic moments in sport and was able to perfectly (to my knowledge) list all 18 of the moments.

There is no other video AI this good! pic.twitter.com/LaVGR3ATfU

— Deedy (@deedydas) April 5, 2024

Writing Code using Video

This is mind blowing 🤯

I gave Gemini 1.5 Flash video recording of me shopping and it gave me Selenium code in ~5 seconds.

This can change so many things. pic.twitter.com/Ojm6aueLe7

— Min Choi (@minchoi) May 18, 2024

Automating Gameplay

I built my own omni assistant using Gemini 1.5 Flash to guide me through Super Mario 64.

Gemini can see what I do on my screen and communicate with me in real time via voice, and thanks to the long 1M context, it has a memory of everything we do together.

Incredible. pic.twitter.com/doTngufjFL

— Pietro Schirano (@skirano) May 21, 2024