The Power Of GPT-3.5 16K

Should you upgrade to the paid version of Cody? Here’s why you might want to.

A few days ago, right after OpenAI’s release, we made a new model available to all our paid users: GPT-3.5 16K. Despite the technical-sounding name, it could be a game-changer for your business. In this post, we look at the use cases of GPT-3.5 16K, explore its advantages, and highlight how it differs from the existing GPT-3.5 model and the higher-end GPT-4.

What is GPT-3.5 16K?

If you have used Cody’s free version, you are probably already familiar with the standard GPT-3.5 model, which uses OpenAI’s gpt-3.5-turbo. It is a popular choice because it is affordable, fast, and reliable in most cases. GPT-3.5 16K, on the other hand, uses OpenAI’s gpt-3.5-turbo-16k, an extension of gpt-3.5-turbo. The key difference lies in the ‘16K’.

What is 16K?

The ‘16K’ suffix indicates that the model has a context window of 16,384 tokens, four times the 4,096 tokens of the standard model. We explained what tokens are in detail in a previous post. A smaller context window can lead to several limitations, including:

- Lack of relevance: With a limited context window, the model may struggle to capture and maintain relevance to the broader context of a conversation or task.

- Inability to maintain context: A smaller context window can make it challenging for the model to remember and reference information from earlier parts of a conversation, leading to inconsistencies and difficulties in maintaining a coherent dialogue.

- Constraints on input-query lengths: Shorter context windows impose constraints on the length of input queries, making it difficult to provide comprehensive information or ask complex questions.

- Knowledge-base context limitations: Because of the data ingestion limit, a smaller context window can include less content from the relevant knowledge-base documents in each request.

Advantages of a bigger context window

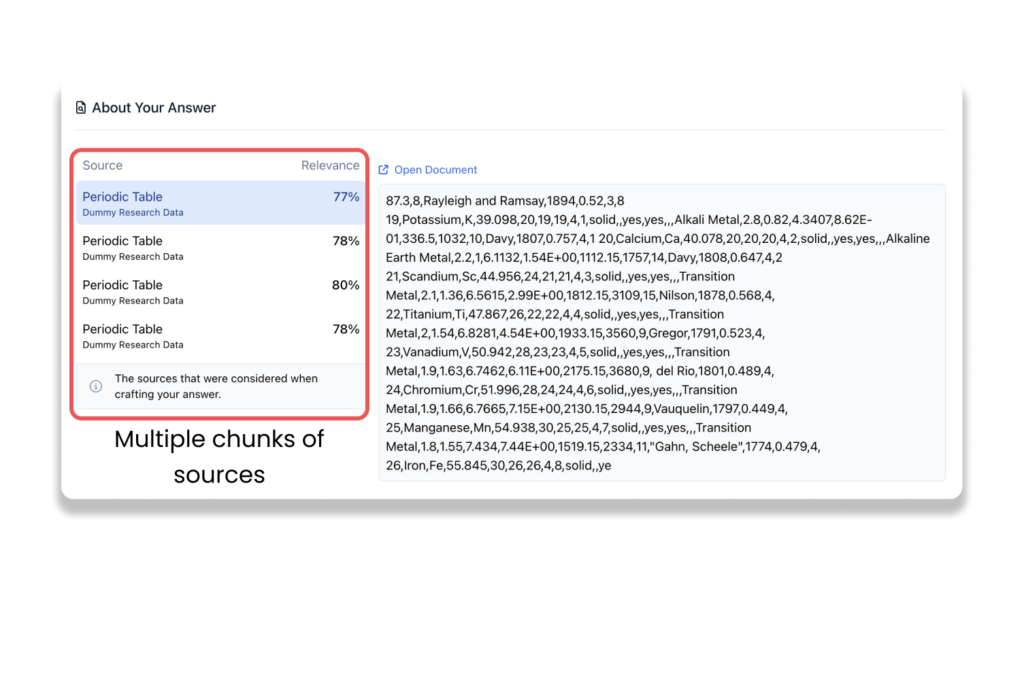

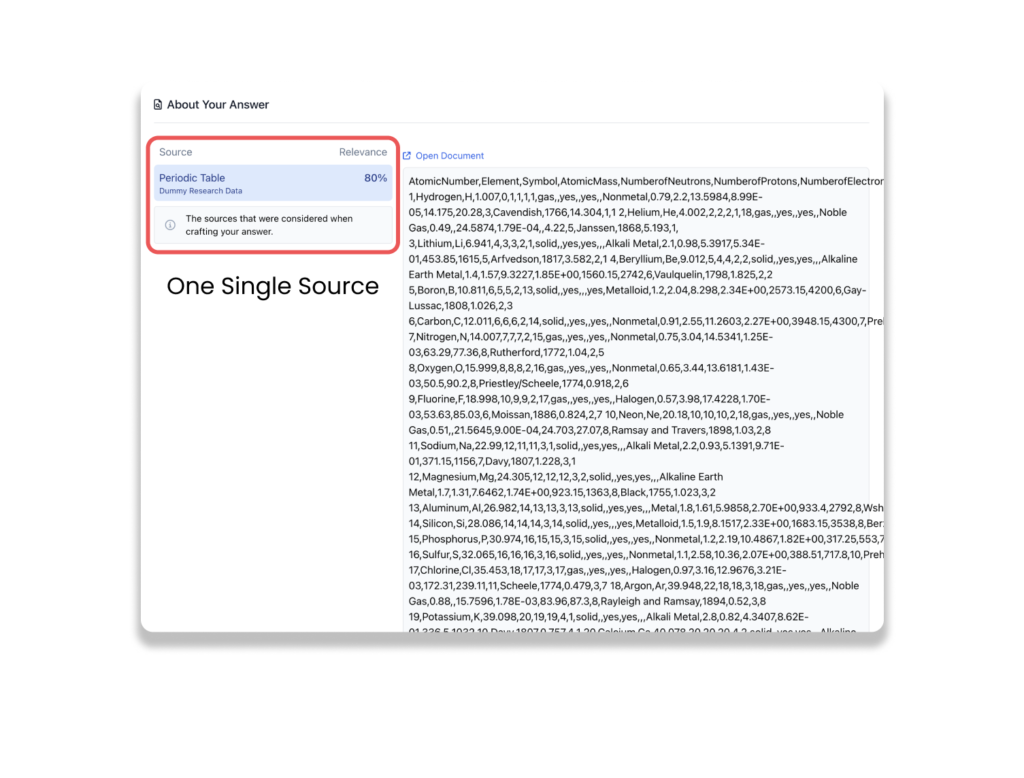

Some readers may wonder: how can GPT-3.5 work with more than 1,000 web pages and documents on Cody when its context window holds only 4,096 tokens? The answer is that providing context does not mean feeding entire documents as-is to a language model like GPT-3.5 Turbo. Instead, backend processes such as chunking, embeddings, and vector databases preprocess the data: documents are split into chunks that each stay internally relevant, and only the chunks most relevant to a query are placed into the model’s fixed context window.
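
To make the chunk-and-retrieve idea concrete, here is a minimal sketch. It is not Cody’s actual pipeline: it assumes a simple word-based chunker, uses bag-of-words cosine similarity as a stand-in for learned embeddings, and keeps the "vector database" as an in-memory list. The function names (`chunk_text`, `embed`, `retrieve`) are hypothetical.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, chunk_size: int = 50) -> list[str]:
    """Split text into chunks of at most `chunk_size` words (words stand in for tokens)."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words term-frequency vector (real systems use a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return the `top_k` chunks most similar to the query; only these go into the prompt."""
    index = [(chunk, embed(chunk)) for chunk in chunks]  # in-memory stand-in for a vector DB
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


# Illustrative documents; each is short enough to become a single chunk.
docs = [
    "Refunds are processed within five business days of the request.",
    "Our office is open Monday to Friday from nine to five.",
    "Shipping is free for orders above fifty dollars.",
]
chunks = [c for doc in docs for c in chunk_text(doc)]
print(retrieve("How long do refunds take?", chunks, top_k=1))
# ['Refunds are processed within five business days of the request.']
```

Only the retrieved chunk is sent to the model, which is why the size of the knowledge base is not bounded by the context window; the window instead limits how many retrieved chunks can be included at once.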

Even with this approach, a larger context window improves the AI’s overall performance: it accommodates larger and more complex inputs and reduces the number of vector-store lookups needed to generate a response. Since the context window covers both the input and the output, a larger window also leaves room for more elaborate, coherent responses while preserving the conversational context.
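
Because the prompt and the reply share one context window, the room left for retrieved knowledge-base chunks is roughly the window minus the system prompt and the tokens reserved for the answer. The sketch below does that arithmetic for both models; the 200-token prompt, 1,000-token reply reserve, and 500-token chunk size are illustrative assumptions, not Cody’s actual settings, and `max_chunks` is a hypothetical helper.

```python
def max_chunks(context_window: int, prompt_tokens: int, reserved_output: int, chunk_tokens: int) -> int:
    """How many fixed-size retrieved chunks fit once the prompt and the reply budget are subtracted."""
    available = context_window - prompt_tokens - reserved_output
    return max(available, 0) // chunk_tokens


# Illustrative numbers only: 200-token system prompt, 1,000 tokens reserved for the answer,
# 500-token chunks.
for name, window in [("gpt-3.5-turbo", 4_096), ("gpt-3.5-turbo-16k", 16_384)]:
    print(name, max_chunks(window, prompt_tokens=200, reserved_output=1_000, chunk_tokens=500))
# gpt-3.5-turbo 5
# gpt-3.5-turbo-16k 30
```

Under these assumptions the 16K model can draw on six times as many chunks per response, while still reserving the same space for the answer.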

A larger context window can also help reduce hallucinations that occur when a conversation exceeds the token limit and earlier context is lost.

GPT-3.5 Turbo 16K vs. GPT-4

Although gpt-3.5-turbo-16k is OpenAI’s latest release, gpt-4 still outperforms it in several respects, such as understanding visual context, creativity, coherence, and multilingual performance. Where GPT-3.5 16K has the edge is the context window: GPT-4 is currently available in the 8K variant, while the 32K variant is still being rolled out gradually.

Until the 32K version of GPT-4 becomes widely accessible, GPT-3.5 16K stands out for its larger context window. If you specifically need a more extensive context window, GPT-3.5 16K is the ideal choice.

Use cases of a bigger context window

- Customer Support: A larger context window enhances the short-term memory of the model, making it well-suited for applications involving customer support, form-filling, and user data collection. It enables the model to maintain context over a longer period, leading to more relevant responses to user inputs such as names, customer IDs, complaints, and feedback.

- Employee Training: Cody works well for employee training. Training often involves extensive data about business activities, steps, and processes, and keeping the program contextually relevant requires including the trainee’s conversation history. A larger context window allows more of that history to be included, making the training experience more comprehensive and effective.

- Data Analysis: Tasks involving financial analysis and statistical inference often require processing large volumes of data to derive meaningful insights. With a bigger context window, the model can hold more relevant information during computation, resulting in a more coherent and accurate analysis. For instance, comparing balance sheets and the overall performance of a company year over year can be executed more effectively with a larger context window.
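
The "short-term memory" in these use cases comes from resending recent conversation history with each request, trimmed to fit the window. Below is a minimal sketch of one common approach: keep the newest messages that fit a token budget. It approximates tokens by word counts, and `trim_history` is a hypothetical helper; real systems typically count tokens with the model’s tokenizer and may summarize older turns instead of dropping them.

```python
def approx_tokens(text: str) -> int:
    """Crude token estimate: one token per whitespace-separated word (an assumption)."""
    return len(text.split())


def trim_history(messages: list[dict], budget: int) -> list[dict]:
    """Keep the most recent messages whose combined estimated size fits within `budget`.

    Walks backwards from the newest message and stops at the first one that would overflow,
    so the kept history is always a contiguous, most-recent slice in original order.
    """
    kept: list[dict] = []
    used = 0
    for message in reversed(messages):
        cost = approx_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))


# Illustrative customer-support conversation.
history = [
    {"role": "user", "content": "Hi, my name is Priya and my customer ID is 4821."},
    {"role": "assistant", "content": "Thanks Priya, how can I help today?"},
    {"role": "user", "content": "My order arrived damaged."},
]
# With a small budget, the earliest turn (name and customer ID) is the first to be dropped.
print([m["role"] for m in trim_history(history, budget=12)])
# ['assistant', 'user']
```

With a budget of 30, all three messages fit, so the customer’s name and ID stay available to the model. This is the practical effect of a bigger context window in the scenarios above.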

Comparison between GPT-3.5 4K and 16K

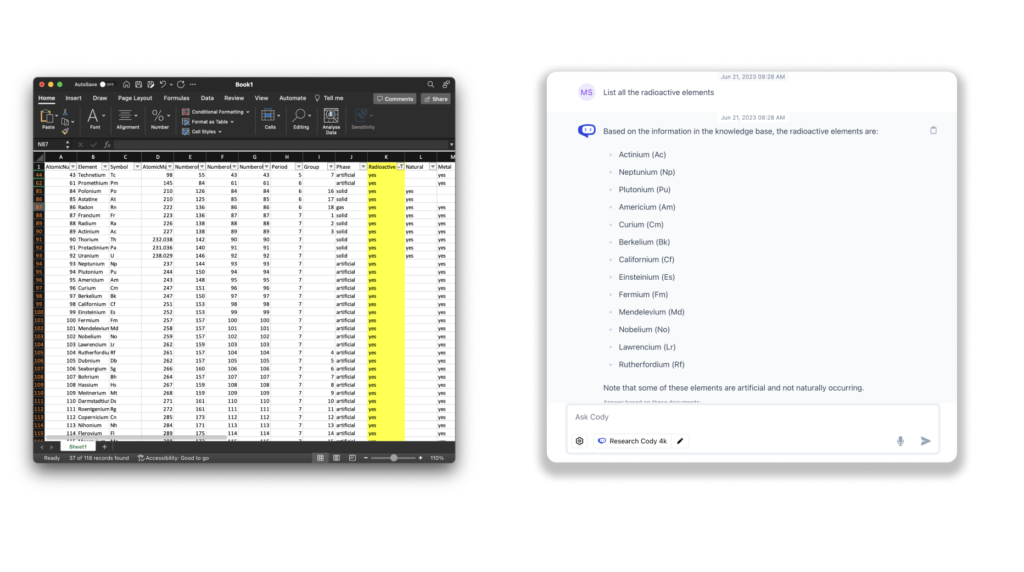

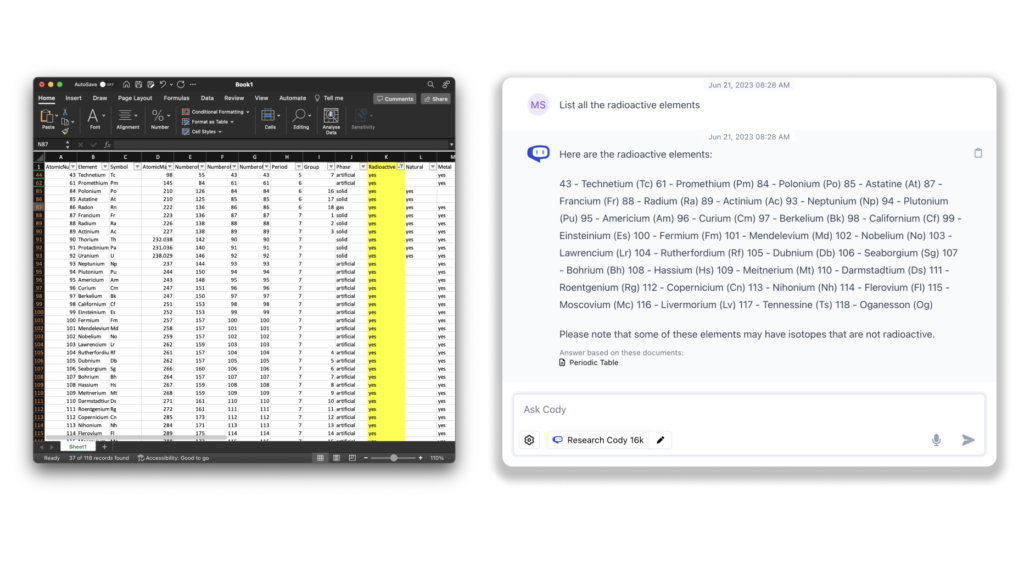

To demonstrate the improvements in the 16K model, we queried a .csv file of the periodic table containing all 118 elements and their properties.

The comparison shows that GPT-3.5 4K skipped some of the radioactive elements in its response, while GPT-3.5 16K listed almost all of the radioactive elements in the table. This illustrates how the bigger context window allows more complete and elaborate responses. It is only a small glimpse of what a 16K context window makes possible, and the range of applications is broad. With GPT-4 32K in the pipeline, the 16K model also offers a smoother transition to larger context windows.

Should you upgrade?

The larger context window is a significant update, not merely a gimmick. Better context understanding plays a crucial role in response quality, and a larger context window unlocks substantial potential for Large Language Models (LLMs). With a more extensive grasp of the conversation history and contextual cues, LLMs can deliver more accurate and contextually appropriate outputs.

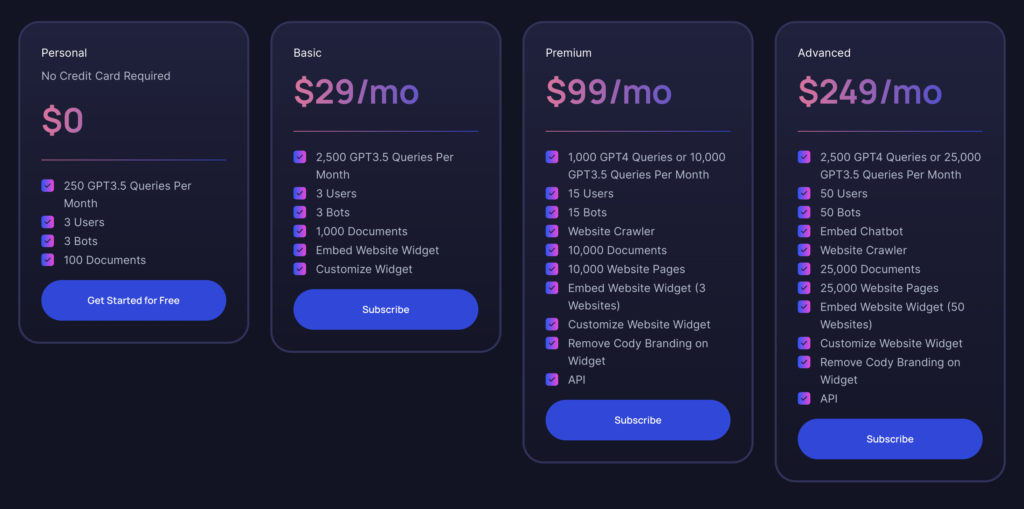

As mentioned earlier, GPT-3.5 16K is available to all users on the Basic Plan and above. If you have been using the Personal (free) plan for a while, you have already experienced Cody’s capabilities. The Basic Plan offers excellent value for money, particularly if you don’t need the additional features of GPT-4. It suits individuals building a bot as a project or as a prototype for their business, with the added model choice of GPT-3.5 16K. When we release the GPT-4 32K variant, you can upgrade to the premium plan whenever you need more tokens.

For larger enterprises, the Advanced Plan is the most powerful option, catering to resource-intensive and high-volume usage requirements. It offers comprehensive capabilities to meet the demands of large-scale operations.